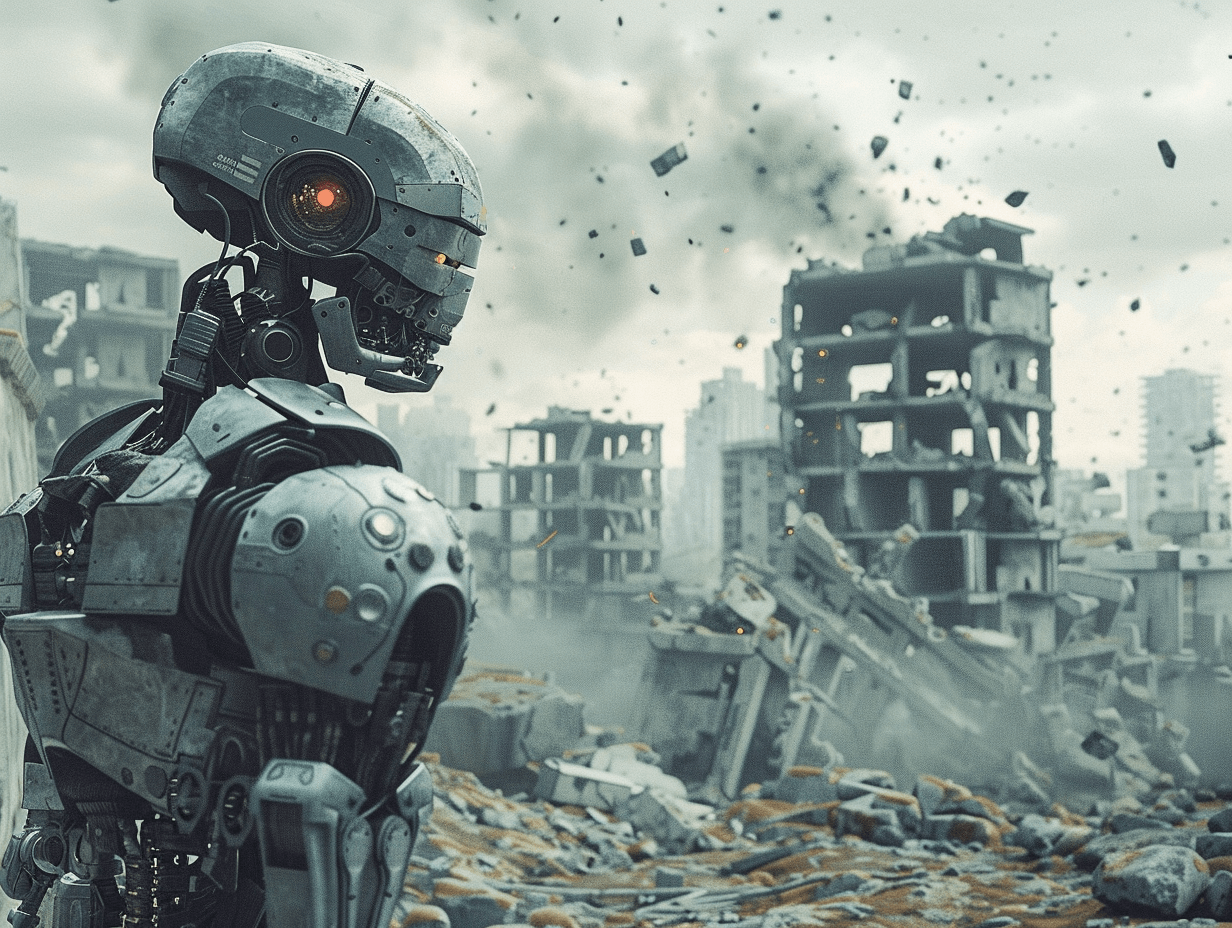

Former OpenAI employee Daniel Kokotajlo warns of a 70% chance that AI will destroy or catastrophically harm humanity. Kokotajlo and other AI experts assert their "right to warn" the public about the risks posed by AI. OpenAI is accused of ignoring safety concerns in the pursuit of artificial general intelligence (AGI).

AI's Doomsday Prediction: 70% Chance of Destruction

Daniel Kokotajlo, a former governance researcher at OpenAI, has issued a chilling warning. He believes that the odds of artificial intelligence (AI) either destroying or causing catastrophic harm to humankind are greater than a coin flip, specifically around 70%. These concerns echo broader discussions, such as "AI's Secret Revolution: Trends You Can't Miss", about the potential for unintended consequences.

The Race to AGI: Ignoring the Risks

Kokotajlo's concerns stem from OpenAI's pursuit of artificial general intelligence (AGI). AGI is a type of AI that can understand, learn, and apply knowledge across a wide range of tasks at a level equal to or beyond that of a human being. Understanding the various definitions of AGI (see "Deliberating on the Many Definitions of Artificial General Intelligence") is crucial to grasping the scope of these warnings.

Kokotajlo accuses OpenAI of ignoring the monumental risks posed by AGI. He claims that the company is "recklessly racing to be the first" to develop AGI, captivated by its possibilities but disregarding the potential dangers.

Asserting the 'Right to Warn'

Kokotajlo is not alone in his concerns. Together with other former and current employees at Google DeepMind and Anthropic, as well as Geoffrey Hinton, the so-called "Godfather of AI," he is asserting a "right to warn" the public about the risks posed by AI. This movement highlights the growing debate explored in "Why ProSocial AI Is The New ESG".

A Call for Safety: Urging OpenAI's CEO

Kokotajlo's concerns about AI's potential harm led him to personally urge OpenAI's CEO, Sam Altman, to "pivot to safety." He wanted the company to spend more time implementing guardrails to rein in the technology rather than continuing to make it smarter.

However, Kokotajlo felt that Altman's agreement was merely lip service. Fed up, Kokotajlo quit the firm in April, stating that he had "lost confidence that OpenAI will behave responsibly" as it continues trying to build near-human-level AI.

OpenAI's Response: Engaging in Rigorous Debate

In response to these allegations, OpenAI stated that they are proud of their track record in providing the most capable and safest AI systems. They agree that rigorous debate is crucial and will continue to engage with governments, civil society and other communities around the world. They also highlighted their avenues for employees to express their concerns, including an anonymous integrity hotline and a Safety and Security Committee led by members of their board and safety leaders from the company. For further reading on AI safety, the European Commission's Ethics Guidelines for Trustworthy AI provides a comprehensive framework.

The Future of AI: A Call to Action

The warnings from Kokotajlo and other AI experts underscore the need for a more cautious and responsible approach to AI development. As we race towards AGI, it is crucial to prioritise safety and address the potential risks posed by this powerful technology. This sentiment is increasingly reflected in global discussions, including those covered in "North Asia: Diverse Models of Structured Governance for AI".

Comment and Share:

What are your thoughts on the warnings from AI experts about the potential destruction or catastrophic harm caused by AI? Do you think we are doing enough to prioritise safety in AI development? We'd love to hear your thoughts.

Latest Comments (4)

This is quite a sobering read, makes you wonder if we're not just rushing headfirst into something without really thinking it through. Especially the part about "disregard for safety measures" – that sounds like a recipe for disaster. Are these companies transparent enough about their internal safety protocols, or is it all under wraps?

Honestly, this "AI race to destruction" narrative feels a bit overblown, doesn't it? While I get the concern for safety, especially with AGI, it’s not like every boffin in a lab is actively trying to unleash Skynet. We've seen similar doom-and-gloom predictions with every major tech leap, from the internet to genetic engineering. Maybe instead of just drumming up fear, these "insiders" could offer more concrete, actionable solutions beyond vague warnings. It’s easy to point fingers, harder to build a safer, more productive path forward. Just a thought from Singapore; we're quite pragmatic here.

How scary! Reading this, I can't help but think about how quickly AI is being adopted in the Philippines, especially in BPO. If these hazards are real, what does this mean for our call centres and local tech start-ups? I hope our government has a proper strategy and is not just rushing into things.

This AI doomsday talk is getting real, isn't it? Just seeing it everywhere now.