The debate over what counts as true Artificial General Intelligence is more unsettled than the technology itself.

There is no universally agreed definition of Artificial General Intelligence (AGI), despite its central place in AI debates. Shifting definitions allow companies and researchers to frame AI progress in ways that suit their own agendas. The absence of clarity risks undermining serious discourse on progress, risks, and responsibilities in AI development.

Why Defining AGI Matters

Artificial General Intelligence (AGI) is often described as the pinnacle of AI, but even this seemingly straightforward statement hides a swamp of competing interpretations. Should AGI mean machines that can think like humans? Or simply those that can outperform us in many, but not all, intellectual tasks? If we cannot settle on what AGI is, how will we ever agree when we have achieved it?

A saying often attributed to Socrates holds that the beginning of wisdom lies in the definition of terms. Today’s AI community might do well to revisit that advice. Without clarity, we are left with a noisy marketplace where headlines shout of breakthroughs, but insiders quietly admit that the cheese keeps being moved.

The Shifting Goalposts of Pinnacle AI

The AI industry has long described its progress in three stages: today’s narrow AI, tomorrow’s general AI, and the speculative possibility of superintelligence. Narrow AI, from recommendation engines to translation tools, excels in specific tasks but fails to generalise. AGI is meant to close this gap, functioning across domains with human-level adaptability. Superintelligence, in turn, refers to systems that vastly exceed human capacity.

Yet the language around AGI is anything but stable. OpenAI chief Sam Altman, for instance, has variously described AGI as systems that can “tackle increasingly complex problems, at human level, in many fields” and more recently dismissed the term as “not super useful”. Such flexibility may suit a commercial narrative, especially when each new model is marketed as inching us towards AGI. But it leaves the public and policymakers guessing.

Why Definitions Are Contested

The problem is not only linguistic but philosophical. Intelligence itself resists neat boundaries. Some researchers argue AGI should be defined as machines able to solve all human‑solvable problems. Others emphasise adaptability, autonomy, or economic usefulness. The Association for the Advancement of Artificial Intelligence (AAAI) recently conceded that there is no agreed test for AGI’s achievement, with some resorting to the phrase “we’ll know it when we see it”.

This lack of consensus creates risks. Without a shared definition, companies can declare victory on their own terms. A model that beats humans in “economically valuable work” may be hailed as AGI in one narrative, while another insists true AGI requires competence across every intellectual domain. These are not minor differences but fundamentally divergent visions of AI’s purpose.

Competing Definitions in Practice

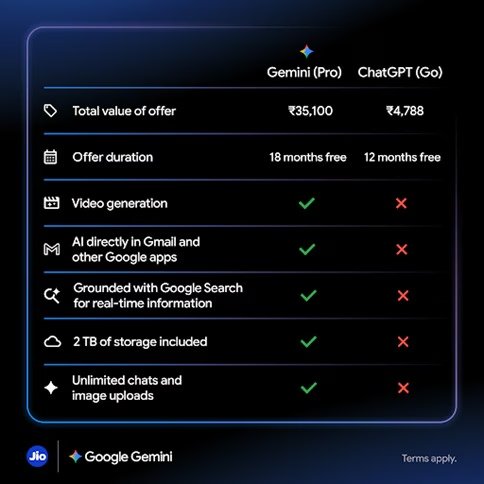

A quick survey illustrates the spread:

Gartner (2024): AGI is the hypothetical machine intelligence able to perform any intellectual task that humans can, across real or virtual environments.

Wikipedia (2025): AGI is AI capable of performing the full spectrum of cognitively demanding tasks at or above human proficiency.

IBM (2024): AGI marks the stage where AI systems can match or exceed human cognitive abilities across any task, representing the ultimate goal of AI research.

OpenAI Charter: AGI is defined as highly autonomous systems that outperform humans at most economically valuable work.

The contrast is stark. Some highlight universality, others economic productivity. Some stress parity with humans, others surpassing them. No two capture quite the same spirit.

The Danger of Moving the Cheese

Definitions matter because they anchor expectations. If AGI means “all tasks”, then we remain decades away. If it means “many fields”, companies can claim progress today. The risk, as critics point out, is that definitions become tailored to fit product cycles rather than scientific milestones. As a result, debates about progress, safety, and governance collapse into talking past one another.

The metaphor of “moving the cheese” captures this tendency. In AI, the cheese often shifts to wherever the latest model happens to land. This not only confuses outsiders but erodes trust in the field itself.

Should We Retire the Term?

Some scholars argue that the phrase AGI has become too polluted to be useful. It conjures science‑fiction imagery, invites hype, and fuels polarisation. Perhaps, they suggest, we should abandon it altogether in favour of more precise terms. Others counter that AGI has already achieved public recognition and that discarding it would only muddy the waters further. You can read more about the ongoing debate in the AI community from publications like the AI Magazine.

For now, the term seems set to persist. But if it does, the field must work harder to enclose what one writer called the “wilderness of ideas” within a coherent definition. Otherwise, debates on AI’s risks and opportunities will remain unmoored.

Next time a headline proclaims that AGI is imminent, the sensible question is not when but what the writer means by AGI. Until the field settles its terms, the debate will remain less about machines and more about semantics. And yet, those semantics may decide how governments regulate, how investors allocate billions, and how societies prepare for what comes next.

So, what definition of AGI would you accept?

Latest Comments (4)

Honestly, shouldn't we focus on making current AI helpful for everyday Filipinos, instead of getting hung up on fancy AGI debates? Just sayin'.

Spot on! The varied definitions of AGI are truly a headache, especially when you see how different folks in Singapore and across Asia use the term. It feels like everyone's got their own flavour of what it means. Maybe a proper refinement, rather than chucking it out, is the way forward.

This article hits the nail on the head. So many times I hear people here debating AGI without even agreeing on basic definitions first. It’s a real challenge, like everyone’s talking about a different animal. Refining the term, or even binning it for clearer concepts, seems like a proper good idea to move the conversation forward, especially with all the hype nowadays.

Fascinating read. It definitely feels like we're always chasing the "next big thing" in tech, and AGI is no exception. This debate over definitions is crucial, especially as AI develops so rapidly in countries like India. Clarity helps distinguish genuine progress from just hype, you know?
