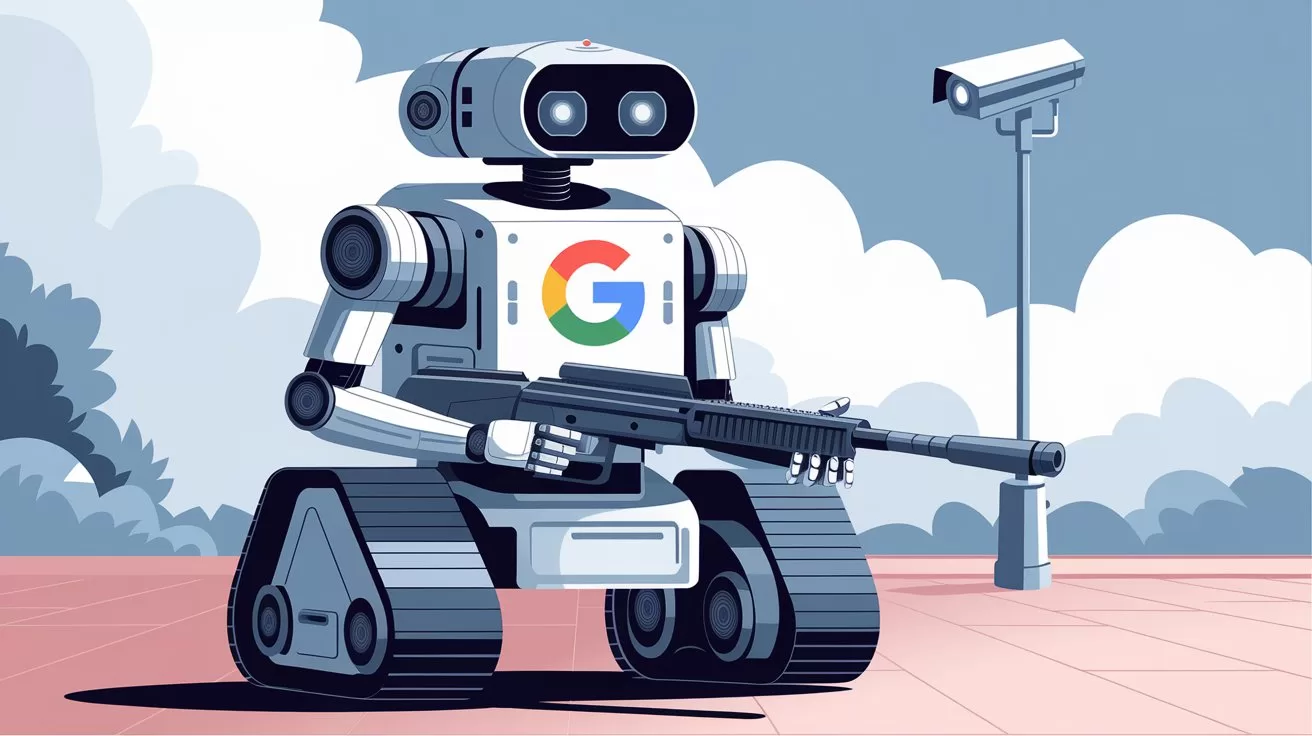

- Google has quietly removed its pledge not to use AI for weapons or surveillance.
- The original 2018 guidelines referenced human rights; now they emphasise “Bold Innovation.”
- Critics view this as Big Tech dropping any pretence of distancing itself from controversial government contracts.
- Questions loom about what this means for AI ethics and democracy.

Big Tech’s Changing Moral Compass: Project Maven

Back in 2018, Google found itself in hot water when the public learned of its involvement in Project Maven, a contract with the U.S. Department of Defense to develop AI for drone imaging. In response to the backlash, CEO Sundar Pichai laid out a set of AI principles, pledging not to use the technology for weapons, surveillance, or projects that contravene international human rights.

Fast-forward to today, and those promises have disappeared. The updated AI principles now pivot away from banning military and surveillance applications, instead extolling “Bold Innovation,” balancing benefits against “foreseeable risks,” and citing the importance of “Responsible development and deployment.” The reference to avoiding technologies that breach human rights standards has been softened, offering more leeway for Google—and possibly other tech giants—to pursue lucrative military or policing contracts.

Emphasis on Innovation Over Ethics

The newly framed goals outline three main principles, with the spotlight firmly on “Bold Innovation”—celebrating AI’s capacity to drive economic progress, improve lives, and tackle humanity’s greatest challenges. While noble in theory, critics argue that this reframing effectively dilutes the stronger language of the original guidelines.

The second principle highlights the “Responsible development and deployment” of AI, mentioning “unintended or harmful outcomes” and “unfair bias.” Yet this also appears more lenient than the previous stance. Instead of a strict refusal to engage in ethically dubious projects, Google now mentions “appropriate human oversight, due diligence, and feedback mechanisms.” This shift seems designed to minimise PR fallout rather than erect hard boundaries.

The Historical Ties

Silicon Valley’s roots in military funding date back decades, with large-scale defence contracts instrumental in fostering technological breakthroughs. But in recent years, consumer-facing tech companies often sought to distance themselves from these associations, wary of public and shareholder pushback. The latest changes suggest that Google, and arguably the rest of Big Tech, is now less concerned about being seen as collaborating with entities that use technology for surveillance and warfare. This move aligns with broader industry trends, such as SoftBank's big bet on physical AI and the growing integration of AI across sectors, including defence.

From “Don’t Be Evil” to “Don’t Be Caught”

The original motto “Don’t be evil” has been left behind, replaced with a pragmatic drive for profit and power. Google’s newly sanitised language signals a broader cultural shift in Silicon Valley, where business interests are increasingly trumping public relations concerns. From allegations of bias to potential abuse of surveillance tech, the ethical questions surrounding AI remain as pertinent as ever. For instance, discussions around AI's cognitive colonialism highlight some of these deeper concerns.

What Do You Think About Google Lifting Its AI Ban on Weapons and Surveillance?

So, are we ready to give tech giants free rein on weapons and surveillance, or is it time for stricter global regulation? Many governments are grappling with this question, as evidenced by Taiwan’s AI law, which is quietly redefining "responsible innovation." The ethical implications of AI in warfare are a growing concern among international bodies and human rights organisations (Human Rights Watch).

Latest Comments (2)

Wah, Google changing tune so fast? Wonder how this affects their "do no evil" mantra now for real.

Honestly, this news from Google doesn't surprise me one bit, yaar. It feels like a natural progression, sadly. We've seen this play out with so many tech giants – lofty ideals at the start, then the inevitable pivot towards profitability and market dominance, often at the expense of those initial ethical stances. It's almost like a race to the bottom, where anyone who doesn't engage in certain lucrative but ethically dubious areas gets left behind. This isn't just about Google; it reflects a worrying global trend where the lines between innovation and weaponisation are becoming increasingly blurred. What happens to the "don't be evil" mantra now, eh? It's all a bit disheartening, frankly speaking.