AI is shaping not just our behaviours but also our minds, raising questions about agency, culture and sovereignty

You're scrolling through your social feed while an AI algorithm decides what you see. You ask ChatGPT for advice on a personal problem, and it responds through the lens of Western individualism. Your smartphone's voice assistant struggles to grasp your accent but works perfectly for others. These are not glitches; they are signals of something deeper, something many now call AI cognitive colonialism.

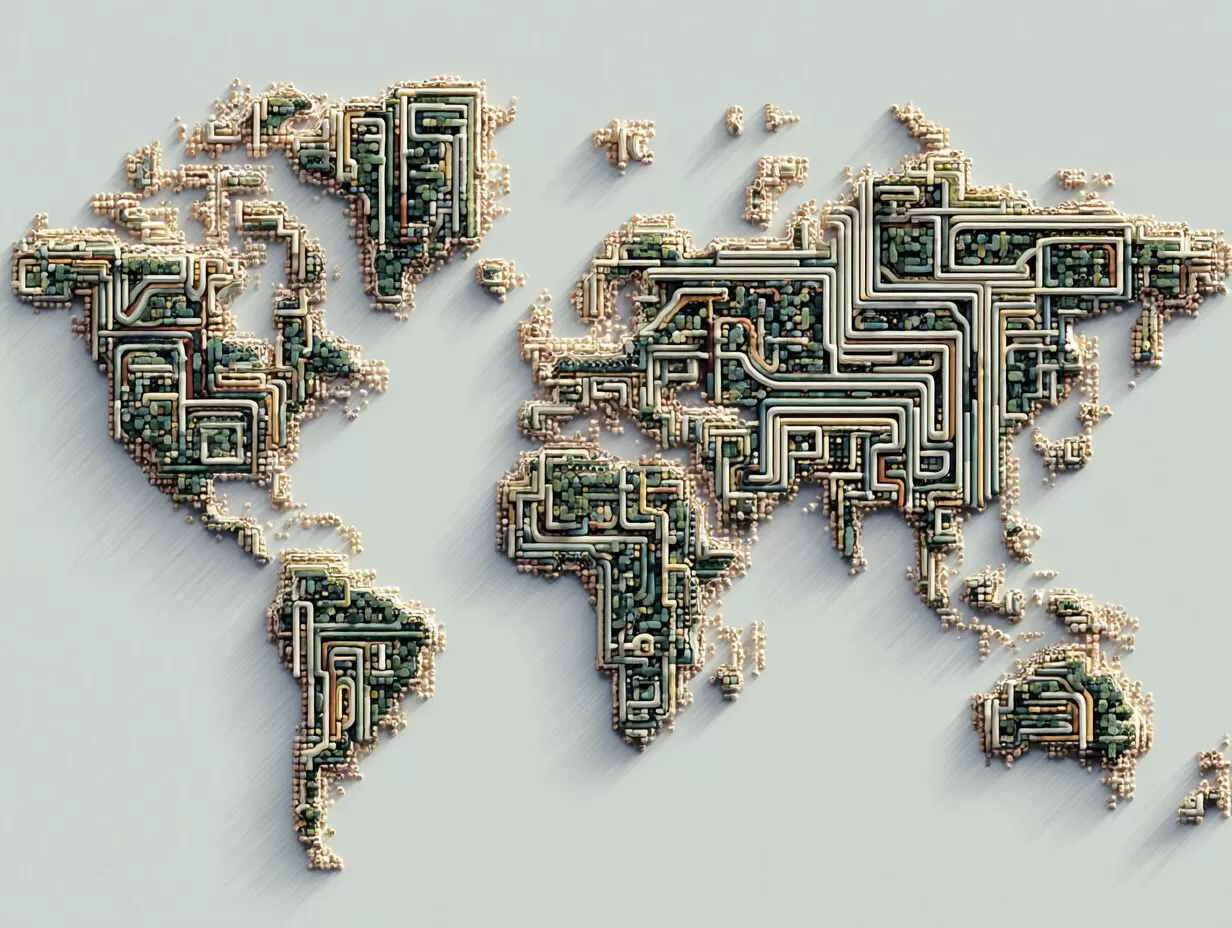

The phrase may sound dramatic, but it captures a real concern: AI is influencing how we perceive the world and how we think, with power flowing largely in one direction, from a handful of technology giants to billions of people worldwide.

- AI cognitive colonialism describes the one-way influence of global AI systems that reshape how societies think.
- Data extraction, Western bias and monocultural training threaten intellectual diversity and agency.
- Prosocial AI, tailored to communities, offers a more equal and culturally sensitive alternative.

The New Extraction Economy

Colonial powers once extracted gold and spices from faraway lands. Today, technology firms mine something more intimate: our minds. Vast quantities of digital conversations, posts and behaviours are scraped to feed AI systems that are then sold back as “services” to those very communities. But the worldview embedded in these systems is rarely theirs; it reflects the perspectives of Silicon Valley and its funders.

What appears altruistic, such as Google and Microsoft offering AI tools to schools and universities free of charge, is also a long-term play: grooming the mindsets of future users. The trade is subtle: youthful curiosity is shaped within the contours of Western values and presented as neutral technology.

Our Brain On AI

Neuroscience tells us that human brains are highly plastic, rewiring themselves in response to repeated inputs. Historically, this meant adapting to local languages, environments and traditions. Increasingly, however, it means adapting to AI-driven digital environments.

Navigation apps alter our spatial memory. Autocomplete shapes our writing. Repeated interaction with AI risks what might be called agency decay: the outsourcing of cognitive skills that once defined human independence. As reliance hardens into dependency, the line between tool and master becomes uncomfortably blurred.

The Monoculture Problem

Agricultural monocultures are fragile, prone to disease and collapse. Cognitive monocultures are no different. When AI systems, trained primarily on Western and English-speaking data, spread globally, they flatten perspectives. They implicitly decide which problems are worth solving and which solutions count as “rational.”

This creates blind spots. Consider climate change: solutions that make sense in Europe may fail in Southeast Asia if local contexts are ignored. Homogenised AI risks not just inefficiency but also injustice, as communities are left with systems unfit for their realities.

When AI Gets It Right

Yet AI is not inherently colonial. Technology itself is neutral; it is the human choices in design, training and deployment that determine whether it is oppressive or empowering. There are examples of ProSocial AI, designed not to extract but to support.

Indigenous groups in Canada are working with researchers to build AI tools that preserve endangered languages. In India, AI trained on regional health data is improving early detection of diseases. In Indonesia, local edtech firms are experimenting with AI tutors designed to work in Bahasa Indonesia, Javanese and Sundanese rather than defaulting to English, giving students tools that align with their learning realities. Japan, meanwhile, is embedding cultural ethics into AI guidelines, ensuring that harmony, collective well-being and responsibility underpin its systems.

These efforts begin with community needs rather than corporate profit margins, showing how AI can be contextual, sensitive and collaborative. Such systems are tailored, trained and tested with cultural nuance. They embody planetary dignity rather than narrow dominance.

The Resistance Toolkit

If cognitive colonialism is the threat, then resilience lies in a strengthened mental immune system. A practical “A-Armour” can help:

- Assumptions: Ask what worldview the AI reflects. Is it complete or skewed?
- Alternatives: Seek diverse human perspectives alongside AI suggestions.
- Authority: Consider who built the system, and whose voices were absent.
- Accuracy: Verify AI outputs against reliable, varied sources.
- Agenda: Follow the incentives. Who gains if you think or act in a certain way?

Such vigilance ensures AI remains a tool rather than a master.

Choosing Our Cognitive Future

AI already influences how we think. The choice now is whether this influence will expand human wisdom or narrow it into uniformity. The danger is not AI itself but its monocultural drift, a creeping homogenisation of thought.

Asia, with its immense diversity of languages, traditions and perspectives, has a unique role to play in resisting cognitive colonialism. By championing locally rooted AI, the region can preserve cognitive plurality at a moment when humanity needs it most. The UNESCO Recommendation on the Ethics of Artificial Intelligence highlights the importance of cultural diversity in AI development.

The crossroads is clear. Will AI homogenise thought into a single, exportable model, or will it nurture the diversity that makes us collectively resilient? The answer, for now, remains ours to shape.
