Deepfakes are becoming more prevalent in Asia, with both innovative and malicious applications. Detecting deepfakes is challenging due to the lack of training data and generalizable models. Politicians and celebrities are prime targets for deepfakes due to the abundance of available data. Ethical considerations and preventive measures are crucial to mitigate the risks of deepfakes.

In the rapidly evolving world of artificial intelligence (AI), deepfakes have emerged as a powerful and controversial force. These AI-generated or modified images, audio recordings, or videos can depict real or fictional people, raising both excitement and concern. As Asia embraces AI and AGI, it's essential to explore the implications of deepfakes in the region.

The Advancement of Deepfake Technology

Deepfakes have come a long way since their inception. Chenliang Xu, an associate professor of computer science at the University of Rochester, notes that generating video using AI is an ongoing research topic. His team was among the first to use artificial neural networks to generate multimodal video in 2017. They started with simple tasks like creating a moving video of a violin player from an image and audio. Now, they can generate real-time, fully drivable heads and even turn them into various styles specified by language descriptions.

However, creating deepfakes is still a complex process. Xu explains, "Generating moving videos along with corresponding audio are difficult problems on their own—and aligning them is even harder." Despite these challenges, the technology continues to advance rapidly.

The Challenges of Deepfake Detection

As deepfakes become more prevalent, the need for effective detection technologies grows. Xu's team has also developed technology for deepfake detection, but he notes that the area needs extensive further research. One of the main challenges is the lack of training data needed to build generalized deepfake detection models. On the generation side, OpenAI's Sora model is one example of the cutting edge in video generation.

"If you want to build a technology that's able to detect deepfakes, you need to create a database that identifies what are fake images and what are real images," says Xu. "That labeling requires an additional layer of human involvement that generation does not."

Moreover, creating a detector that can generalize to different types of deepfake generators is a significant hurdle. A model may perform well against known techniques but struggle with new or different models. This is particularly relevant when considering the diverse models of structured governance across North Asia and their varied approaches to AI regulation.
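The generalization gap described here can be illustrated with a toy sketch. This is not Xu's method or any real detector: the one-dimensional `score` feature, the generator names `gen_a` and `gen_b`, and every number below are made up for illustration, and the "detector" is just a threshold placed midway between the average real and average fake score in the training set. The point is only to show how a detector fitted on one generator's output can look perfect on that generator and still miss fakes from a generator it never saw.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Sample:
    score: float     # hypothetical artifact score (assumption: higher = more fake-looking)
    is_fake: bool    # human-provided label
    generator: str   # "real" for genuine media, otherwise the generator name


def fit_threshold(samples):
    """Place the decision threshold midway between the real and fake mean scores."""
    real_mean = mean(s.score for s in samples if not s.is_fake)
    fake_mean = mean(s.score for s in samples if s.is_fake)
    return (real_mean + fake_mean) / 2


def accuracy(samples, threshold):
    """Fraction of samples where 'score above threshold' matches the fake label."""
    correct = sum((s.score > threshold) == s.is_fake for s in samples)
    return correct / len(samples)


# Toy labeled data (all values invented for this sketch).
real_train = [Sample(x, False, "real") for x in (0.10, 0.20, 0.30)]
real_test = [Sample(x, False, "real") for x in (0.15, 0.25)]
gen_a_train = [Sample(x, True, "gen_a") for x in (0.80, 0.90, 1.00)]
gen_a_test = [Sample(x, True, "gen_a") for x in (0.85, 0.95)]
# A second generator whose fakes score much closer to real media.
gen_b_test = [Sample(x, True, "gen_b") for x in (0.30, 0.40)]

# Train only on real media plus fakes from the known generator.
threshold = fit_threshold(real_train + gen_a_train)

seen_acc = accuracy(real_test + gen_a_test, threshold)
unseen_acc = accuracy(real_test + gen_b_test, threshold)

print(f"threshold: {threshold:.2f}")
print(f"accuracy on seen generator (gen_a):   {seen_acc:.2f}")
print(f"accuracy on unseen generator (gen_b): {unseen_acc:.2f}")
```

In this toy setup the detector classifies every held-out sample from the known generator correctly but labels every fake from the unseen generator as real. Holding out an entire generator during evaluation, rather than a random slice of the same data, is one way to expose that kind of gap.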

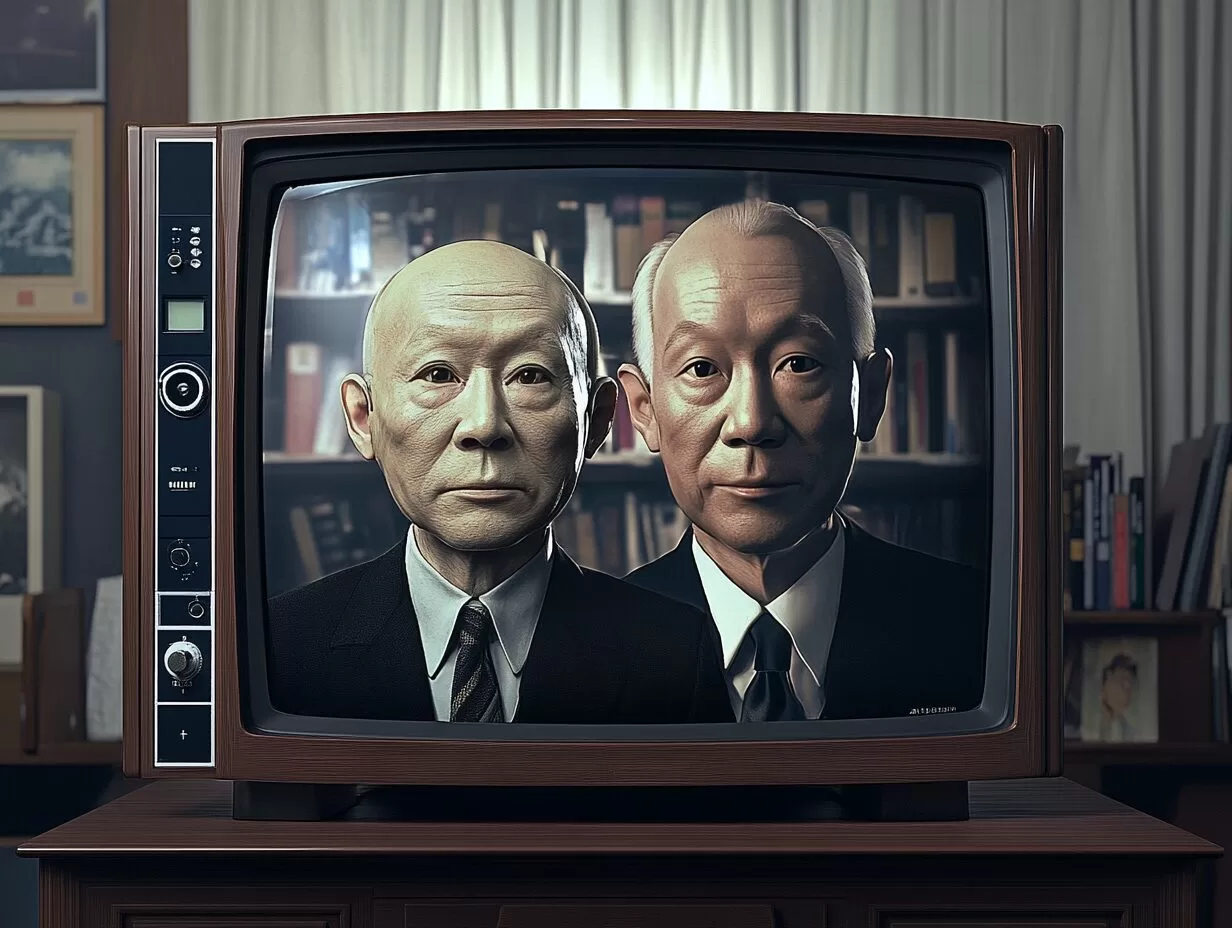

Who Are the Easiest Targets for Deepfakes?

Politicians and celebrities are the earliest and easiest targets for deepfakes. Xu explains that this is due to the abundance of data available about them. Generative AI models can use this data to learn expressions, voices, movements, and emotions, making it easier to create convincing deepfakes.

However, these deepfakes may initially be easier to spot because of the training data they're built on. Xu notes that using only high-quality photographs to train a model may result in an overly smooth style, which can be a cue that it's a deepfake. Other cues can include unnatural reactions, limited head movements, and even the number of teeth shown. But just as image generators overcame similar early tells, enough training data can mitigate these limitations. For more on spotting AI-generated content, see "Spotting AI Video: The #1 Clue."

The Ethical Concerns Surrounding Deepfakes

Deepfakes raise significant ethical concerns. Xu emphasizes the need for the research community to invest more effort into developing deepfake detection strategies and grappling with these ethical issues. The European Commission's Joint Research Centre has published extensive work on this topic, highlighting the urgent need for robust detection mechanisms and policy responses (see "Fighting disinformation: The challenge of deepfakes").

"Generative models are a tool that in the hands of good people can do good things, but in the hands of bad people can do bad things," says Xu. "The technology itself isn't good or bad, but we need to discuss how to prevent these powerful tools from ending up in the wrong hands and used maliciously."

The Future of Deepfakes in Asia

As Asia continues to embrace AI and AGI, deepfakes will likely play a significant role in the region's technological landscape. While they pose risks, they also offer opportunities for innovation. It's crucial for policymakers, researchers, and the public to engage in open dialogue about the ethical implications and preventive measures surrounding deepfakes.

Latest Comments (2)

Here in China, deepfakes are becoming a major headache for our tech giants and entertainment industry. This piece really highlights the difficult challenges ahead.

Wow, just stumbled upon this piece. It's a proper eye-opener. I'm especially curious about how we, the everyday citizens in Asian countries, can actively protect ourselves when deepfake technology gets even more sophisticated than what's mentioned here. It's a real worry, innit?
