YouTube introduces a mandatory AI disclosure policy for creators using generative AI in misleading ways.
AI labels to be prominent on health, news, elections, and finance content.
Google's collective effort to moderate AI-generated content extends beyond YouTube.

YouTube's Mandatory AI Disclosure Policy

YouTube, the Google-owned video streaming platform, now requires creators to disclose when they use generative artificial intelligence (AI) in ways that could mislead viewers. The move is meant to help viewers distinguish fact from fiction at a time when AI is increasingly used to alter content.

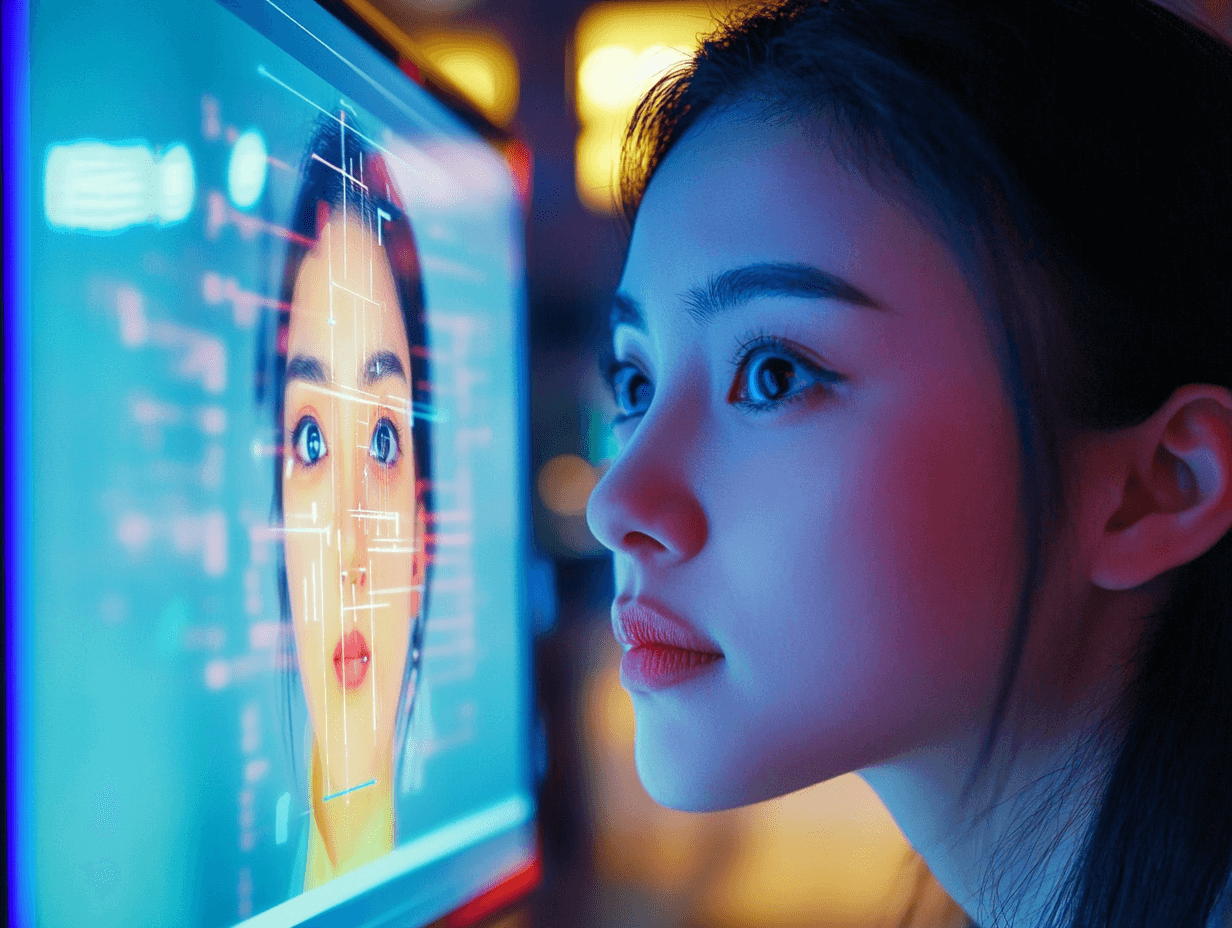

Flagging AI-Generated Content

Creators must now specify when they've used AI to make significant changes to their content. This includes altering footage of real people or events, making a person appear to say or do something they didn't, or generating a realistic-looking scene that never took place. The disclosure will be displayed in the video's expanded description, informing viewers that the content has been altered. For those interested in understanding how AI can manipulate visuals, our article on spotting AI video offers valuable insights.

Prominent AI Disclosure Labels

Not all AI-generated content requires a label. However, YouTube is making these disclosures more prominent for sensitive topics such as health, news, elections, and finance, so that viewers are well-informed about the authenticity of the content they consume in these critical areas. The policy aligns with broader discussions on ProSocial AI and responsible technology.

Google's Broader AI Moderation Efforts

Google's focus on AI moderation extends beyond YouTube. In 2023, the company asked Android app developers to provide a way to flag potentially offensive AI-generated content. This broader effort reflects Google's commitment to navigating the new AI-generated landscape responsibly. For further reading on Google's AI initiatives, you might find our piece on Google's AI Overviews insightful. The company's stance also mirrors the growing global concern over AI ethics, as highlighted in reports such as the European Commission's White Paper on Artificial Intelligence (https://ec.europa.eu/info/publications/white-paper-artificial-intelligence-european-approach-excellence-and-trust_en).
