Generative AI, a type of artificial intelligence adept at creating realistic content, is emerging as a powerful tool for malicious actors in the financial services industry. Scammers are wielding this technology to launch sophisticated attacks, making it increasingly difficult for individuals and businesses to distinguish genuine transactions from fraudulent ones. Read on to learn more about generative AI financial scams.

The Growing Threat of Deepfakes and Spear Phishing

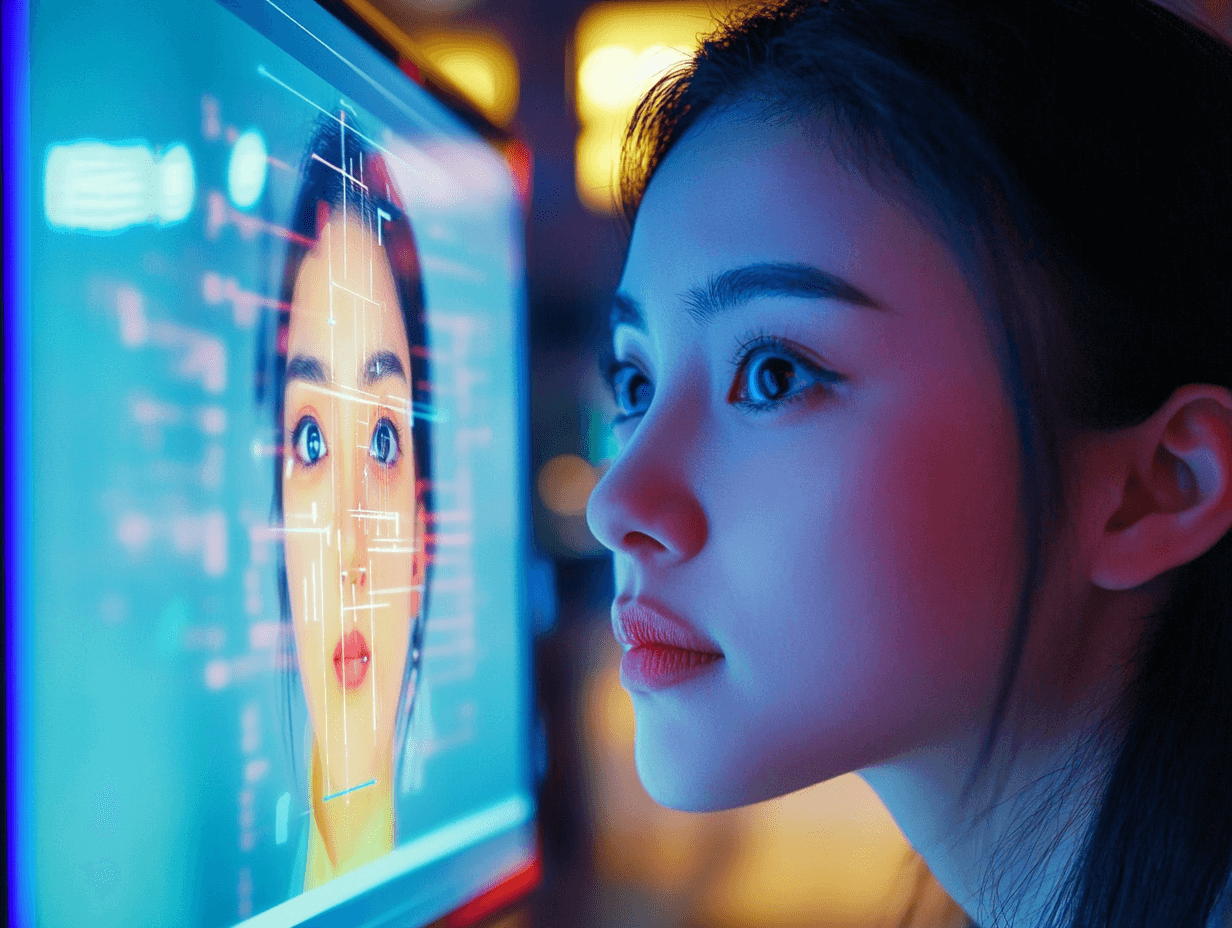

One of the most concerning applications of generative AI in financial scams is the creation of deepfakes. These are manipulated videos or audio recordings that can be used to impersonate real people, often in positions of authority like CEOs or executives. In a recent incident in Hong Kong, a finance employee was tricked into transferring US$25.6 million after receiving a seemingly authentic video call from the company's CFO, which was later discovered to be a deepfake.

Generative AI also enables criminals to craft highly personalised spear-phishing emails. These emails target specific individuals or organisations and often contain stolen information or plausible details gathered from readily available online data. Because AI-generated content makes these emails more credible, they are more likely to bypass traditional security measures and lead to financial losses.

Automation and APIs: Amplifying the Problem

While generative AI enhances the credibility of scams, the scale of the problem is further amplified by automation and the proliferation of online payment platforms. Criminals can now leverage AI to mass-produce phishing emails with minimal effort, significantly increasing the chances of successfully ensnaring unsuspecting victims. Additionally, the rise of Application Programming Interfaces (APIs) in the financial sector creates new vulnerabilities that can be exploited by malicious actors.

The Fight Back Against Generative AI Financial Scams: AI-powered Solutions and Enhanced Authentication

The financial industry is not sitting idly by. Several organisations are developing countermeasures powered by their own generative AI models to detect and prevent fraudulent transactions. These models can identify anomalous patterns in financial activity and flag suspicious accounts used to launder stolen funds. You can learn more about how AI is changing financial security.
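To make "identifying anomalous patterns" a little more concrete, here is a minimal sketch in Python. It is not a generative AI model and not any institution's actual system: it swaps in a simple statistical stand-in (a modified z-score based on the median absolute deviation) to show the general idea of flagging transactions that sit far outside an account's usual range. The function name, the sample amounts, and the threshold of 3.5 are all assumptions chosen for illustration.

```python
from statistics import median


def flag_anomalous_amounts(amounts, threshold=3.5):
    """Return the indices of amounts that look unusual for this account.

    Uses a modified z-score built on the median absolute deviation (MAD),
    which is less distorted by a single huge transfer than a plain mean.
    The threshold of 3.5 is an illustrative assumption, not an industry rule.
    """
    if not amounts:
        return []

    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)

    if mad == 0:
        # Typical amounts are identical, so anything different stands out.
        return [i for i, a in enumerate(amounts) if a != med]

    return [
        i
        for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical account history: routine payments plus one very large transfer.
history = [120, 95, 130, 110, 105, 25_000, 98]
print(flag_anomalous_amounts(history))
```

In this made-up history only the 25,000 transfer is flagged. Real fraud models draw on far richer signals (recipient history, device, timing), but the principle of comparing new activity against an established baseline is the same.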

Furthermore, companies are exploring enhanced authentication methods to distinguish real identities from deepfaked ones. These methods might involve incorporating biometric authentication, such as voice recognition or facial recognition, into the verification process. For businesses, understanding the question every worker needs to answer, "What is your non-machine premium?", can be key to adapting.

Protecting Yourself from AI-powered Scams

While the fight against AI-powered financial scams continues to evolve, individuals and businesses can take proactive steps to protect themselves:

- Be cautious of unsolicited communication: Regardless of the sender, whether via email, phone call, or video call, verify the legitimacy of any request for financial information or money transfer before acting.
- Implement strong authentication protocols: Businesses should enforce multi-factor authentication and establish clear procedures for verifying financial transactions, especially those involving large sums of money.
- Stay informed: Keep yourself updated on the latest scamming tactics and educate others about these emerging threats. This is especially relevant in regions like Southeast Asia, where AI's trust deficit is a growing concern.
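For businesses, the advice above on multi-factor authentication and clear verification procedures can be written down as an explicit rule rather than left to judgment in the moment. The Python sketch below is one hypothetical way to express such a policy. The names `TransferRequest` and `may_execute`, the fields, and the 10,000 threshold are all assumptions made for illustration, not a standard or any company's real procedure.

```python
from dataclasses import dataclass

# Hypothetical cut-off above which extra verification is required.
LARGE_TRANSFER_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    amount: float
    channel: str             # how the request arrived, e.g. "email" or "video_call"
    mfa_passed: bool         # requester completed multi-factor authentication
    callback_verified: bool  # confirmed via a contact detail already on file


def may_execute(request: TransferRequest) -> bool:
    """Apply the illustrative policy: MFA always, plus a callback for large sums."""
    if not request.mfa_passed:
        return False
    if request.amount >= LARGE_TRANSFER_THRESHOLD:
        # A convincing call or video is not proof of identity, so large
        # transfers also need confirmation through a separate, known channel.
        return request.callback_verified
    return True


# A large request made over a video call, with no independent callback yet.
urgent = TransferRequest(
    amount=25_600_000, channel="video_call", mfa_passed=True, callback_verified=False
)
print(may_execute(urgent))
```

Under this rule the large video-call request is held until someone confirms it through a channel the requester does not control, which is the kind of check that a deepfaked call on its own cannot satisfy.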

The Future of AI and Financial Security Amid Generative AI Financial Scams

The rapid development of generative AI poses a significant challenge to traditional security measures in the financial sector. While businesses and individuals adapt, continuous vigilance and awareness remain crucial to thwarting these evolving scams. As we navigate this rapidly changing landscape, one question remains: Will AI become the ultimate weapon in the fight against financial crime, or will it simply equip criminals with more sophisticated tools? Only time will tell. For more insights into the broader impact of AI, consider how AI is recalibrating the value of data.

Will generative AI make traditional security measures obsolete in the fight against financial scams? Let us know in the comments below!

Latest Comments (6)

This piece really hits home, doesn't it? It's not just financial fraud, mind you. Here in India, we're seeing deepfakes weaponised in all sorts of ways, from political disinformation to personal vendettas. My cousin's neighbour, bless her heart, nearly lost her life savings to a scammer impersonating her son using AI voice cloning just last month. It’s terrifying how easily these sophisticated tools can be exploited. This isn't just about protecting our bank accounts anymore; it's about safeguarding trust in the digital age. We need robust defenses and swift education, both in the cities and in our villages, before things get even more out of hand.

Spot on. This issue has really ballooned, especially with new tech making it easier for fraudsters. It's a proper headache for our financial institutions.

Wow, this is intense. Just stumbled across this, and it really makes you wonder: beyond the tech, how well are our current legal frameworks in Singapore and broader Asia actually equipped to handle such rapidly evolving AI-powered fraud? It's a proper wild west out there.

Ah, *bonjour*, everyone. This article on deepfakes and AI in financial fraud in Asia really caught my eye. It’s funny, I was just reading something similar last week, about how these AI tools are getting so advanced. Here in France, we’re keeping a close watch on these developments too, as they’re not just a problem for Asia, are they? It’s a worldwide issue, this business of digital deception. The article highlights the sophistication, and that’s the scary *bit*. It makes you wonder how we’ll ever truly tell what’s real from what’s not, especially with voice and video. A proper *casse-tête*, this whole thing.

Wow, just came across this. It's really concerning how quickly deepfakes are becoming a weapon for these scammers. Heard a lot of stories back home about folks getting tricked; it's proper scary to think how believable these AI voices and faces are. Businesses here gotta really up their game against this.

This is really worrying, lah. Just read this article and it's confirming my fears about generative AI. Scammers here are always finding new ways, and deepfakes just make it so much harder to spot. I was just thinking about this the other day, actually. Definitely need to keep an eye on this space.
