Learning

Google Gemini: How To Maximise Its Potential

Exploring Google Gemini AI, its unique features, and its applications and potential in Asia’s growing tech industry.

Published 1 year ago by AIinAsia

TL;DR: Google Gemini AI

- Gemini offers unique capabilities, despite being less popular than ChatGPT and Copilot.

- It can answer queries, generate content, and integrate with Google apps, making it a versatile AI tool.

- Users can optimise their Gemini experience by rating responses, modifying answers, using voice commands, and managing conversations.

- Google Gemini’s potential in Asia’s growing tech industry is significant, with diverse applications across sectors.

Introduction:

In this article, we delve into Google Gemini, an AI tool that’s making a mark in the tech industry, particularly in Asia. While it may not be as well-known as OpenAI’s ChatGPT or Microsoft’s Copilot, Google Gemini offers a unique set of features that can make your life more convenient and efficient.

What is Google Gemini?

Google Gemini, formerly Bard, is an AI chatbot that you can interact with by typing or by voice, and that can read its responses aloud. It can provide information, generate content, and integrate with other Google apps and services, making it a versatile AI tool.

Getting Started with Google Gemini:

To access Gemini, visit the Gemini website and log in with your Google account. Once logged in, you can ask questions, provide your location for localised results, and even ask Gemini to compose content for you.

Optimising Your Gemini AI Experience:

Here are 14 ways to make the most of Google Gemini:

- Rate responses

- Modify Gemini’s responses

- Ask Gemini to Google it

- View other drafts

- Speak your request

- Have Gemini read its response aloud

- Revise your question

- Ask Gemini to revise a specific part of the response

- Get local information with precise location

- Upload images to Gemini

- Copy a response

- Publish a response

- Export a response

- Manage your conversations

Google Gemini AI in Asia’s Tech Landscape:

Asia, home to a rapidly growing tech industry, is a critical region for AI and AGI developments. Google Gemini’s multilingual capabilities make it a valuable tool in this diverse region. In India, for example, Gemini can provide information in Hindi and English, making it accessible to a wider audience.

Gemini’s potential applications in Asia span various sectors. For education, it can help students learn and revise concepts. In business, it can assist in drafting emails or generating reports. And in the creative industry, it can aid in brainstorming ideas and creating content.

Case Study: Gemini AI in Asian Education:

Consider a school in Singapore where teachers use Gemini to create personalised learning materials for students. By asking Gemini to generate content based on specific topics and learning levels, teachers can provide resources tailored to each student’s needs, enhancing their learning experience.

The Future of Google Gemini in Asia:

As AI and AGI continue to evolve, so too will Google Gemini. With ongoing advancements in machine learning and natural language processing, we can expect Gemini to become even more intelligent, responsive, and user-friendly.

For tech-savvy individuals in Asia, this means more opportunities to leverage Gemini’s capabilities in their daily lives, from work and study to entertainment and communication.

Conclusion:

Google Gemini is a powerful AI tool that can revolutionise the way you interact with information. With its unique features and seamless integration with Google apps, it’s a tool worth exploring for tech enthusiasts in Asia and beyond.

Comment and Share:

Have you tried Google Gemini yet? Share your experiences in the comments below! Don’t forget to subscribe for updates on AI and AGI developments. We’d love to hear your thoughts on the future of these technologies in Asia.

You may also like:

- What is Google Gemini?

- Google’s Gemini: A Game-Changer for AI in Asia

- Google Sets Sights on Leading Global AI Development by 2024

- Or try Google Gemini AI for free by tapping here.

Author

Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.

You may like

-

Which ChatGPT Model Should You Choose?

-

GPT-4.5 is here! A first look vs Gemini vs Claude vs Microsoft Copilot

-

How To Start Using AI Agents To Transform Your Business

-

Google’s New AI Search Mode—An Early Sneak Peek

-

ChatGPT’s New Custom Traits: What It Means for Personalised AI Interaction

-

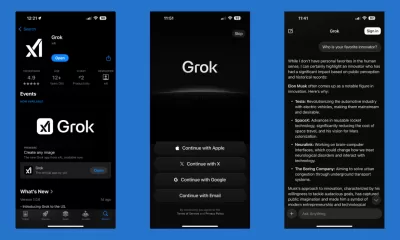

Grok AI Goes Free: Can It Compete With ChatGPT and Gemini?

Business

Build Your Own Agentic AI — No Coding Required

Want to build a smart AI agent without coding? Here’s how to use ChatGPT and no-code tools to create your own agentic AI — step by step.

Published 14 hours ago on May 9, 2025 by AIinAsia

TL;DR — What You Need to Know About Agentic AI

- Anyone can now build a powerful AI agent using ChatGPT — no technical skills needed.

- Tools like Custom GPTs and Make.com make it easy to create agents that do more than chat — they take action.

- The key is to start with a clear purpose, test it in real-world conditions, and expand as your needs grow.

Anyone Can Build One — And That Includes You

Not too long ago, building a truly capable AI agent felt like something only Silicon Valley engineers could pull off. But the landscape has changed. You don’t need a background in programming or data science anymore — you just need a clear idea of what you want your AI to do, and access to a few easy-to-use tools.

Whether you’re a startup founder looking to automate support, a marketer wanting to build a digital assistant, or simply someone curious about AI, creating your own agent is now well within reach.

What Does ‘Agentic’ Mean, Exactly?

Think of an agentic AI as something far more capable than a standard chatbot. It’s an AI that doesn’t just reply to questions — it can actually do things. That might mean sending emails, pulling information from the web, updating spreadsheets, or interacting with third-party tools and systems.

The difference lies in autonomy. A typical chatbot might respond with a script or FAQ-style answer. An agentic AI, on the other hand, understands the user’s intent, takes appropriate action, and adapts based on ongoing feedback and instructions. It behaves more like a digital team member than a digital toy.

Step 1: Define What You Want It to Do

Before you dive into building anything, it’s important to get crystal clear on what role your agent will play.

Ask yourself:

- Who is going to use this agent?

- What specific tasks should it be responsible for?

- Are there repetitive processes it can take off your plate?

For instance, if you run an online business, you might want an agent that handles frequently asked questions, helps users track their orders, and flags complex queries for human follow-up. If you’re in consulting, your agent could be designed to book meetings, answer basic service questions, or even pre-qualify leads.

Be practical. Focus on solving one or two real problems. You can always expand its capabilities later.

Step 2: Pick a No-Code Platform to Build On

Now comes the fun part: choosing the right platform. If you’re new to this, I recommend starting with OpenAI’s Custom GPTs — it’s the most accessible option and designed for non-coders.

Custom GPTs allow you to build your own version of ChatGPT by simply describing what you want it to do. No technical setup required. You’ll need a ChatGPT Plus or Team subscription to access this feature, but once inside, the process is remarkably straightforward.

If you’re aiming for more complex automation — such as integrating your agent with email systems, customer databases, or CRMs — you may want to explore other no-code platforms like Make.com (formerly Integromat), Dialogflow, or Bubble.io. These offer visual builders where you can map out flows, connect apps, and define logic — all without needing to write a single line of code.

Step 3: Use ChatGPT’s Custom GPT Builder

Let’s say you’ve opted for the Custom GPT route — here’s how to get started.

First, log in to your ChatGPT account and select “Explore GPTs” from the sidebar. Click on “Create,” and you’ll be prompted to describe your agent in natural language. That’s it — just describe what the agent should do, how it should behave, and what tone it should take. For example:

“You are a friendly and professional assistant for my online skincare shop. You help customers with questions about product ingredients, delivery options, and how to track their order status.”

Once you’ve set the description, you can go further by uploading reference materials such as product catalogues, FAQs, or policies. These will give your agent deeper knowledge to draw from. You can also choose to enable additional tools like web browsing or code interpretation, depending on your needs.

Then, test it. Interact with your agent just like a customer would. If it stumbles, refine your instructions. Think of it like coaching — the more clearly you guide it, the better the output becomes.
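You don't need code for any of this, but if you like to think in structure, here is a small optional sketch of how the answers from Step 1 could be turned into a description you paste into the builder. The `AgentBrief` class and its field names are made up for illustration; they are not part of ChatGPT or any OpenAI tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentBrief:
    """Hypothetical container for the answers to the Step 1 questions."""

    role: str
    audience: str
    tasks: List[str] = field(default_factory=list)
    tone: str = "friendly and professional"
    escalate_when: str = "the question is complex or outside these tasks"

    def to_instructions(self) -> str:
        """Render the brief as plain-language instructions for the builder."""
        task_lines = "\n".join(f"- {task}" for task in self.tasks)
        return (
            f"You are a {self.tone} assistant for {self.role}.\n"
            f"You help {self.audience} with:\n{task_lines}\n"
            f"If {self.escalate_when}, say so and suggest contacting a human."
        )


brief = AgentBrief(
    role="my online skincare shop",
    audience="customers",
    tasks=[
        "questions about product ingredients",
        "delivery options",
        "how to track their order status",
    ],
)
print(brief.to_instructions())
```

The printed text is just a starting point. Paste it into the description box, test it as a customer would, and adjust the wording where the agent stumbles.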

Step 4: Go Further with Visual Builders

If you’re looking to connect your agent to the outside world — such as pulling data from a spreadsheet, triggering a workflow in your CRM, or sending a Slack message — that’s where tools like Make.com come in.

These platforms allow you to visually design workflows by dragging and dropping different actions and services into a flowchart-style builder. You can set up scenarios like:

- A user asks the agent, “Where’s my order?”

- The agent extracts key info (e.g. email or order number)

- It looks up the order via an API or database

- It responds with the latest shipping status, all in real time

The experience feels a bit like setting up rules in Zapier, but with more control over logic and branching paths. These platforms open up serious possibilities without requiring a developer on your team.
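For the curious, the "Where's my order?" scenario can be sketched in a few lines of Python to show the logic a visual builder is wiring up for you. Everything here is an assumption for illustration: the `ORDERS` dictionary stands in for a real shop API or database, and the order number format (one letter plus four digits) is invented.

```python
import re
from typing import Optional

# Hypothetical order data; a real workflow would query a shop API or database.
ORDERS = {
    "A1001": "Shipped, arriving Thursday",
    "A1002": "Processing at warehouse",
}

# Assumed order number format: one letter followed by four digits.
ORDER_NUMBER = re.compile(r"\b[A-Z]\d{4}\b")


def extract_order_number(message: str) -> Optional[str]:
    """Pull the first order number out of a free-text message, if any."""
    match = ORDER_NUMBER.search(message.upper())
    return match.group(0) if match else None


def handle_message(message: str) -> str:
    """Extract the key info, look up the order, and reply with its status."""
    order_number = extract_order_number(message)
    if order_number is None:
        return "Could you share your order number so I can look it up?"
    status = ORDERS.get(order_number)
    if status is None:
        # Unknown orders are flagged for human follow-up instead of guessed at.
        return f"I couldn't find order {order_number}. I'll pass this to a human colleague."
    return f"Order {order_number}: {status}."


print(handle_message("Where's my order? It's a1001"))
```

Each function maps to one box in the flowchart: extract, look up, respond. The fallback branches are the "logic and branching paths" that visual builders let you draw instead of write.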

Step 5: Train It, Test It, Then Launch

Once your agent is built, don’t stop there. Test it with real people — ideally your target users. Watch how they interact with it. Are there questions it can’t answer? Instructions it misinterprets? Fix those, and iterate as you go.

Training doesn’t mean coding — it just means improving the agent’s understanding and behaviour by updating your descriptions, feeding it more examples, or adjusting its structure in the visual builder.

Over time, your agent will become more capable, confident, and useful. Think of it as a digital intern that never sleeps — but needs a bit of initial training to perform well.

Why Build One?

The most obvious reason is time. An AI agent can handle repetitive questions, assist users around the clock, and reduce the strain on your support or operations team.

But there’s also the strategic edge. As more companies move towards automation and AI-led support, offering a smart, responsive agent isn’t just a nice-to-have — it’s quickly becoming an expectation.

And here’s the kicker: you don’t need a big team or budget to get started. You just need clarity, curiosity, and a bit of time to explore.

Where to Begin

If you’ve got a ChatGPT Plus account, start by building a Custom GPT. You’ll get an immediate sense of what’s possible. Then, if you need more, look at integrating Make.com or another builder that fits your workflow.

The world of agentic AI is no longer reserved for the technically gifted. It’s now open to creators, business owners, educators, and anyone else with a problem to solve and a bit of imagination.

What kind of AI agent would you build — and what would you have it do for you first? Let us know in the comments below!

You may also like:

- How To Start Using AI Agents To Transform Your Business

- Revolution Ahead: Microsoft’s AI Agents Set to Transform Asian Workplaces

- AI Chatbots: 10 Best ChatGPTs in the ChatGPT Store

- Or try this out now in ChatGPT.


Learning

Your Next Computer Will Be AI Powered Glasses: The Future of AI + XR Is Here (And It’s Wearable)

Discover how AI-powered smart glasses and immersive XR headsets are transforming everyday computing. Watch Google’s jaw-dropping TED demo featuring Android XR, Gemini AI, and the future of augmented intelligence.

Published 1 week ago on May 2, 2025 by AIinAsia

How AI-powered smart glasses and spatial computing are redefining the next phase of the computing revolution.

TL;DR:

Forget everything you thought you knew about virtual reality. The real breakthrough? Smart glasses that remember your world. Headsets that organise your life. An AI assistant that sees, understands, and acts — on your terms. In this jaw-dropping live demo from TED, Google’s Shahram Izadi and team unveil Android XR, the fusion of augmented reality and AI that turns everyday computing into a seamless, immersive, context-aware experience.

▶️ Watch the full mind-blowing demo at the end of this article. Trust us — this is the one to share.

Why This Video Matters

For decades, computing has revolved around screens, keyboards, and siloed software. This TED Talk marks a hard pivot from that legacy — unveiling what comes next:

- Lightweight smart glasses and XR headsets

- Real-time, multimodal AI assistants (powered by Gemini)

- A future where tech doesn’t just support you — it remembers for you, translates, navigates, and even teaches

It’s not just augmented reality. It’s augmented intelligence.

Key Takeaways From the Presentation

1. The Convergence Has Arrived

AI and XR are no longer developing in parallel — they’re merging. With Android XR, co-developed by Google and Samsung, we see a unified operating system designed for everything from smart glasses to immersive headsets. And yes, it’s conversational, multimodal, and wildly capable.

2. Contextual Memory: Your AI Remembers What You Forget

In a standout demo, Gemini — the AI assistant — remembers where a user left their hotel keycard or which book was on a shelf moments ago. No manual tagging. No verbal cues. Just seamless, visual memory. This has massive implications for productivity, accessibility, and everyday utility.

3. Language Barriers? Gone.

Live translation, real-time multilingual dialogue, and accent-matching voice output. Gemini doesn’t just translate signs — it speaks with natural fluency in your dialect. This opens up truly global communication, from travel to customer support.

4. From Interfaces to Interactions

Say goodbye to clicking through menus. Whether on a headset or glasses, users interact using voice, gaze, and gesture. You’re not navigating a UI — you’re having a conversation with your device, and it just gets it.

5. Education + Creativity Get a Boost

From farming in Stardew Valley to identifying snowboard tricks on the fly, the demos show how AI can become a tutor, a co-pilot, and even a narrator. Whether you’re exploring Cape Town or learning to grow parsnips, Gemini is both informative and entertaining.

Why This Matters for Asia

The fusion of XR and AI is particularly exciting in Asia, where mobile-first users demand intuitive, culturally fluent, and multilingual tech. With lightweight wearables and hyper-personal AI, we’re looking at:

- Inclusive education tools for remote learners

- Smarter workforce training across languages and contexts

- Digital companions that can serve diverse populations — from elders to Gen Z creators

As Google rolls out this tech via Android XR, the opportunities for Asian developers, brands, and educators are immense.

Watch It Now: The Full TED Talk

Title: This is the moment AI + XR changes everything

Speaker: Shahram Izadi, Director of AR/XR at Google

Event: TED2025

Runtime: ~17 minutes of pure wow

Final Thought

What’s most striking isn’t the hardware — it’s the human experience. Gemini doesn’t feel like software. It feels like a colleague, a coach, a memory aid, a translator, and a co-creator all in one.

We’re not just augmenting our surroundings.

We’re augmenting ourselves.

—

👀 Over to You:

What excites (or concerns) you most about AI-powered XR and smart glasses? Could this be the beginning of post-smartphone computing?

Drop your thoughts in the comments below!

You may also like:

- Will Meta’s New AR Glasses Replace Smartphones by 2027?

- Meta’s AI-powered Smart Shades with Ray-Ban

- Perplexity Assistant: The New AI Contender Taking on ChatGPT and Gemini


Learning

Beginner’s Guide to Using Sora AI Video

This friendly guide covers features and tips to help you transform simple text prompts into visually stunning videos while using Sora AI.

Published 3 months ago on February 14, 2025 by AIinAsia

Hello, lovely readers! If you’ve ever dreamt of creating lively, imaginative videos straight from simple text prompts, then Sora AI is about to become your new best friend. Developed by OpenAI, the Sora AI text-to-video generator lets you transform words into dynamic video content. But before you dive in, there are a few tricks of the trade that’ll help you get the most out of this cutting-edge tool.

Table of Contents

- What Is Sora AI?

- Getting Started with Sora AI

- Crafting Effective Prompts

- Advanced Features of Sora AI

- Limitations of Sora AI

- Key Differences: Original Sora vs. Sora Turbo

- Incorporating Personal Assets

- General Guidelines for All Prompts

- Category-Specific Tips

- Prompt Refinement Checklist

- Real-World Applications of Sora AI

- Conclusion & Next Steps

What Is Sora AI?

Picture this: a magical AI tool that can generate videos from simple text descriptions, courtesy of OpenAI. Much like text-to-image generators (e.g., DALL·E or Midjourney), Sora AI uses a diffusion model to take your prompt (something like “A cat playing the piano on a moonlit rooftop”) and transform it into a short video clip.

- Creative Storytelling: Sora excels at conjuring cinematic or whimsical visuals.

- Cinematic Effects: You can try out film noir, 3D animation, or even a painterly vibe.

- Animation of Still Images: Animate a static photo (say, your favourite landmark) and watch it come to life!

Do keep in mind that Sora does have its quirks:

- Human Imagery Restrictions: OpenAI is quite cautious about privacy and ethics, so prompts featuring people may be blocked.

- Occasional Inconsistencies: Some videos end up looking a bit wonky—think odd proportions or peculiar motion.

Getting Started with Sora AI

1. Accessing Sora

- Head over to Sora’s official website and sign in with your OpenAI login.

- If you’re in a region where Sora’s not yet available, a VPN might come in handy.

- Choose your plan: free or paid subscription. Premium users enjoy higher resolutions and longer clips.

2. Familiarising Yourself with the Interface

- Prompting Window: The space where you type your imaginative descriptions.

- Storyboard: A timeline-like tool for building multi-scene videos.

- Blend Editor: Lets you merge and transition between multiple clips.

- Remix Tool: Tweak or reinterpret older videos with fresh prompts.

3. Setting Up Video Parameters

- Aspect Ratio: 16:9 for widescreen, 1:1 for social media squares—take your pick!

- Resolution: Going for 1080p uses more credits but looks crisp.

- Video Length: Some plans allow up to 60 seconds.

Crafting Effective Prompts

A brilliant video is only as good as the prompt you feed Sora. Here’s what works:

- Use Clear and Concise Language

- Avoid baffling jargon.

- Example: “A futuristic cityscape at night with glowing neon signs, flying cars, and a robotic figure on a rooftop.”

- Incorporate Visual Styles

- You can say “watercolour,” “stop motion,” “film noir”—Sora will adapt.

- Example: “A black-and-white film noir scene of a detective under a flickering streetlight in the pouring rain.”

- Add Camera Techniques

- Want slow motion or a panoramic sweep? Just mention it.

- Example: “A slow-motion close-up of a flower blooming in a sunny meadow.”

- Set the Mood

- Describe lighting, weather, and emotional vibes to guide the model.

- Example: “A cosy living room at dusk, with warm lighting and light rain tapping on the window.”
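If you write a lot of prompts, the four ingredients above (subject, visual style, camera technique, mood) can be kept as separate pieces and joined at the end. Here is a minimal sketch of that idea. The `build_prompt` helper is hypothetical and only assembles text; it does not call Sora.

```python
def build_prompt(subject: str, style: str = "", camera: str = "", mood: str = "") -> str:
    """Join the non-empty ingredients into one comma-separated prompt."""
    parts = [part.strip() for part in (subject, style, camera, mood) if part.strip()]
    prompt = ", ".join(parts)
    # Capitalise the first letter and close with a full stop, if there is any text.
    return prompt[0].upper() + prompt[1:] + "." if prompt else ""


print(
    build_prompt(
        subject="a detective under a flickering streetlight",
        style="black-and-white film noir",
        mood="pouring rain",
    )
)
```

Keeping the pieces separate makes it easy to swap one ingredient, say the style, and regenerate while everything else stays the same.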

Advanced Features of Sora AI

Sora AI comes packed with a few extra goodies:

- Storyboard Tool

- Perfect for plotting a mini-film. Arrange scenes before generating.

- Blend Editor

- Seamlessly merge multiple video segments.

- Remix Existing Videos

- Revisit or alter older clips with new prompts or styles.

- Looping Content

- Create endless loops for social media or eye-catching GIFs.

- Image-to-Video Conversion

- Turn static images into snazzy animated clips.

- Video Extension

- Add extra frames to lengthen an existing clip.

- Text-, Image-, and Video-to-Video Inputs (Sora Turbo)

- More ways to feed Sora your creative ideas.

- Remix, Re-Cut, and Blend Tools

- Remix: Swap or update elements in an already-generated clip.

- Re-Cut: Fine-tune specific parts of the video.

- Blend: Melt different objects or scenes together for unique transitions.

Limitations of Sora AI

Like any AI tool, Sora isn’t perfect. Here are the main caveats:

- Physical Accuracy

- Expect the occasional floating chair or bizarre object movement.

- Continuity and Object Permanence

- Longer sequences can sometimes have items popping in and out randomly.

- Video Duration Caps

- Even if you pay for Pro, you might be limited to under 60 seconds.

- Resolution Constraints

- 1080p is your limit for now.

- Performance and Queue Times

- At peak hours, you may find yourself twiddling your thumbs while Sora processes.

- Ethical & Moderation Limits

- You’ll be stopped if you try to generate something too controversial or featuring humans.

- Lack of Fine-Grained Control

- Beyond your text prompt, micromanaging details is tricky.

Key Differences: Original Sora vs. Sora Turbo

There’s the original Sora and the shiny upgraded version, Sora Turbo. Here’s a quick rundown:

- Speed and Efficiency

- Sora Turbo is a proper sprinter, generating multiple clips at once.

- Video Quality and Duration

- Original Sora could handle up to 1 minute, whereas Turbo caps each clip at about 20 seconds (though you can merge them later).

- New Features and Customisation

- Tools like Remix, Re-Cut, Loop, and a fancier Storyboard.

- Input Methods

- Turbo accepts text, images, and even video-to-video prompts.

- Accessibility

- Both are available if you’re on ChatGPT Plus or Pro, but usage limits differ.
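Because Turbo clips are capped at about 20 seconds, a longer video means generating several clips and merging them. The arithmetic is simple, and this rough sketch treats the 20-second figure above as an approximate cap. The function name is made up for illustration.

```python
import math

TURBO_CLIP_SECONDS = 20  # approximate per-clip cap quoted above


def clips_needed(target_seconds: float, clip_seconds: float = TURBO_CLIP_SECONDS) -> int:
    """Return how many clips to generate and merge to reach the target length."""
    if target_seconds <= 0:
        return 0
    return math.ceil(target_seconds / clip_seconds)


print(clips_needed(45))  # 45 seconds needs 3 clips of up to 20 seconds each
```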

Incorporating Personal Assets

Want to insert your own pictures or short clips? No problem:

- Media Upload

- Upload images or mini videos by clicking the “+” or “Upload” button.

- Customisation

- Blend your media with AI-generated visuals, or add transitions and visual effects.

- Privacy Settings

- If you don’t fancy sharing your personal content, just disable “Publish to Explore”.

General Guidelines for All Prompts

- Brevity: Keep it under 120 words—short and sweet!

- Specificity: Focus on one or two main ideas.

- Imagery: Paint a clear mental picture for the AI.

- Avoid Sensitive Content: Don’t poke the moderation bear.

- Build Complexity Slowly: If you want something intricate, iterate step by step.
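The brevity guideline is the easiest one to check automatically. As a small sketch, this hypothetical `check_prompt` helper counts words and warns when a prompt reaches the 120-word mark mentioned above. It only checks length; specificity and imagery still need a human eye.

```python
from typing import List

MAX_WORDS = 120  # guideline from the list above: keep prompts under this


def check_prompt(prompt: str, max_words: int = MAX_WORDS) -> List[str]:
    """Return a list of warnings; an empty list means the length looks fine."""
    warnings = []
    word_count = len(prompt.split())
    if word_count == 0:
        warnings.append("Prompt is empty.")
    elif word_count >= max_words:
        warnings.append(f"Prompt has {word_count} words; aim for under {max_words}.")
    return warnings


print(check_prompt("A cat playing the piano on a moonlit rooftop"))
```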

Category-Specific Tips

- Sequence Prompts

- Works: Clear transitions or progressions (e.g., “A knight travelling across a desert, discovering a hidden oasis”).

- Doesn’t Work: Muddled, overly abstract sequences.

- Example: “An epic duel between a Balrog and a Paladin Platypus in a desert world.”

- Human-Focused Prompts

- Works: Humorous or relatable actions (e.g., “A mime crossing a marathon finish line”).

- Doesn’t Work: Anything too philosophical or jam-packed with details.

- Example: “A man strolling through a snowstorm, wearing a helmet made of raw meat.”

- Animal-Focused Prompts

- Works: Fun, vibrant scenarios (e.g., “Cats dressed as wizards facing camera and casting spells”).

- Doesn’t Work: Animals performing too many abstract or contradictory actions at once.

- Example: “A sabre-toothed tiger padding along a glowing riverbank in a prehistoric forest.”

- Figure-Focused Prompts

- Works: Distinctive, stylised scenes (e.g., “A weathered robot scavenging in an abandoned city”).

- Doesn’t Work: Mashing too many cultural icons into a single prompt.

- Example: “A superhero cameo reminiscent of anime, delivering a massive punch that shakes the earth.”

- Location-Focused Prompts

- Works: Captivating environmental descriptions (e.g., “Drone footage of ancient tribes on a mountain at sunset”).

- Doesn’t Work: Overdoing the details so that the setting becomes cluttered.

- Example: “A neon-drenched cityscape welcoming the year 2078, fireworks included.”

Prompt Refinement Checklist

- Clarity: Is your description straightforward and easy to follow?

- Engagement: Does your prompt conjure a strong mental image or storyline?

- Focus: Avoid cramming 10 different big ideas into one prompt.

- Tone: Pick a vibe—playful, cinematic, dramatic—and stick to it.

- Content Sensitivity: Steer clear of copyrighted figures or explicit subject matter.

Real-World Applications of Sora AI

- Social Media: Short, snappy clips for Instagram, TikTok, or YouTube Shorts.

- Storytelling: Quick teasers or imaginative sketches for your next big idea.

- Education: Bring tutorials or lessons to life with short explainers.

- Marketing: Spice up ad campaigns with unique, AI-generated flair.

Conclusion & Next Steps

All in all, Sora AI is a splendid tool for spinning text into visual gold—especially if you love creative, short-form storytelling. It’s not flawless, mind you: longer or more complex prompts can trip it up. But as a starting point for playful, cinematic, or downright quirky videos, it’s in a league of its own.

For a more professional setting—like detailed brand adverts or longer educational videos—Sora might need a bit more polish to handle intricacy. Still, it’s well worth a try if you’re eager to push the boundaries of AI-generated content.

Happy creating, folks!

Disclaimer: This guide blends community wisdom and publicly available resources. Use at your own discretion, and have fun exploring the wild world of Sora AI!

You may also like:

- What Is Sora AI?

- Kling: A Chinese AI Video Model Outshining Sora

- You can also access Sora AI by tapping here (paid for service, not available in all countries yet)
