Business

Perplexity’s CEO Declares War on Google And Bets Big on an AI Browser Revolution

Perplexity CEO Aravind Srinivas is battling Google, partnering with Motorola, and launching a bold new AI browser. Discover why the fight for the future of browsing is just getting started.

Published 6 days ago by AIinAsia

TL;DR (What You Need to Know in 30 Seconds)

- Perplexity’s CEO Aravind Srinivas is shifting from fighting Google’s search dominance to building an AI-first browser called Comet — betting browsers are the future of AI agents.

- Motorola will pre-install Perplexity on its new Razr phones, thanks partly to antitrust pressure weakening Google’s grip.

- Perplexity’s strategy? Build a browser that acts like an operating system, executing actions for users directly — while gathering the context needed to out-personalise ChatGPT.

The Browser Wars Are Back — But This Time, AI Is Leading the Charge

When Aravind Srinivas last spoke publicly about Perplexity, it was a David vs Goliath story: a small AI startup taking on Google’s search empire. Fast forward just one year, and the battle lines have moved. Now Srinivas is gearing up for an even bigger fight — for your browser.

Perplexity isn’t just an AI assistant anymore. It’s about to launch its own AI-powered browser, called Comet, next month. And according to Srinivas, it could redefine how digital assistants work forever.

“A browser is essentially a containerised operating system. It lets you log into services, scrape information, and take actions, all on the client side. That’s how we move from answering questions to doing things for users,” Srinivas says.

This vision pits Perplexity not just against Google Search, but against Chrome itself — and the entire way we use the internet.

Fighting Google’s Grip on Phones — and Winning Small Battles

Google’s stranglehold over Android isn’t just about search. It’s about default apps, browser dominance, and OEM revenue sharing. Srinivas openly admits that if it weren’t for the Department of Justice (DOJ) antitrust trial against Google, deals like Perplexity’s latest partnership with Motorola might never have happened.

Thanks to that pressure, Motorola will now pre-install Perplexity on its new Razr devices — offering an alternative to Google’s AI (Gemini) for millions of users.

Even so, it’s not easy. Changing your default assistant on Android still takes “seven or eight clicks,” Srinivas says, and Google reportedly pressured Motorola to ensure Gemini stays the default for system-level functions.

“We have to be clever and fight. Distribution is everything.”

Why Build a Browser?

It’s simple: control.

- On Android and iOS, assistants are restricted.

- Apps like Uber, Spotify, and Instagram guard their data fiercely.

- AI agents can’t fully access app information to act intelligently.

But in a browser? Logged-in sessions, client-side scraping, reasoning over live pages — all become possible.

“Answering questions will become a commodity,” Srinivas predicts. “The real value will be actions — booking rides, finding songs, ordering food — across services, without users lifting a finger.”

Perplexity’s Comet browser will be the launchpad for this vision, eventually expanding from mobile to Mac and Windows devices.

And yes, they plan to fight Microsoft’s dominance on laptops too, where Copilot is increasingly bundled natively.

Building the Infrastructure for AI Memory

Personalisation isn’t just remembering what users searched for. Srinivas argues it’s about knowing your real-world behaviour — your online shopping, your social media browsing, your ridesharing history.

ChatGPT, he says, can’t see most of that.

Perplexity’s browser could.

By operating at the browser layer, Perplexity aims to gather the deepest context across apps and web activity — building the kind of memory and personalisation that other AI assistants can only dream of.

It’s an ambitious bet — but if it works, it could make Perplexity the most indispensable AI in your digital life.

New Frontiers (and Old Enemies)

Beyond Motorola, Perplexity is eyeing deals with telcos, laptop manufacturers, and OEMs globally. They’re cutting deals with publishers to avoid scraping lawsuits. They’re investing heavily in infrastructure, data distillation, and frontier AI models.

They even flirted with a bid for TikTok, though Srinivas admits ByteDance’s reluctance to part with its algorithm made it a long shot.

What’s clear is that scale, distribution, and control are the new prizes. And Perplexity is playing a long, tactical game to win them.

What do YOU think?

If browsers become the new battleground for AI, will Google lose not just search — but its grip on the entire internet? Let us know in the comments below.


Business

Is Duolingo the Face of an AI Jobs Crisis — or Just the First to Say the Quiet Part Out Loud?

Duolingo’s AI-first shift may signal the start of an AI jobs crisis — where companies quietly cut creative and entry-level roles in favour of automation.

Published 17 hours ago on May 6, 2025, by AIinAsia

TL;DR — What You Need to Know

- Duolingo is cutting contractors and ramping up AI use, shifting towards an “AI-first” strategy.

- Journalists link this to a broader, creeping jobs crisis in creative and entry-level industries.

- It’s not robots replacing workers — it’s leadership decisions driven by cost-cutting and control.

Are We on the Brink of an AI Jobs Crisis?

AI isn’t stealing jobs — companies are handing them over. Duolingo’s latest move might be the canary in the creative workforce coal mine.

Here’s the thing: we’ve all been bracing for some kind of AI-led workforce disruption — but few expected it to quietly begin with language learning and grammar correction.

This week, Duolingo officially declared itself an “AI-first” company, announcing plans to replace contractors with automation. But according to journalist Brian Merchant, the switch has been happening behind the scenes for a while now. First, it was the translators. Then the writers. Now, more roles are quietly dissolving into lines of code.

What’s most unsettling isn’t just the layoffs — it’s what this move represents. Merchant, writing in his newsletter Blood in the Machine, argues that we’re not watching some dramatic sci-fi robot uprising. We’re watching spreadsheet-era decision-making, dressed up in futuristic language. It’s not AI taking jobs. It’s leaders choosing not to hire people in the first place.

In fact, The Atlantic recently reported a spike in unemployment among recent college grads. Entry-level white-collar roles, once stepping stones into careers, are either vanishing or being passed over in favour of AI tools. And let’s be honest: if you’re an exec balancing budgets and juggling board pressure, skipping a salary for a subscription might sound pretty tempting.

But there’s a bigger story here. The AI jobs crisis isn’t a single event. It’s a slow burn. A thousand small shifts — fewer freelance briefs, fewer junior hires, fewer hands on deck in creative industries — that are starting to add up.

As Merchant puts it:

“The AI jobs crisis is not any sort of SkyNet-esque robot jobs apocalypse. It’s DOGE firing tens of thousands of federal employees while waving the banner of ‘an AI-first strategy.’”

That stings. But it also feels… real.

So now we have to ask: if companies like Duolingo are laying the groundwork for an AI-powered future, who exactly is being left behind?

Are we ready to admit that the AI jobs crisis isn’t coming — it’s already here?


Business

OpenAI Faces Legal Heat Over Profit Plans — Are We Watching a Moral Meltdown?

Former OpenAI employees and AI experts are urging US state attorneys general to stop OpenAI’s transition into a for-profit company, warning it could erode its commitment to humanity’s safety.

Published 1 day ago on May 5, 2025, by AIinAsia

TL;DR — What You Need to Know

- Former OpenAI insiders say the company is straying from its nonprofit mission — and that could be dangerous.

- A legal letter urges US states to stop OpenAI’s transformation into a fully commercial venture.

- Critics argue the new structure would weaken its duty to humanity in favour of shareholder profits.

OpenAI For-Profit Transition: Could It Risk Humanity?

Former employees and academics are urging US state attorneys general to block OpenAI’s shift to a for-profit model, warning it could endanger humanity’s future.

The Moral Compass Is Spinning

OpenAI was founded with a mission so lofty it sounded almost utopian: ensure artificial intelligence benefits all of humanity. But fast forward to 2025, and that noble vision is under serious legal and ethical fire — from the very people who once helped build the company.

This week, a group of former OpenAI staffers, legal scholars, and even Nobel Prize winners sent an official plea to the attorneys general of California and Delaware: don’t let OpenAI go full for-profit. They claim such a move could put both the company’s original mission and humanity’s future at risk.

Among the voices is Nisan Stiennon, a former employee who isn’t mincing words. He warns that OpenAI’s pursuit of Artificial General Intelligence (AGI) — a theoretical AI smarter than humans — could have catastrophic outcomes. “OpenAI may one day build technology that could get us all killed,” he says. Cheery.

At the heart of the complaint is the fear that OpenAI’s transition into a public benefit corporation (PBC) would legally dilute its humanitarian responsibilities. While PBCs can consider the public good, they’re not required to prioritise it over shareholder profits. Todor Markov, another ex-OpenAI team member now at Anthropic, sums it up: “You have no recourse if they just decide to stop caring.”

This all adds up to a dramatic ethical tug-of-war between commercial growth and moral responsibility. And let’s not forget that CEO Sam Altman already weathered a scandal in 2023 involving secret updates, boardroom drama, and a five-day firing that ended with his reinstatement.

It’s juicy, yes. But also deeply important.

Because whether or not AGI is even technically possible right now, what we’re really watching is a battle over how much trust we’re willing to place in companies building our digital future.

So here’s the question that matters:

If humanity’s safety depends on who’s in control of AGI — do we really want it run like just another startup?


Business

Shadow AI at Work: A Wake-Up Call for Business Leaders

AI tools like ChatGPT are quietly reshaping how people work – but nearly half of users admit to using them in risky or inappropriate ways.

Published 3 days ago on May 4, 2025, by AIinAsia

TL;DR — What You Need To Know:

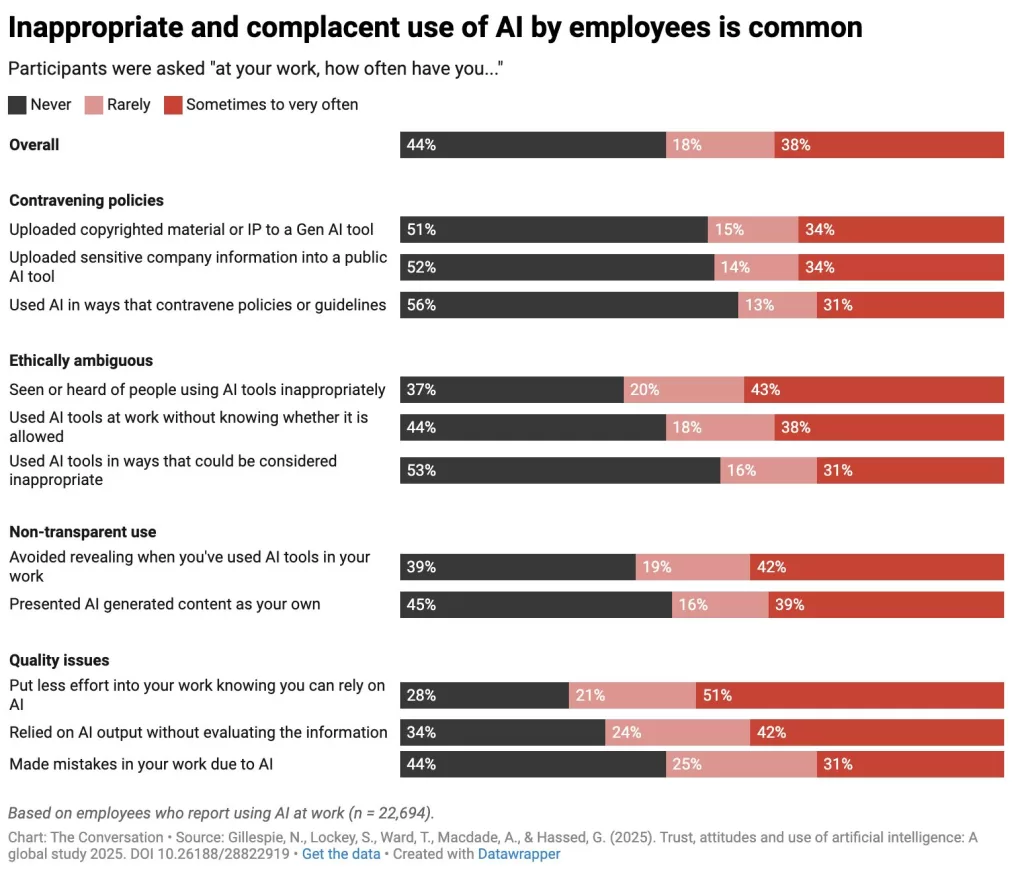

- 58% of workers use AI at work – but nearly half (47%) admit to using it in risky or non-compliant ways, like uploading sensitive data or hiding its use.

- ‘Shadow AI’ is rampant, with 61% not disclosing AI use, and 66% relying on its output without verifying – leading to mistakes, compliance risks, and reputational damage.

- Lack of AI literacy and governance is the root cause – only 34% of companies have AI policies, and fewer than half of employees have received any AI training.

AI at work is booming – but too often it’s happening in the shadows, unsafely and unchecked.

The Silent Surge of Shadow AI

Have you ever asked ChatGPT to polish a pitch, summarise a tedious report, or structure a tricky email to your boss? You’re far from alone.

A new global study surveying over 32,000 employees across 47 countries has confirmed what many of us already suspect: AI has gone mainstream in the workplace. 58% of workers say they’re using AI at work, with a third doing so weekly or even daily.

On the surface, that’s a good thing. AI use is being credited with boosts in efficiency (67%), information access (61%), innovation (59%), and work quality (58%).

But scratch a little deeper, and things get messy.

Almost Half Admit to Using AI Inappropriately

The report reveals a troubling undercurrent: 47% of AI users admit to inappropriate use, and 63% have seen colleagues doing the same.

What does “inappropriate” mean in this context?

- Uploading sensitive company or customer data into public tools like ChatGPT (48% admitted to this)

- Using AI against company policies (44%)

- Not checking AI’s output for accuracy (66%)

- Passing off AI-generated content as their own (55%)

This isn’t just a compliance issue — it’s a looming business risk. 56% of respondents say AI has caused them to make mistakes at work, and 35% believe it’s increased privacy or legal risk.

The Rise of Shadow AI

Perhaps most concerning is how much of this AI usage is happening under the radar.

Welcome to the world of Shadow AI — tools used without approval, oversight, or sometimes even awareness from employers. The stats are telling:

- 61% of workers have used AI without telling anyone

- 66% don’t know if it’s allowed

- 55% claim AI output as their own work

When AI becomes invisible, so do its risks. And when that’s combined with a lack of guardrails, the consequences can be immediate and damaging to a company’s reputation.

Why Are Employees Going Rogue?

The blame doesn’t lie solely with staff. The report shows only 34% of companies have AI usage policies, and just 6% ban AI outright. Even more damning, fewer than half (47%) of employees have had any AI-related training.

Meanwhile, there’s increasing pressure to use AI tools just to stay competitive — 50% of workers fear being left behind if they don’t.

In short: employees are navigating a fast-moving, high-stakes environment without a map or a guide.

What Can Businesses Do Now?

The takeaway isn’t to ban AI. It’s to lead its responsible use.

Here’s what forward-looking organisations should prioritise:

1. Develop clear AI policies

Outline what’s allowed, what’s not, and where the red lines are — especially around privacy, client data, and copyright.

2. Invest in AI literacy

Training matters. Employees with even basic AI training are more likely to verify outputs, use AI responsibly, and see bigger performance gains.

3. Foster transparency, not fear

Creating a culture of psychological safety around AI use encourages disclosure and learning — not secrecy and shortcuts.

4. Implement oversight and accountability

You can’t manage what you can’t see. Systems to track and audit AI usage are crucial — not to control, but to support smarter, safer adoption.

A Tipping Point Moment

The AI boom is already here. But so are its risks.

The challenge now isn’t getting people to use AI — they’re already doing that. The real test is whether businesses can create the right culture, governance, and education before the next high-profile blunder becomes their own.

Final Thought

If your employees are already using AI tools – and 58% of them are – do you know how, when, and why? Or is your company just one upload away from a preventable crisis?

