AI-generated content detection tools often fail in the Global South due to biases in training data.
Detection tools prioritise Western languages and faces, leading to inaccuracies in other regions.
The lack of local data and infrastructure hampers the development of effective detection tools.

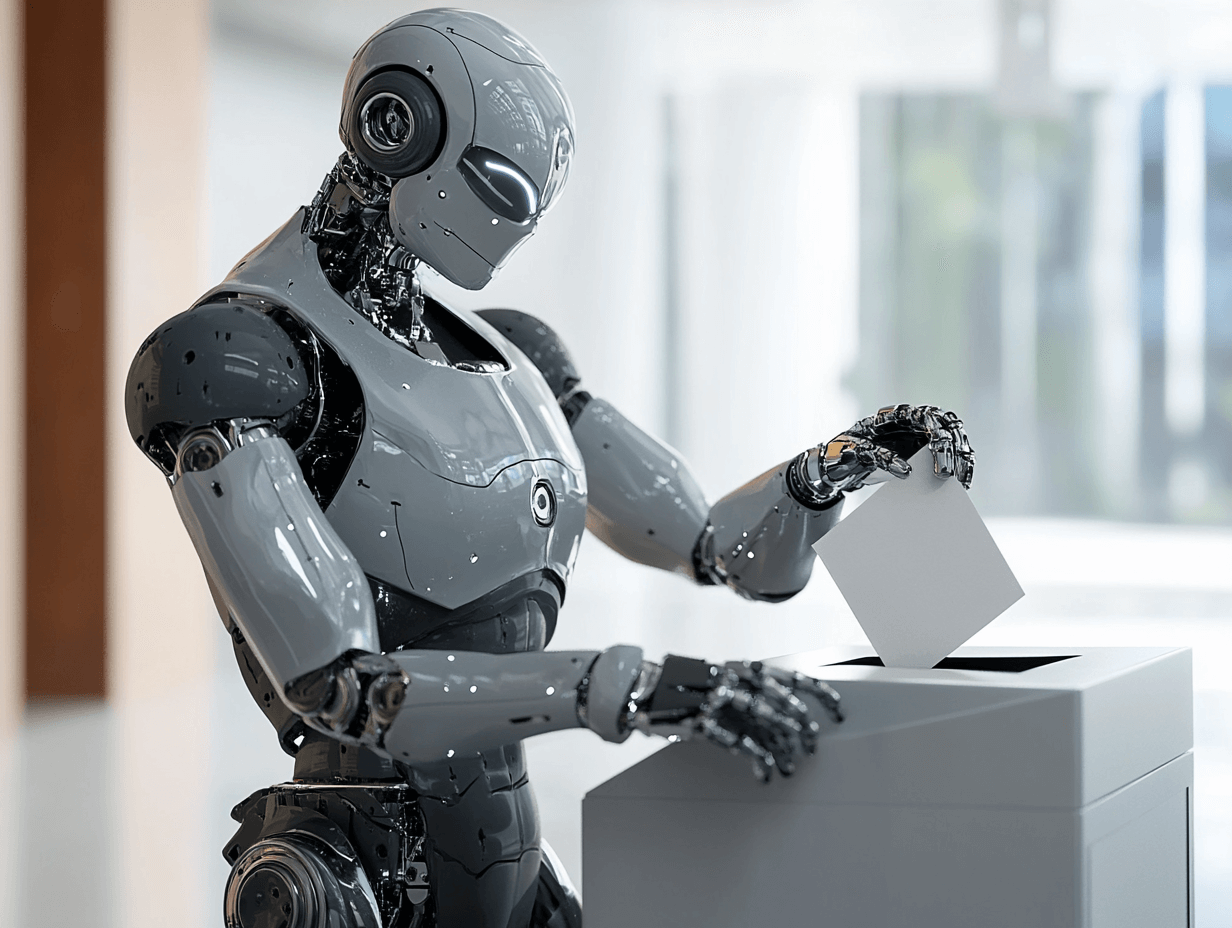

Artificial Intelligence (AI) is transforming the world, but it's not always for the better. As generative AI becomes more common, so does the challenge of detecting AI-generated content, especially in the Global South. This article explores the issues and biases in AI-fakes detection and how they impact voters in these regions.

The Rise of AI-Generated Content

AI can create convincing images, videos, and text. This technology is often used in politics. For example, former US President Donald Trump shared AI-generated photos of Taylor Swift fans supporting him. Tools like True Media's detection tool can spot these fakes, but it's not always that simple.

The Detection Gap in the Global South

Detecting AI-generated content is harder in the Global South. Most detection tools are trained on Western data, so they struggle with content from other regions. This leads to many false positives and negatives.
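
To make the two error types concrete, here is a minimal Python sketch that computes false positive and false negative rates separately for each region. The records, the region labels, and the function name are all made up for illustration; they are not measurements from any real detector.

```python
from collections import defaultdict

# Hypothetical labelled results: (region, is_ai_generated, flagged_by_detector).
# Every value here is invented for illustration only.
RESULTS = [
    ("west", True, True),
    ("west", True, True),
    ("west", False, False),
    ("west", False, False),
    ("south", True, False),   # a fake the detector missed (false negative)
    ("south", True, True),
    ("south", False, True),   # real media wrongly flagged (false positive)
    ("south", False, False),
]


def error_rates_by_region(results):
    """Return false positive and false negative rates for each region."""
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "real": 0, "fake": 0})
    for region, is_fake, flagged in results:
        c = counts[region]
        if is_fake:
            c["fake"] += 1
            if not flagged:
                c["fn"] += 1
        else:
            c["real"] += 1
            if flagged:
                c["fp"] += 1
    return {
        region: {
            # Share of real items that were wrongly flagged as AI-generated.
            "false_positive_rate": c["fp"] / c["real"] if c["real"] else 0.0,
            # Share of AI-generated items that slipped through unflagged.
            "false_negative_rate": c["fn"] / c["fake"] if c["fake"] else 0.0,
        }
        for region, c in counts.items()
    }


rates = error_rates_by_region(RESULTS)
for region, r in rates.items():
    print(region, r)
```

Reporting the rates per region, rather than as one global average, is what would expose a gap like the one described here: a tool can look accurate overall while performing much worse on content from one region.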

Biases in Training Data

AI models are usually trained on data from Western markets. This means they recognise Western faces and English language better. "They prioritized English language—US-accented English—or faces predominant in the Western world," says Sam Gregory from the nonprofit Witness.

Lack of Local Data

In many parts of the Global South, data is not digitised. This makes it hard to train AI models on local content. "Most of our data, actually, from [Africa] is in hard copy," says Richard Ngamita from Thraets. Even as AI adoption grows across the Global South, the foundational data infrastructure often lags.

Low-Quality Media

Many people in the Global South use cheap smartphones that produce low-quality photos and videos. This confuses detection models. "A lot of the initial deepfake detection tools were trained on high quality media," says Gregory.

The Impact of Faulty Detection

False positives and negatives can have serious consequences. They can lead to wrong policies and crackdowns on imaginary problems. "There's a huge risk in terms of inflating those kinds of numbers," says Sabhanaz Rashid Diya from the Tech Global Institute. This highlights the importance of understanding the invisible impact of AI.
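
The inflation risk Diya describes can be worked through with simple arithmetic. The sketch below is illustrative only: the item count, the share of genuine fakes, and both error rates are hypothetical numbers chosen for the example, not figures from any real tool or study.

```python
def expected_flags(total_items, true_fake_share, false_positive_rate, false_negative_rate):
    """Estimate how many items a detector flags, split into correct hits and false alarms."""
    fakes = total_items * true_fake_share
    reals = total_items - fakes
    # Fakes that the detector correctly catches.
    true_hits = fakes * (1 - false_negative_rate)
    # Authentic items that the detector wrongly flags.
    false_alarms = reals * false_positive_rate
    return {
        "actual_fakes": fakes,
        "true_hits": true_hits,
        "false_alarms": false_alarms,
        "total_flagged": true_hits + false_alarms,
    }


# Hypothetical scenario: 10,000 items, 1% truly AI-generated,
# a detector with a 10% false positive rate and a 20% false negative rate.
summary = expected_flags(10_000, 0.01, 0.10, 0.20)
for name, value in summary.items():
    print(f"{name}: {value:.0f}")
```

With these assumed inputs, about 1,070 items are flagged even though only 100 are actually AI-generated, and false alarms outnumber correctly caught fakes by more than twelve to one. A raw count of flags would therefore badly overstate how much AI-generated content is in circulation.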

The Challenge of Cheapfakes

Cheapfakes are media altered with simple edits rather than generative AI. They are common in the Global South, yet faulty detection tools and untrained researchers can mistake them for AI-generated content.

The Struggle to Build Local Solutions

Building local detection tools is hard. It requires access to energy and data centres, which are not always available in the Global South. "If you talk about AI and local solutions here, it's almost impossible without the compute side of things for us to even run any of our models that we are thinking about coming up with," says Ngamita.

Prompt: Imagine You Are a Journalist in the Global South

Rationale: This prompt encourages empathy and understanding of the challenges journalists face in the Global South.

Prompt: Imagine you are a journalist in the Global South. You receive a tip about a political candidate using AI-generated content to sway voters. How would you investigate this story with the current detection tools? What challenges would you face?

The Need for Better Tools

The current detection tools are not good enough. They need to be trained on more diverse data and work with low-quality media. This will help protect voters in the Global South from AI-generated disinformation. According to a report by the United Nations, addressing these biases is crucial for fostering trust in AI globally (UN Report on AI and Human Rights).

The Future of AI-Fakes Detection

The future of AI-fakes detection depends on better tools and more local data. It also depends on global cooperation to share resources and knowledge. This can help close the detection gap and protect voters worldwide.

Comment and Share:

What do you think is the biggest challenge in detecting AI-generated content in the Global South? How can we overcome these challenges? Share your thoughts and experiences below.

Latest Comments (4)

This is a crucial point, really. It makes me wonder about the specific types of AI-generated content that are proving most problematic in our region. Are we seeing more deepfakes, or is it the subtle manipulation of text and audio that's harder to flag? It feels like the nuances of our local languages and cultural references could be exploited in ways general detection models might miss. It’s not just about recognising a fake, but understanding *how* it's designed to influence a particular audience, especially when there's already so much misinformation online. This issue definitely needs more tailored solutions, not just a one-size-fits-all approach.

This piece really nails a critical issue, lah. It's a bit worrying, though, how much emphasis is often placed on tech solutions for what might be fundamentally human problems. We talk about "AI-fakes detection" failing, but isn't part of the problem also about digital literacy and media savviness amongst the populace? If folks don't understand how these fakes are made or what to look for, even the best detection software has its limits, no? Especially when the fakes are designed to appeal to local sentiments. Hard to filter out intent with just an algorithm sometimes.

This article really hits home. I remember chatting with my cousin in Malaysia during their last election, and the amount of questionable stuff on WhatsApp was wild. We're pretty tech savvy here in Singapore, but even I struggle to tell what's real sometimes. Imagine what it's like with fewer resources and more sophisticated fakes. Proper detection is crucial, no two ways about it.

This article really hit home! I've been noticing how quick these deepfakes pop up during our election cycles here in India, and the fakeness detection tools often seem to struggle. It's a proper concern for our democracy, especially with the sheer volume of content circulating. Definitely coming back to read more on this.
