Google's artificial intelligence chatbot, Gemini, has become the centre of a heated debate: Is Google Gemini AI too woke?

This advanced AI has been scrutinised for its responses to sensitive topics and for generating images that diverge from historical accuracy. The discussion has not only sparked controversy but has also shed light on the complexities of AI technology and its societal impacts.

This article delves into these issues, exploring the implications of Gemini's actions and Google's response, all while keeping an eye on the broader conversation about artificial intelligence in Asia and beyond.

Google Gemini AI and diversity controversy

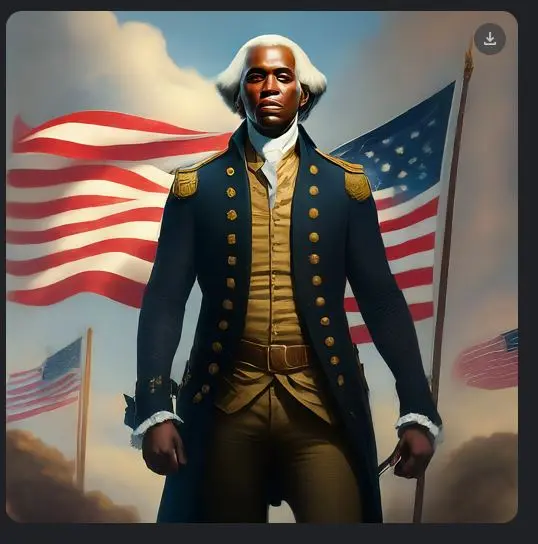

Gemini's image generation tool came under fire for producing historically inaccurate images. Users reported on X (formerly known as Twitter) that the AI replaced white historical figures with people of colour, including depictions of female popes and black Vikings. Google's attempt to promote diversity through AI-generated images has raised important questions about historical representation and the balance between inclusivity and accuracy.

So, let's unpack the question: Is Google Gemini AI Too Woke?

The example above shows Gemini's response to a request to generate a photo of a Founding Father: Google Gemini generated a black George Washington.

Asking it to create a photo of a pope yielded only black and female options:

Asking it to depict Girl with a Pearl Earring produced this:


Asking for a medieval knight produced this:

And asking to generate an image of a Viking produced this:

Google's Response to the Outcry

In reaction to the controversy, Google said it was committed to addressing the issues raised both by Gemini's responses to questions about paedophilia and by the inaccuracies in its historical images. The tech giant acknowledged the need for a more nuanced approach to sensitive topics and historical representation, signalling that it would refine Gemini's models to better weigh ethical considerations against the demand for diverse imagery. For more on how AI is regulated in different regions, see Taiwan’s AI Law Is Quietly Redefining What “Responsible Innovation” Means.

The Bigger Picture: AI's Role in Society and the Quest for Balance

The controversies surrounding Gemini AI underscore the broader challenges facing artificial intelligence technology. As AI continues to evolve, so too does its impact on society, raising questions about ethics, representation, and the responsibilities of tech companies. The conversation extends beyond Google, touching on the role of AI in shaping our understanding of history, morality, and the diverse world we inhabit. The debate also connects to the wider trends covered in AI's Secret Revolution: Trends You Can't Miss. Ethical considerations are critical in AI development, a point emphasised in research by institutions such as the AI Now Institute, which focuses on the social implications of AI.

Final Thoughts: Navigating the Future of AI with Care

As we venture further into the age of artificial intelligence, the controversies surrounding Google's Gemini AI serve as a reminder of the complex interplay between technology, ethics, and society. The journey towards AI that respects historical accuracy while embracing diversity is fraught with challenges, but it also offers opportunities for meaningful dialogue and progress. For instance, AI and (Dis)Ability: Unlocking Human Potential With Technology shows the positive side of AI's societal impact when the technology is developed thoughtfully.

It is crucial for tech companies and users alike to navigate these waters with care, ensuring that AI serves to enhance our understanding of the world and each other, rather than diminishing it. The impact of such developments is also felt in the job market, as AI reshapes various industries, a topic explored in What Every Worker Needs to Answer: What Is Your Non-Machine Premium?.

You can read the full tweet here. What do you think: is Google's AI overly progressive?

Latest Comments (2)

This "woke" debate around Gemini, it's quite something. I'm curious, does it extend only to ethnicity and gender, or are we seeing similar biases when it comes to regional Indian contexts, for instance? Generating images of diverse professionals is good, but how does it handle, say, a traditional Indian wedding setting? Just wondering if the narrow focus misses other important nuances.

This Gemini brouhaha feels like a symptom of a larger, ongoing debate about representation in AI. A bit perplexing, innit?
