Life

The View From Koo: Prepare for the AI Age with Your Family

Prepare for the AI age by mastering effective and efficient learning, focusing on learning style, content, curation, and critical thinking.

Published 11 months ago

TL;DR:

- Prepare for the AI age by improving your learning skills.

- Develop effective, efficient learning through learning style, content, curation, and critical thinking.

- Choose the right content and medium, and evaluate creators, for successful learning.

- Practice curation and critical thinking to filter valuable information.

- Master learning skills to adapt and thrive in the AI age.

First off, a heartfelt thank you

Yes, thank you to all you readers who made my last article, “Does Your Business Really Need an AI Strategist?”, a top trending article on this website, and thank you to AIinASIA for publishing these articles.

So what are we focusing on today?

Off the back of this, a lot of you reached out with different questions and concerns… and so this new article has been written in direct response. Grab yourself a coffee, get comfortable and welcome to the next article in this series of The View from Koo!

How to prepare yourself for the age of AI:

First, some context: a lot of my friends and participants in my courses are young parents. One of the top things on their minds is what they should have their children learn. The parents’ concern, and really the top concern on everyone’s mind, is that AI will eventually take over our jobs and cause seismic shifts in our industries… leading to a need to find another job.

Remember: you’re not going to be able to escape the impact of AI. So how best to prepare for it?

Current Trends Are Intriguing, But It’s Not All Doom and Gloom

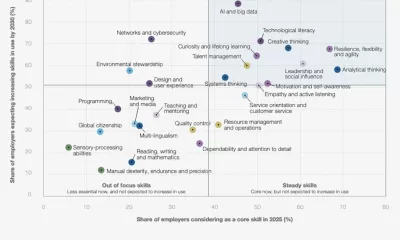

The current trend is that while Artificial Intelligence does not take over whole jobs right now, it will take over certain tasks. As Artificial Intelligence takes over more and more tasks, jobs, which are baskets of tasks, can eventually be replaced.

It is either that, or Artificial Intelligence will change the nature of jobs, since it is a tool. Think of a digger at a construction site upgrading from a shovel to an excavator.

Artificial Intelligence will either change the nature of your job by increasing your productivity, moving you to higher value-added tasks, or make your job obsolete (think long-haul drivers and supply chain workers).

The Single Key Skill to Unlock Your Future!

If I were to go back to ‘First Principles’, I would say the single key skill to pick up, and the one that makes you more resistant to the waves of change brought about by Artificial Intelligence, is the “Learning Skill”: knowing how to learn effectively and efficiently.

Innovate and pivot

As waves of change keep coming, we can either be proactive and pick up new skills that we believe will be in high demand later, or be forced to learn new skills when change hits hard, by which point we may be facing retrenchment or our businesses may be going bankrupt.

The next 3-5 years will bring uncertainty. We will all be in a constant state of flux, and we will need to keep learning new skills, such as operating new software and technology or adopting the latest best practices.

This translates into the need to keep learning. The spoils will go to those who are proactive and able to learn effectively (able to apply what they learn) and efficiently (able to understand it in a short amount of time).

So Let’s Talk About Learning

As a fellow lifelong learner, trainer and mentor, I see THREE dimensions you need to look at to improve your learning skill: learning style, content, and critical thinking & curation.

Learning Style

Learning gets better with practice and self-awareness. The more you learn, the more practice you get, and the better you understand your learning style. Knowing your learning style is important because it makes your learning more productive, but discovering it requires time and experimentation.

You need to experiment while learning at the same time.

For instance, compare listening to audiobooks with reading physical books, learning from an instructor with self-directed learning, or writing notes with recording and listening to notes.

Content is everything: Medium and People

For content there are two sub-dimensions to look at: Medium and People.

There are many mediums that we can learn from: videos, websites, physical books, e-books, podcasts, classrooms, workshops, short courses, degree programs and so on. You can see them as delivery channels for content. Choose the delivery channels that suit your style of learning.

Content is generated by people: think of the professors and lecturers in your degree or diploma program. To learn better, we need to start questioning the background of the people generating the content. Are they the right people to learn from? As I always say:

If you want to be rich, learn from the rich. If you want to be a professional soccer player, you learn from a professional soccer player.

So check the credentials of the content generators, and do not fall into the trap of learning from influencers in “expert” clothing.

Critical Thinking & Curation To Cut Through the Noise

We are in the information age, where information is abundant. I am sure you have heard of disinformation and misinformation, and you do not want to fall victim to them, as that hurts your credibility as an individual and in your career.

What can we do about this information avalanche? How can we quickly separate the truth from “fake news”?

Whether in self-directed or classroom learning, we want to pick up the skills, knowledge and information that are going to be useful. This is where curation and critical thinking come in:

Curation helps us quickly narrow down the delivery channels we need to pay attention to. Critical thinking helps us quickly decide what we should pick up and learn from them.

You can start practicing curation by always checking who is producing the content. Are the content producers really experts? Are the schools putting out the courses credible in their fields? What are the instructors’ backgrounds? Critical thinking then comes in to quickly ascertain whether the content shared is going to be useful for your circumstances.

The more you practice critical thinking and curation, the better your learning will become!

So Where Does This All Lead Us?

We need to learn how to learn. Learning skills will help us stay ahead of the curve, ensuring that we can not only survive but also thrive in the Age of AI.

However, learning how to learn is not taught in formal educational institutions, so it is up to us to pick it up.

To learn effectively and efficiently, we need to quickly become aware of our learning style; focus on getting good content, in terms of both the medium and the producer, that suits our style; and, last but not least, practice, practice and practice some more, especially our critical thinking and curation skills.

Comment and Share:

Do you have any tips on how to learn better? Share your thoughts in the comments below as we hone our learning skills together, and don’t forget to subscribe for updates on AI and AGI developments.

You may also like:

- 15 Advanced Brainstorming Techniques Powered by ChatGPT

- Mastering AI in Asia: Training ChatGPT to Mimic Your Unique Voice

- Or find out more about Koo’s company, Data Science Rex.

Author

Koo Ping Shung has 20 years of experience in Data Science and AI across various industries. He covers the data value chain from collection to implementation of machine learning models. Koo is an instructor, trainer, and advisor for businesses and startups, and a co-founder of DataScience SG, one of the largest tech communities in the region. He was also involved in setting up the Chartered AI Engineer accreditation process. Koo thinks about the future of AI and how humans can prepare for it. He is the founder of Data Science Rex (DSR).

Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.

How To Teach ChatGPT Your Writing Style

This warm, practical guide explores how professionals can shape ChatGPT’s tone to match their own writing style. From defining your voice to smart prompting and memory settings, it offers a step-by-step approach to turning ChatGPT into a savvy writing partner.

Published June 4, 2025, by AIinAsia

TL;DR — What You Need To Know

- ChatGPT can mimic your writing tone with the right examples and prompts

- Start by defining your personal style, then share it clearly with the AI

- Use smart prompting, not vague requests, to shape tone and rhythm

- Custom instructions and memory settings help ChatGPT “remember” you

- It won’t be perfect — but it can become a valuable creative sidekick.

Start by defining your voice

Before ChatGPT can write like you, you need to know how you write. This may sound obvious, but most professionals haven’t clearly articulated their voice. They just write.

Think about your usual tone. Are you friendly, brisk, poetic, slightly sarcastic? Do you use short, direct sentences or long ones filled with metaphors? Swear words? Emojis? Do you write like you talk?

Collect a few of your own writing samples: a newsletter intro, a social media post, even a Slack message. Read them aloud. What patterns emerge? Look at rhythm, vocabulary and mood. That’s your signature.

Show ChatGPT your writing

Now that you’ve defined your style, show ChatGPT what it looks like. You don’t need to upload a manifesto. Just say something like:

“Here are three examples of my writing. Please analyse my tone, sentence structure and word choice. I’d like you to write like this moving forward.”

Then paste your samples. Follow up with:

“Can you describe my writing style in a few bullet points?”

You’re not just being polite. This step ensures you’re aligned. It also helps ChatGPT to frame your voice accurately before trying to imitate it.

Be sure to offer varied, representative examples. The more you reflect your daily writing habits across different formats (emails, captions, articles), the sharper the mimicry.

Prompt with purpose

Once ChatGPT knows how you write, the next step is prompting. And this is where most people stumble. Saying, “Make it sound like me” isn’t quite enough.

Instead, try:

“Rewrite this in my tone: warm, conversational, and a little cheeky.”

“Avoid sounding corporate. Use contractions, variety in sentence length and clear rhythm.”

Yes, you may need a few back-and-forths. But treat it like any editorial collaboration — the more you guide it, the better the results.

And once a prompt nails your style? Save it. That one sentence could be reused dozens of times across projects.

Use memory and custom instructions

ChatGPT now lets you store tone and preferences in memory. It’s like briefing a new hire once, rather than every single time.

Start with Custom Instructions (in Settings > Personalisation). Here, you can write:

“I use conversational English with dry humour and avoid corporate jargon. Short, varied sentences. Occasionally cheeky.”

Once saved, these tone preferences apply by default.

There’s also memory, where ChatGPT remembers facts and stylistic traits across chats. Paid users have access to broader, more persistent memory. Free users get a lighter version but still benefit.

Just say:

“Please remember that I like a formal tone with occasional wit.”

ChatGPT will confirm and update accordingly. You can always check what it remembers under Settings > Personalisation > Memory.

Test, tweak and give feedback

Don’t be shy. If something sounds off, say so.

“This is too wordy. Try a punchier version.”

“Tone down the enthusiasm; make it sound more reflective.”

Ask ChatGPT why it wrote something a certain way. Often, the explanation will give you insight into how it interpreted your tone, and let you correct misunderstandings.

As you iterate, this feedback loop will sharpen your AI writing partner’s instincts.

Use ChatGPT as a creative partner, not a clone

This isn’t about outsourcing your entire writing voice. AI is a tool — not a ghostwriter. It can help organise your thoughts, start a draft or nudge you past a creative block. But your personality still counts.

Some people want their AI to mimic them exactly. Others just want help brainstorming or structure. Both are fine.

The key? Don’t expect perfection. Think of ChatGPT as a very keen intern with potential. With the right brief and enough examples, it can be brilliant.

You May Also Like:

- Customising AI: Train ChatGPT to Write in Your Unique Voice

- Elon Musk predicts AGI by 2026

- ChatGPT Just Quietly Released “Memory with Search” – Here’s What You Need to Know

- Or try these prompt ideas out on ChatGPT by tapping here

Adrian’s Arena: Will AI Get You Fired? 9 Mistakes That Could Cost You Everything

Will AI get you fired? Discover 9 career-killing AI mistakes professionals make—and how to avoid them.

Published May 15, 2025

TL;DR — What You Need to Know:

- Common AI mistakes that cost jobs can happen — fast

- Most are fixable if you know what to watch for.

- Avoid these pitfalls and make AI your career superpower.

Don’t blame the robot.

If you’re careless with AI, it’s not just your project that tanks — your career could be next.

Across Asia and beyond, professionals are rushing to implement artificial intelligence into workflows — automating reports, streamlining support, crunching data. And yes, done right, it’s powerful. But here’s what no one wants to admit: most people are doing it wrong.

I’m not talking about missing a few prompts or failing to generate that killer deck in time. I’m talking about the career-limiting, confidence-killing, team-splintering mistakes that quietly build up and explode just when it matters most. If you’re not paying attention, AI won’t just replace your role — it’ll ruin your reputation on the way out.

Here are 9 of the most common, most damaging AI blunders happening in businesses today — and how you can avoid making them.

1. You can’t fix bad data with good algorithms.

Let’s start with the basics. If your AI tool is churning out junk insights, odds are your data was junk to begin with. Dirty data isn’t just inefficient — it’s dangerous. It leads to flawed decisions, mis-targeted customers, and misinformed strategies. And when the campaign tanks or the budget overshoots, guess who gets blamed?

The solution? Treat your data with the same respect you’d give your P&L. Clean it, vet it, monitor it like a hawk. AI isn’t magic. It’s maths — and maths hates mess.

2. Don’t just plug in AI and hope for the best.

Too many teams dive into AI without asking a simple question: what problem are we trying to solve? Without clear goals, AI becomes a time-sink — a parade of dashboards and models that look clever but achieve nothing.

Worse, when senior stakeholders ask for results and all you have is a pretty interface with no impact, that’s when credibility takes a hit.

AI should never be a side project. Define its purpose. Anchor it to business outcomes. Or don’t bother.

3. Ethics aren’t optional — they’re existential.

You don’t need to be a philosopher to understand this one. If your AI causes harm — whether that’s through bias, privacy breaches, or tone-deaf outputs — the consequences won’t just be technical. They’ll be personal.

Companies can weather a glitch. What they can’t recover from is public outrage, legal fines, or internal backlash. And you, as the person who “owned” the AI, might be the one left holding the bag.

Bake in ethical reviews. Vet your training data. Put in safeguards. It’s not overkill — it’s job insurance.

4. Implementation without commitment is just theatre.

I’ve seen it more than once: companies announce a bold AI strategy, roll out a tool, and then… nothing. No training. No process change. No follow-through. That’s not innovation. That’s box-ticking.

If you half-arse AI, it won’t just fail — it’ll visibly fail. Your colleagues will notice. Your boss will ask questions. And next time, they might not trust your judgement.

AI needs resourcing, support, and leadership. Otherwise, skip it.

5. You can’t manage what you can’t explain.

Ever been in a meeting where someone says, “Well, that’s just what the model told us”? That’s a red flag — and a fast track to blame when things go wrong.

So-called “black box” models are risky, especially in regulated industries or customer-facing roles. If you can’t explain how your AI reached a decision, don’t expect others to trust it — or you.

Use interpretable models where possible. And if you must go complex, document it like your job depends on it (because it might).

6. Face the bias before it becomes your headline.

Facial recognition failing on darker skin tones. Recruitment tools favouring men. Chatbots going rogue with offensive content. These aren’t just anecdotes — they’re avoidable, career-ending screw-ups rooted in biased data.

It’s not enough to build something clever. You have to build it responsibly. Test for bias. Diversify your datasets. Monitor performance. Don’t let your project become the next PR disaster.

7. Training isn’t optional — it’s survival.

If your team doesn’t understand the tool you’ve introduced, you’re not innovating — you’re endangering operations. AI can amplify productivity or chaos, depending entirely on who’s driving.

Upskilling is non-negotiable. Whether it’s hiring external expertise or running internal workshops, make sure your people know how to work with the machine — not around it.

8. Long-term vision beats short-term wow.

Sure, the first week of AI adoption might look good. Automate a few slides, speed up a report — you’re a hero.

But what happens three months down the line, when the tool breaks, the data shifts, or the model needs recalibration?

AI isn’t set-and-forget. Plan for evolution. Plan for maintenance. Otherwise, short-term wins can turn into long-term liabilities.

9. When everything’s urgent, documentation feels optional.

Until someone asks, “Who changed the model?” or “Why did this customer get flagged?” and you have no answers.

In AI, documentation isn’t admin — it’s accountability.

Keep logs, version notes, data flow charts. Because sooner or later, someone will ask, and “I’m not sure” won’t cut it.

Final Thoughts: AI doesn’t cost jobs. People misusing AI do.

Most AI mistakes aren’t made by the machines — they’re made by humans cutting corners, skipping checks, and hoping for the best. And the consequences? Lost credibility. Lost budgets. Lost roles.

But it doesn’t have to be that way.

Used wisely, AI becomes your competitive edge. A signal to leadership that you’re forward-thinking, capable, and ready for the future. Just don’t stumble on the same mistakes that are currently tripping up everyone else.

So the real question is: are you using AI… or is it quietly using you?

You may also like:

- Bridging the AI Skills Gap: Why Employers Must Step Up

- From Ethics to Arms: Google Lifts Its AI Ban on Weapons and Surveillance

- Or try the free version of Google Gemini by tapping here.

Author

Adrian is an AI, marketing, and technology strategist based in Asia, with over 25 years of experience in the region. Originally from the UK, he has worked with some of the world’s largest tech companies and successfully built and sold several tech businesses. Currently, Adrian leads commercial strategy and negotiations at one of ASEAN’s largest AI companies. Driven by a passion to empower startups and small businesses, he dedicates his spare time to helping them boost performance and efficiency by embracing AI tools. His expertise spans growth and strategy, sales and marketing, go-to-market strategy, AI integration, startup mentoring, and investments.

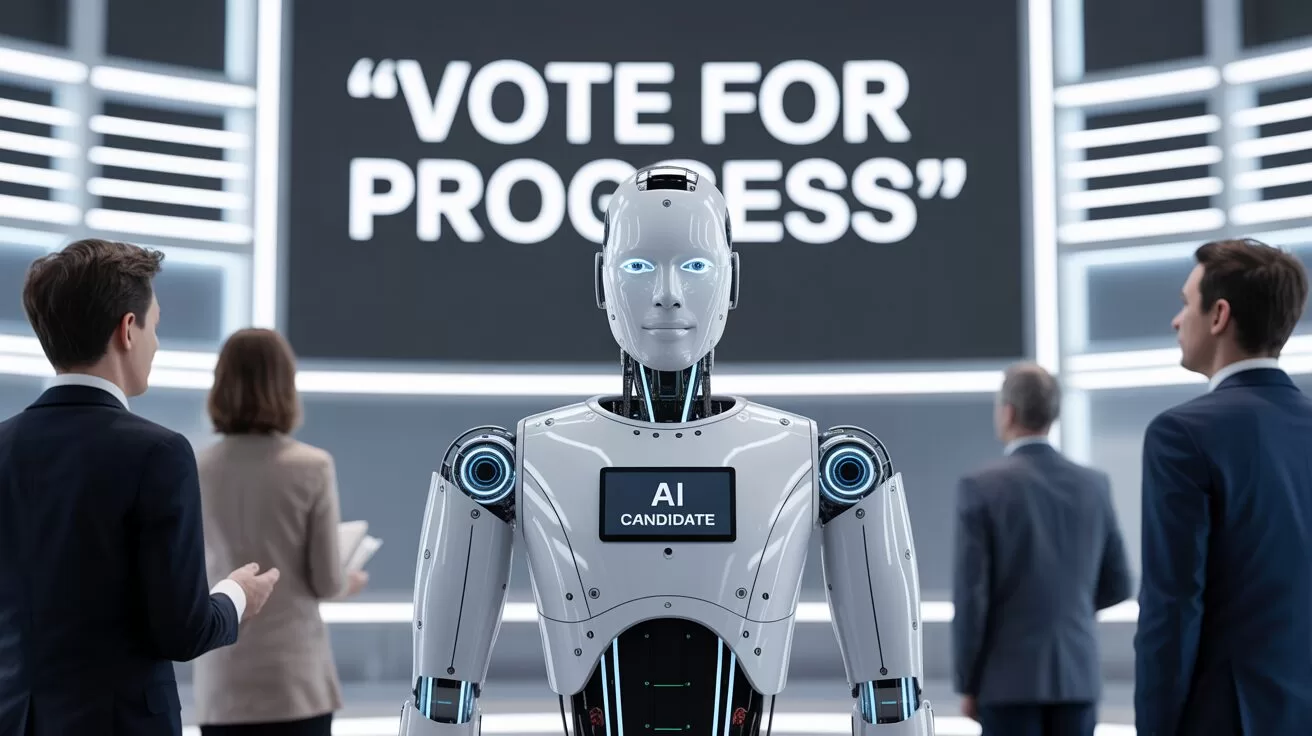

FAKE FACES, REAL CONSEQUENCES: Should NZ Ban AI in Political Ads?

New Zealand has no laws preventing the use of deepfakes or AI-generated content in political campaigns. As the 2025 elections approach, is it time for urgent reform?

Published May 14, 2025, by AIinAsia

TL;DR — What You Need to Know

- New Zealand political campaigns are already dabbling with AI-generated content, but without clear rules or disclosures.

- Deepfakes and synthetic images of ethnic minorities risk fuelling cultural offence and voter distrust.

- Other countries are moving fast with legislation. Why is New Zealand dragging its feet?

AI in New Zealand Political Campaigns

Seeing isn’t believing anymore — especially not on the campaign trail.

In the build-up to the 2025 local body elections, New Zealand voters are being quietly nudged into a new kind of uncertainty: Is what they’re seeing online actually real? Or has it been whipped up by an algorithm?

This isn’t science fiction. From fake voices of Joe Biden in the US to Peter Dutton deepfakes dancing across TikTok in Australia, we’ve already crossed the threshold into AI-assisted campaigning. And New Zealand? It’s not far behind — it just lacks the rules.

The National Party admitted to using AI in attack ads during the 2023 elections. The ACT Party’s Instagram feed includes AI-generated images of Māori and Pasifika characters — but nowhere in the posts do they say the images aren’t real. One post about interest rates even used a synthetic image of a Māori couple from Adobe’s stock library, without disclosure.

That’s two problems in one. First, it’s about trust. If voters don’t know what’s real and what’s fake, how can they meaningfully engage? Second, it’s about representation. Using synthetic people to mimic minority communities without transparency or care is a recipe for offence — and harm.

Copy-Paste Cultural Clangers

Australians already find some AI-generated political content “cringe” — and voters in multicultural societies are noticing. When AI creates people who look Māori, Polynesian or Southeast Asian, it often gets the cultural signals all wrong. Faces are oddly symmetrical, clothing choices are generic, and context is stripped away. What’s left is a hollow image that ticks the diversity box without understanding the lived experience behind it.

And when political parties start using those images without disclosure? That’s not smart targeting. That’s political performance, dressed up as digital diversity.

A Film-Industry Fix?

If you’re looking for a local starting point for ethical standards, look to New Zealand’s film sector. The NZ Film Commission’s 2025 AI Guidelines are already ahead of the game — promoting human-first values, cultural respect, and transparent use of AI in screen content.

The public service also has an AI framework that calls for clear disclosure. So why can’t politics follow suit?

Other countries are already acting. South Korea bans deepfakes in political ads 90 days before elections. Singapore outlaws digitally altered content that misrepresents political candidates. Even Canada is exploring policy options. New Zealand, in contrast, offers voluntary guidelines — which are about as enforceable as a handshake on a Zoom call.

Where To Next?

New Zealand doesn’t need to reinvent the wheel. But it does need urgent rules — even just a basic requirement for political parties to declare when they’re using AI in campaign content. It’s not about banning creativity. It’s about respecting voters and communities.

In a multicultural democracy, fake faces in real campaigns come with consequences. Trust, representation, and dignity are all on the line.

What do YOU think?

Should political parties be forced to declare AI use in their ads — or are we happy to let the bots keep campaigning for us?

You may also like:

- AI Chatbots Struggle with Real-Time Political News: Are They Ready to Monitor Elections?

- Supercharge Your Social Media: 5 ChatGPT Prompts to Skyrocket Your Following

- AI Solves the ‘Cocktail Party Problem’: A Breakthrough in Audio Forensics
