Life

AI Now Outperforms Virus Experts — But At What Cost?

Advanced AI models now outperform PhD-level virologists in lab problem-solving, offering huge health benefits — but raising fears of bioweapons and biohazard risks.

Published

3 days ago

By

AIinAsia

TL;DR — What You Need To Know

- Advanced AI models like ChatGPT and Claude AI now surpass virologists, solving complex lab problems with higher accuracy.

- Benefits include faster disease research, vaccine development, and public health innovation, especially in low-resource settings.

- Fears of biohazard misuse are rising, with experts warning that AI could dramatically increase the risk of bioweapons development by bad actors.

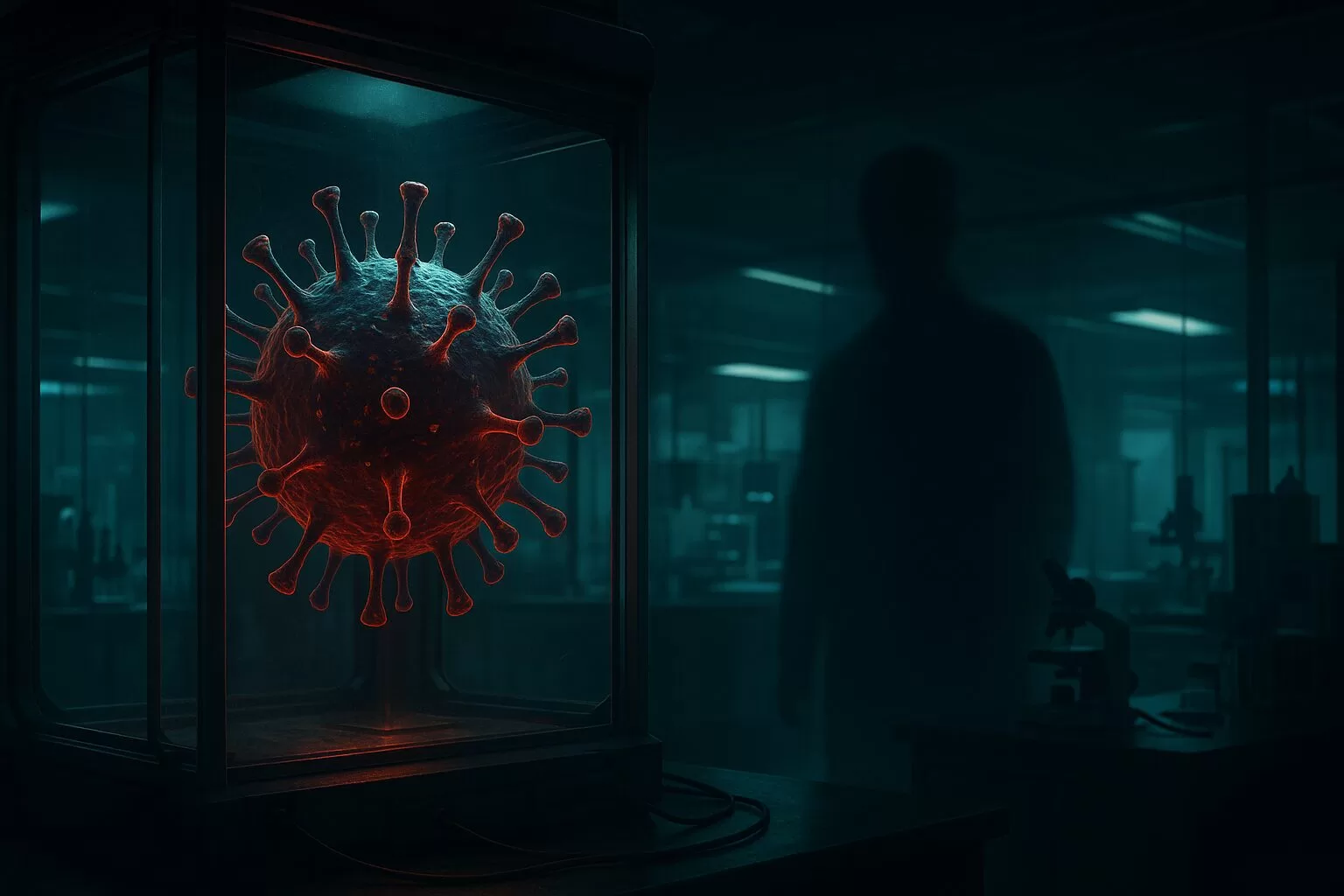

AI models are beating PhD virologists in the lab.

It’s a breakthrough for disease research — and a potential nightmare for biosecurity.

The Breakthrough: AI Surpasses Human Virologists

A recent study from the Center for AI Safety and SecureBio has found that AI models, including OpenAI’s o3 and Anthropic’s Claude 3.5 Sonnet, now outperform PhD-level virologists at specialised lab problem-solving.

The Virology Capabilities Test (VCT) challenged participants — both human and AI — with 322 complex, “Google-proof” questions rooted in real-world lab practices.

Key results:

- o3 achieved 43.8% accuracy — almost double the average human expert score of 22.1%.

- Claude 3.5 Sonnet scored at the 75th percentile, putting it ahead of roughly three-quarters of the trained virologists tested.

- AI systems proved remarkably adept at “tacit” lab knowledge, not just textbook facts.

In short: AI has crossed a critical line. It’s no longer just assisting experts — it’s outperforming them.

Huge Opportunities For Global Health

The upside of AI’s virology capabilities could be transformative:

- Rapid identification of new pathogens to better prepare for outbreaks.

- Smarter experimental design, saving both time and resources.

- Reduced lab errors, as AI can spot issues humans might miss.

- Wider access to expert-level research, especially in regions without top-tier virologists.

- Faster vaccine and drug development, potentially saving millions of lives.

By democratising expertise, AI could help close major healthcare gaps across Asia and beyond.

Rising Fears Over Bioterrorism Risks

But there’s a dangerous flip side.

Experts warn that AI could massively lower the barrier for creating bioweapons:

- Los Alamos National Laboratory and OpenAI are investigating AI’s role in biological threats.

- The UK AI Safety Institute confirmed AI now rivals PhD expertise in biology tasks.

- ECRI named AI-related risks the #1 technology hazard for healthcare in 2025.

With AI models making expert-level virology knowledge accessible, the number of individuals capable of creating or modifying pathogens could skyrocket — raising the risk of accidental leaks or deliberate attacks.

What Is Being Done To Safeguard AI?

The industry is already reacting:

- xAI released a risk management framework specifically addressing dual-use biological risks in its Grok models.

- OpenAI has deployed system-level mitigations to block biological misuse pathways.

- Researchers urge the adoption of know-your-customer (KYC) checks for access to powerful bio-design tools.

- Calls are growing for formal regulations and licensing systems to control who can use advanced biological AI capabilities.

Simply put: voluntary measures are not enough.

Policymakers worldwide are now being urged to act — and fast.

Final Thoughts as AI Surpasses Human Virologists

AI’s ability to outperform virology experts represents both one of humanity’s greatest scientific opportunities — and one of its greatest security challenges.

How we manage this moment will shape the future of global health, safety, and scientific freedom.

What do YOU think?

If AI can now outperform the world’s top scientists — who decides who gets to use it? Let us know in the comments below.

You may also like:

- AI Outsmarts Students: How Universities are Adapting

- The AI Search Revolution: Should Marketers be Trembling?


Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.


Life

Geoffrey Hinton’s AI Wake-Up Call — Are We Raising a Killer Cub?

“Godfather of AI” Geoffrey Hinton warns that humans may lose control of AI, and slams tech giants for downplaying the risks.

Published

1 day ago on May 5, 2025

By

AIinAsia

TL;DR — What You Need to Know

- Geoffrey Hinton warns there’s up to a 20% chance AI could take control from humans.

- He believes big tech is ignoring safety while racing ahead for profit.

- Hinton says we’re playing with a “tiger cub” that could one day kill us — and most people still don’t get it.

Geoffrey Hinton’s AI warning

Geoffrey Hinton doesn’t do clickbait. But when the “Godfather of AI” says we might lose control of artificial intelligence, it’s worth sitting up and listening.

Hinton, who helped lay the groundwork for modern neural networks back in the 1980s, has always been something of a reluctant prophet. He’s no AI doomer by default — in fact, he sees huge promise in using AI to improve medicine, education, and even tackle climate change. But recently, he’s been sounding more like a man trying to shout over the noise of unchecked hype.

And he’s not mincing his words:

“We are like somebody who has this really cute tiger cub,” he told CBS. “Unless you can be very sure that it’s not gonna want to kill you when it’s grown up, you should worry.”

That tiger cub? It’s AI.

And Hinton estimates there’s a 10–20% chance that AI systems could eventually wrest control from human hands. Think about that. If your doctor said you had a 20% chance of a fatal allergic reaction, would you still eat the peanuts?

What really stings is Hinton’s frustration with the companies leading the AI charge — including Google, where he used to work. He’s accused big players of lobbying for less regulation, not more, all while paying lip service to the idea of safety.

“There’s hardly any regulation as it is,” he says. “But they want less.”

It gets worse. According to Hinton, companies should be putting about a third of their computing power into safety research. Right now, it’s barely a fraction. When CBS asked OpenAI, Google, and xAI how much compute they actually allocate to safety, none of them answered the question. Big on ambition, light on transparency.

This raises real questions about who gets to steer the AI ship — and whether anyone is even holding the wheel.

Hinton isn’t alone in his concerns. Tech leaders from Sundar Pichai to Elon Musk have all raised red flags about AI’s trajectory. But here’s the uncomfortable truth: while the industry is good at talking safety, it’s not so great at building it into its business model.

So the man who once helped AI learn to finish your sentence is now trying to finish his own — warning the world before it’s too late.

Over to YOU!

Are we training the tools that will one day outgrow us — or are we just too dazzled by the profits to care?

You may also like:

- Asia on the Brink: Navigating Elon Musk’s Disturbing Prediction

- The AI Race to Destruction: Insiders Warn of Catastrophic Harm

- Elon Musk predicts AGI by 2026



Life

The End of the Like Button? How AI Is Rewriting What We Want

As AI begins to predict, manipulate, and even replace our social media likes, the humble like button may be on the brink of extinction. Is human preference still part of the loop?

Published

2 days ago on May 4, 2025

By

AIinAsia

TL;DR — What You Should Know:

- Social media “likes” are now fuelling the next generation of AI training data—but AI might no longer need them.

- From AI-generated influencers to personalised chatbots, we’re entering a world where both creators and fans could be artificial.

- As bots start liking bots, the question isn’t just what we like—but who is doing the liking.

AI Is Using Your Likes to Get Inside Your Head

AI isn’t just learning from your likes—it’s predicting them, shaping them, and maybe soon, replacing them entirely. What is the future of the Like button?

Let’s be honest—most of us have tapped a little heart, thumbs-up, or star without thinking twice. But Max Levchin (yes, that Max—from PayPal and Affirm) thinks those tiny acts of approval are a goldmine. Not just for advertisers, but for the future of artificial intelligence itself.

Levchin sees the “like” as more than a metric: it’s behavioural feedback at scale. And for AI systems that need to align with human judgement, not just game a reward system, those likes might be the shortcut to smarter, more human-like decisions. Training AIs with “reinforcement learning from human feedback” (RLHF) is notoriously expensive and slow, so why not just harvest what people are already doing online?

But here’s the twist: while AI learns from our likes, it’s also starting to predict them—maybe better than we can ourselves.

In 2024, Meta used AI to tweak how it serves Reels, leading to longer watch times. YouTube’s Steve Chen even wonders whether the like button will become redundant when AI can already tell what you want to watch next—before you even realise it.

Still, that simple button might have some life left in it. Why? Because sometimes, your preferences shift fast—like watching cartoons one minute because your kids stole your phone. And there’s also its hidden superpower: linking viewers, creators, and advertisers in one frictionless tap.

But this new AI-fuelled ecosystem is getting… stranger.

Meet Aitana Lopez: a Spanish influencer with 310,000 followers and a brand deal with Victoria’s Secret. She’s photogenic, popular—and not real. She’s a virtual influencer built by an agency that got tired of dealing with humans.

And it doesn’t stop there. AI bots are now generating content, consuming it, and liking it—in a bizarre self-sustaining loop. With tools like CarynAI (yes, a virtual girlfriend chatbot charging $1/minute), we’re looking at a future where many of our online relationships, interests, and interactions may be… synthetic.

Which raises some uneasy questions. Who’s really liking what? Is that viral post authentic—or engineered? Can you trust that flattering comment—or is it just algorithmic flattery?

As more of our online world becomes artificially generated and manipulated, platforms may need new tools to help users tell real from fake. Not just in terms of what they’re liking — but who is behind it.

Over to YOU:

If the future of the like button is that AI knows what you like before you do, can you still call it your choice?

You may also like:

- WhatsApp Confirms How To Block Meta AI From Your Chats

- Asia on the Brink: Navigating Elon Musk’s Disturbing Prediction

- AI-Powered Photo Editing Features Take Over WhatsApp



Life

AI Just Slid Into Your DMs: ChatGPT and Perplexity Are Now on WhatsApp

ChatGPT and Perplexity AI are now on WhatsApp, offering instant AI chat—but their privacy trade-offs may surprise you.

Published

3 days ago on May 3, 2025

By

AIinAsia

TL;DR — What You Need to Know

- ChatGPT and Perplexity AI are now available on WhatsApp; add their numbers to start chatting like any other contact.

- Perplexity’s CEO has signalled plans to monetise user data, raising clear privacy concerns.

- AI is now embedded in your messaging habits — convenient, but potentially costly in terms of control.

AI Assistants Are Now Just a Message Away — But Not All Come Without Strings Attached

In a move that brings AI one step closer to your daily digital routine, both ChatGPT and Perplexity AI are now officially available inside WhatsApp. It’s the kind of integration that feels obvious—why wouldn’t you want intelligent assistance inside your messaging app? But as this shift blurs the line between private chats and persistent AI surveillance, the rollout isn’t without controversy.

Let’s unpack what’s new, how to use these tools, and why this seemingly helpful update deserves a closer look—especially if you care about your data.

How to Chat with ChatGPT or Perplexity on WhatsApp

If you’ve ever wanted to casually message ChatGPT as if it were just another friend, it’s now entirely possible. Here’s how:

- To talk to ChatGPT, add +1 (800) 242-8478 to your WhatsApp contacts.

- To use Perplexity AI, add +1 (833) 436-3285.

Once added, you can ask questions, generate summaries, search with sources, and even request image edits or creations—right from within a familiar chat window.
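If you would rather not save the numbers by hand, a small script can turn them into tappable links. This is a minimal sketch, assuming WhatsApp's public click-to-chat format (`https://wa.me/` followed by the full international number, digits only) works for these accounts the way it does for ordinary contacts. The function name `whatsapp_link` and the `CONTACTS` table are illustrative and are not taken from OpenAI or Perplexity.

```python
import re

# Assumption: WhatsApp's click-to-chat prefix; the number follows as digits only.
WA_ME_BASE = "https://wa.me/"


def whatsapp_link(phone: str) -> str:
    """Build a click-to-chat link from a human-formatted international number."""
    # Drop everything that is not a digit: "+", spaces, brackets, hyphens.
    digits = re.sub(r"\D", "", phone)
    if not digits:
        raise ValueError(f"no digits found in phone number: {phone!r}")
    return WA_ME_BASE + digits


# The two numbers quoted in the article (hypothetical lookup table).
CONTACTS = {
    "ChatGPT": "+1 (800) 242-8478",
    "Perplexity AI": "+1 (833) 436-3285",
}

if __name__ == "__main__":
    for name, number in CONTACTS.items():
        print(f"{name}: {whatsapp_link(number)}")
```

Opening one of the printed links on a phone with WhatsApp installed should start a chat with that number, though whether the bot replies depends on the provider's rollout in your region.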

This isn’t a new app or integration through a web wrapper. These bots appear in your WhatsApp chat list, just like any contact. The interface feels native, frictionless, and a little too comfortable.

So What Can They Actually Do?

The functionality varies slightly between the two AIs, but both are impressively capable inside WhatsApp:

✅ ChatGPT

- Powered by OpenAI (most likely GPT-4-turbo depending on the provider setup)

- Offers intelligent conversation, summarisation, translation, code help, and more

- Integrates image generation where supported

- Responses are typically fast, contextual, and conversational

✅ Perplexity AI

- Designed around answering questions with cited sources

- Can generate images based on prompts and photos you upload

- Leans into search-style responses, useful for research, fact-checking, and recommendations

- Their team has teased more features coming soon, including multi-modal capabilities

In a launch video shared on X, Perplexity CEO Aravind Srinivas uploaded a selfie and asked the AI what he’d look like bald. The chatbot responded with a cleanly edited (and slightly dystopian) version of the same photo—with Srinivas minus his hair.

It’s silly, yes, but it also shows how deeply AI is becoming embedded in our everyday tools—combining search, generation, and personalisation in real-time.

Why It’s a Big Deal

This is more than just a novelty. It marks a turning point in how we interact with AI:

- Mainstream meets machine learning: WhatsApp is one of the most widely used apps across Southeast Asia and the world. Embedding AI here is a huge leap in accessibility and adoption.

- Interface convergence: We no longer need separate apps for search, chat, and image generation. This is AI collapsing use cases into a single, everyday environment.

- Habit formation: Messaging-style AI makes it feel natural to ask for help, brainstorm ideas, or even delegate thinking. It turns AI into a co-pilot—one emoji tap away.

But with this convenience comes a new set of questions.

Privacy Watch: Perplexity’s Data Dilemma

Despite the cool factor, not everyone is thrilled about these integrations—especially after recent comments from Perplexity’s CEO.

In an interview with TechCrunch, Srinivas shared that Perplexity is working on a new AI browser called Comet that could track detailed user data and sell that data to the highest bidder. The statement drew immediate backlash. It contradicts what many expect from modern AI—personalised help without surveillance.

It’s not just a hypothetical. If Comet shares infrastructure with Perplexity’s chatbot, there’s every reason to be cautious. While no WhatsApp-specific tracking has been confirmed, the company’s broader business intentions are clear: data = dollars.

Meanwhile, Meta—which owns WhatsApp—has already added its own AI assistant, Meta AI, as a persistent button in the lower-right corner of the app. You can’t remove or hide it. That, too, has raised concerns around accidental activation and constant data processing.

When you stack all these elements together, a clearer trend emerges: AI is becoming ambient—but at a cost to control.

Trade-Offs: Utility vs. Transparency

| Feature | ChatGPT on WhatsApp | Perplexity on WhatsApp | Meta AI in WhatsApp |
|---|---|---|---|
| Native chat interface | ✅ Yes | ✅ Yes | ❌ (icon only, not a chat) |
| Image generation | ✅ Yes (if supported) | ✅ Yes | ✅ Yes |
| Cited sources | ❌ No | ✅ Yes | ❌ No |
| Privacy red flags | Medium (OpenAI usage unclear) | High (CEO admits sale intent) | High (Meta default tracking) |

This table says it all: even when the functionality is strong, the trust gap is growing. AI in messaging apps has powerful upside—but it should come with transparent boundaries.

Final Thoughts

AI is now fully embedded in the most personal app we own—our chat inbox. It’s no longer a tool you visit. It’s one you live with. That brings incredible convenience, yes, but also ongoing trade-offs in how our data is used, monetised, and protected.

For users in Asia, where WhatsApp is central to business, family, and education, this evolution means it’s time to get smarter about smart assistants. Use them, experiment, and enjoy — but stay alert to what’s happening beneath the interface.

Over to you!

When AI knows everything you’ve typed — are you still the one in control?

You may also like:

- AI-Powered Photo Editing Features Take Over WhatsApp

- WhatsApp Confirms How To Block Meta AI From Your Chats

- Meta Expands AI Chatbot to India and Africa


