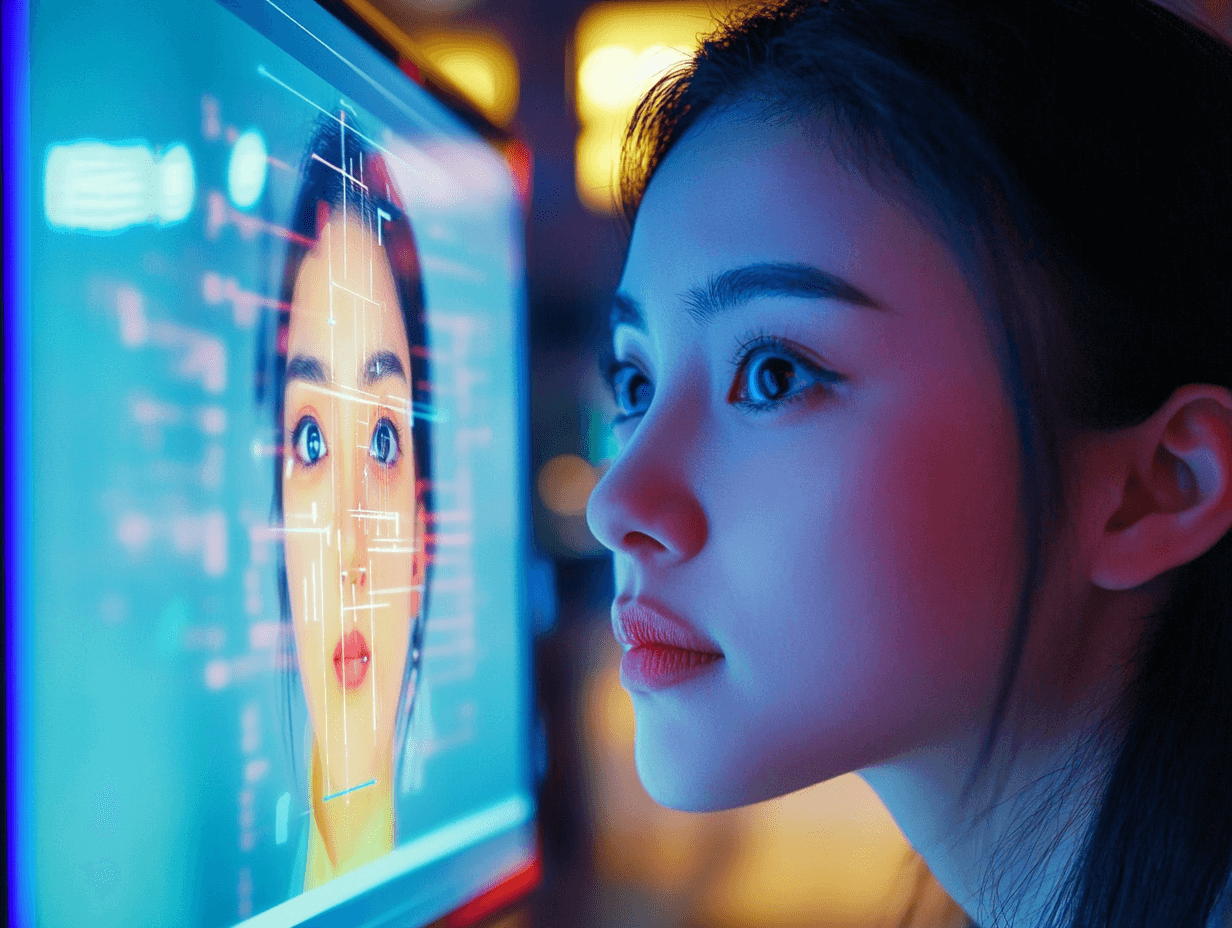

Stanford psychologist Michal Kosinski claims his AI can predict intelligence, sexual orientation, and political leanings from facial images.

His research raises ethical concerns and has implications for privacy and civil liberties.

Because these models are not 100% accurate, they could lead to misuse and discrimination.

Imagine a world where artificial intelligence (AI) can gauge your intelligence, sexual orientation, and political leanings just by looking at your face. This isn't a scene from a dystopian sci-fi movie; it's the reality we're facing today. Michal Kosinski, a psychologist at Stanford University, claims his AI can do just that. His findings, published in several studies, have sparked a heated debate about the ethical implications of such technology.

The Science Behind the Claims

Kosinski's research focuses on using facial recognition to predict personal traits. In one study, he built a model that correctly classified political orientation in 72% of liberal-conservative face pairs, compared with 55% for human judges and 50% for chance. The model makes these predictions by analysing facial features.

"Given the widespread use of facial recognition, our findings have critical implications for the protection of privacy and civil liberties." - Michal Kosinski

Ethical Concerns and Implications

While Kosinski argues that his research is a warning to policymakers about the potential dangers of facial recognition, many see it as opening a Pandora's box. The potential applications of this technology are alarming. For instance, if AI can predict sexual orientation with high accuracy, it could be used to discriminate against queer people.

In 2017, Kosinski co-authored a paper describing a facial recognition model that, given five photos of each person, distinguished between gay and straight men with 91% accuracy (83% for women). The Human Rights Campaign and GLAAD criticised the research as "dangerous and flawed."

Real-World Examples of Misuse

We already have real-world examples of facial recognition gone wrong. The US Federal Trade Commission accused Rite Aid of using facial recognition technology that wrongly flagged customers, disproportionately people of colour, as shoplifters. Similarly, a man sued Macy's after facial recognition allegedly led to him being wrongly blamed for a violent robbery he didn't commit.

These incidents highlight the potential for misuse and the need for strict regulation. Because these models are never 100% accurate, innocent people can be wrongly targeted.

The Dangers of Publishing Such Research

While Kosinski's intention may be to warn about the dangers of facial recognition, publishing such research can also provide detailed instructions for misuse. It's like giving burglars a blueprint to rob your house.

The Future of Facial Recognition

The future of facial recognition is uncertain. On one hand, it has the potential to revolutionise various industries. On the other, it poses significant threats to privacy and civil liberties. The same tension appears in discussions of AI and (dis)ability, where technology promises to unlock human potential.

Potential Benefits

Security: Facial recognition can enhance security measures in public places.

Efficiency: It can streamline processes like border control and airport security.

Potential Risks

Discrimination: The technology can be used to target and discriminate against certain groups.

Privacy invasion: It can invade personal privacy by revealing sensitive information.

A Call for Regulation

The ethical implications of facial recognition technology are too significant to ignore. While the potential benefits are enticing, the risks are too high. There is an urgent need for regulation to ensure that this technology is used responsibly. Taiwan’s AI law, for instance, is quietly redefining what “responsible innovation” means, and the European Union has been working on comprehensive AI regulation, as detailed in reports from the European Parliament.

Comment and Share

What are your thoughts on the ethical implications of facial recognition technology? Do you think it should be regulated more strictly? Share your opinions in the comments below, and subscribe to our newsletter for updates on AI and AGI developments.

Latest Comments (3)

This is a right good piece, thank you for sharing it. It makes one wonder, if AI can truly infer our "deepest secrets" from a mere snapshot, what does that mean for our everyday privacy, especially with the sheer number of surveillance cameras sprouting up everywhere these days? The implications are quite unnerving, truth be told.

While Kosinski's work is certainly thought provoking, I reckon we're jumping the gun a bit. Surely, our 'deepest secrets' are still safe from a mere algorithm. It's more about pattern recognition, innit? Perhaps we overstate AI's mystical abilities and understate human complexity. Just my two cents.

Coming back to this, it’s not just about predicting deep secrets is it? The real worry, for me lah, is how these systems could *create* new biases. Like if the algorithm is trained on skewed data, it might start flagging innocent folks as suspicious. That’s a whole different kettle of fish than just 'revealing' something.