News

Voice From the Grave: Netflix’s AI Clone of Murdered Influencer Sparks Outrage

Netflix’s use of AI to recreate murder victim Gabby Petito’s voice in American Murder: Gabby Petito has ignited a firestorm of controversy.

Published 3 months ago by AIinAsia

TL;DR – What You Need to Know in 30 Seconds

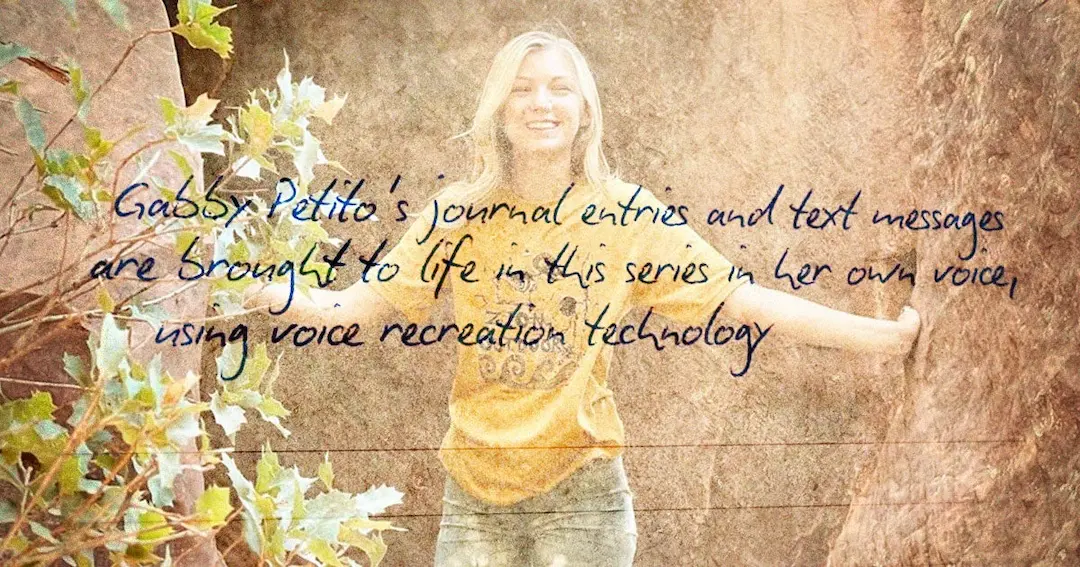

- Gabby Petito’s Voice Cloned by AI: Netflix used generative AI to replicate the murdered influencer’s voice, narrating her own texts and journals in American Murder: Gabby Petito.

- Massive Public Outcry: Viewers call it “deeply unsettling” and say it violates a murder victim’s memory without her explicit consent.

- Family Approved – But Is That Enough? Gabby’s parents reportedly supported the decision, but critics argue that the result lacks emotional authenticity and sets an alarming precedent.

- Growing Trend in AI ‘Docu-Fiction’: Netflix has toyed with AI imagery before. With the rising popularity of AI tools, this might just be the beginning of posthumous voice cloning in media.

Netflix’s AI Voice Recreation of Gabby Petito Sparks Outrage: The True Crime Documentary Stirs Ethical Concerns

In a world where true crime has become almost as popular as cat videos, Netflix’s latest release, American Murder: Gabby Petito, has turned heads in a way nobody quite expected. The streaming giant, never one to shy away from sensational storytelling, has gone high-tech – or, depending on your perspective, crossed a line – by using generative AI to recreate the voice of Gabby Petito, a 22-year-old social media influencer tragically murdered in August 2021.

But has this avant-garde approach led to a meaningful tribute, or merely morphed a devastating real-life tragedy into a tech sideshow? Let’s delve into the unfolding drama, hear what critics are saying, and weigh up whether Netflix has jumped the shark with its AI voice cloning.

The Case That Shocked Social Media

Gabby Petito’s story captured headlines in 2021. According to the FBI, the young influencer was murdered by her fiancé, Brian Laundrie, during a cross-country road trip. Their relationship, documented extensively on social media, gave the world a stark and heart-wrenching view of what was happening behind the scenes. Gabby’s disappearance, followed by the discovery of her body, sparked massive public scrutiny and online sleuthing.

So, when Netflix announced it was developing a true crime documentary about Gabby’s case, titled American Murder: Gabby Petito, the internet was all ears. True crime fans tuned in for the premiere on Monday, only to find a surprising disclosure in the opening credits: Petito’s text messages and journal entries would be “brought to life” in what Netflix claims is “her own voice, using voice recreation technology” (Netflix, 2023).

A Techy Twist… or Twisted Tech?

Let’s talk about that twist. Viewers soon discovered that the series literally put Gabby’s words in Gabby’s mouth, using AI-generated audio. But is this a touching homage, or a gruesome gimmick?

- One viewer on X (formerly Twitter) called the move a “deeply unsettling use of AI.” (X user, 2023)

- Another posted: “That is absolutely NOT okay. She’s a murder victim. You are violating her again.” (X user, 2023)

These remarks sum up the general outcry across social platforms. The main concern? That Gabby’s voice was effectively hijacked and resurrected without her explicit consent. Her parents, however, appear to have greenlit the decision, offering Gabby’s journals and personal writings to help shape the script.

As the filmmakers put it: “We had so much material from her parents that we were able to get. At the end of the day, we wanted to tell the story as much through Gabby as possible. It’s her story.”

Their stance is clear: the production team sees voice recreation as a way to bring Gabby’s point of view into sharper focus. Many viewers remain uneasy, however, saying they would prefer archival recordings if authenticity was the goal.

A Tradition of Controversy

Netflix is no stranger to boundary-pushing in its true crime productions. Last year, eagle-eyed viewers noticed that What Jennifer Did, Netflix’s documentary about the Jennifer Pan case, featured images that appeared to be AI-generated or AI-manipulated. In many respects, this is just another addition to a growing trend of digital trickery in modern storytelling.

Outlets like 404 Media have further reported a surge in YouTube channels pumping out “true crime AI slop” – random, possibly fabricated stories produced by generative AI and voice cloning. As these tools become more widespread, it’s increasingly hard to tell fact from fiction, let alone evaluate ethical ramifications.

“It can’t predict what her intonation would have been, and it’s just gross to use it,” one Reddit user wrote in response to the Gabby Petito doc. “At the very least I hope they got consent from her family… I just don’t like the precedent it sets for future documentaries either.” (Redditor, 2023)

This points to a key dilemma: how do we prevent well-meaning storytellers from overstepping moral boundaries with technology designed to replicate or reconstruct the dead? Are we treading on sensitive territory, or is this all simply the new face of docu-drama?

Where Do We Draw the Line After the Netflix AI Voice Controversy?

For defenders of American Murder: Gabby Petito, it boils down to parental endorsement. If Gabby’s parents gave the green light, then presumably they believed this approach would honour their daughter’s memory. Some argue that hearing Gabby’s voice – albeit synthesised – might create a deeper emotional connection for viewers and reinforce that she was more than just a headline.

“In all of our docs, we try to go for the source and the people closest to either the victims who are not alive or the people themselves who have experienced this,” explains producer Julia Willoughby Nason. “That’s really where we start in terms of sifting through all the data and information that comes with these huge stories.” (Us Weekly, 2023)

On the other hand, there’s a fair amount of hand-wringing, and for good reason. The true crime genre is already fraught with ethical pitfalls and is often seen as commodifying tragedy for mainstream entertainment. Cloning a victim’s voice to read out her private words can come off as invasive, especially for a crime so recent and so visible on social media.

One user captured the discomfort perfectly:

“I understand they had permission from the parents, but that doesn’t make it feel any better,” the user wrote, adding that the AI voice sounded “monotone, lacking in emotion… an insult to her.” (X user, 2023)

And that’s the heart of it. Even with family approval, it’s a sensitive business reanimating someone’s voice once they can no longer speak for themselves. For many, the question becomes: is it a truly fitting tribute, or simply a sensational feature?

Ethics or Entertainment?

Regardless of where you land on the debate, it’s clear that Netflix’s choice marks a new chapter in how technology can (and likely will) be used to re-create missing or murdered people in media. As AI becomes more powerful and more prevalent, it’s not much of a stretch to imagine docu-series about other public figures employing the same approach.

“This world hates women so much,” another user tweeted, expressing the view that, once again, a woman’s agency in her own narrative has been undermined. (X user, 2023)

Is that a fair assessment, or simply an overstatement born of the outrage cycle? If Gabby’s story can be told in her own words, with parental involvement, should we not appreciate the effort? Or is the line drawn at artificially resurrecting her voice – a voice that was forcibly taken from her?

So, we’re left to debate a tech-fuelled question: Is it beautiful homage, or a blatant overreach? Given that we’re fast marching into an era where AI blurs the lines of reality, the Netflix approach is just one sign of things to come.

With generative AI firmly planting its flag in creative storytelling, where do you think the moral boundary lies?


News

If AI Kills the Open Web, What’s Next?

Exploring how AI is transforming the open web, the rise of agentic AI, and emerging monetisation models like microtransactions and stablecoins.

Published 4 days ago, on May 28, 2025, by AIinAsia

The web is shifting from human-readable pages to machine-mediated experiences as AI reshapes how the open web works. What comes next may be less open, but potentially more useful.

TL;DR — What You Need To Know

- AI is reshaping web navigation: Google’s AI Overviews and similar tools provide direct answers, reducing the need to visit individual websites.

- Agentic AI is on the rise: Autonomous AI agents are beginning to perform tasks like browsing, shopping, and content creation on behalf of users.

- Monetisation models are evolving: Traditional ad-based revenue is declining, with microtransactions and stablecoins emerging as alternative monetisation methods.

- The open web faces challenges: The shift towards AI-driven interactions threatens the traditional open web model, raising concerns about content diversity and accessibility.

The Rise of Agentic AI

The traditional web, characterised by human users navigating through hyperlinks and search results, is undergoing a transformation. AI-driven tools like Google’s AI Overviews now provide synthesised answers directly on the search page, reducing the need for users to click through to individual websites.

This shift is further amplified by the emergence of agentic AI—autonomous agents capable of performing tasks such as browsing, shopping, and content creation without direct human intervention. For instance, Opera’s new AI browser, Opera Neon, can automate internet tasks using contextual awareness and AI agents.

These developments suggest a future where AI agents act as intermediaries between users and the web, fundamentally altering how information is accessed and consumed.

Monetisation in the AI Era

The traditional ad-based revenue model that supported much of the open web is under threat. As AI tools provide direct answers, traffic to individual websites declines, impacting advertising revenues.

In response, new monetisation strategies are emerging. Microtransactions facilitated by stablecoins offer a way for users to pay small amounts for content or services, enabling creators to earn revenue directly from consumers. Platforms like AiTube are integrating blockchain-based payments, allowing creators to receive earnings through stablecoins across multiple protocols.

This model not only provides a potential revenue stream for content creators but also aligns with the agentic web’s emphasis on seamless, automated interactions.

The Future of the Open Web

The open web, once a bastion of free and diverse information, is facing significant challenges. The rise of AI-driven tools and platforms threatens to centralise information access, potentially reducing the diversity of content and perspectives available to users.

However, efforts are underway to preserve the open web’s principles. Initiatives like Microsoft’s NLWeb aim to create open standards that allow AI agents to access and interact with web content in a way that maintains openness and interoperability.

The future of the web may depend on balancing the efficiency and convenience of AI-driven tools with the need to maintain a diverse and accessible information ecosystem.

What Do YOU Think?

As AI impacts the future of the open web, we must consider how to preserve the values of openness, diversity, and accessibility. How can we ensure that the web remains a space for all voices, even as AI agents become the primary means of navigation and interaction?


News

GPT-5 Is Less About Revolution, More About Refinement

This article explores OpenAI’s development of GPT-5, focusing on improving user experience by unifying AI tools and reducing the need for manual model switching. It includes insights from VP of Research Jerry Tworek on token growth, benchmarks, and the evolving role of humans in the AI era.

Published 1 week ago, on May 22, 2025, by AIinAsia

OpenAI’s next model isn’t chasing headlines. With GPT-5, the company is building a smoother, smarter user experience around unified tools and fewer interruptions.

TL;DR — What You Need To Know

- GPT-5 aims to unify OpenAI’s tools, reducing the need for switching between models

- The Operator screen agent is due for an upgrade, with a push towards becoming a desktop-level assistant

- Token usage continues to rise, suggesting growing AI utility and infrastructure demand

- Benchmarks are losing their relevance, with real-world use cases taking centre stage

- OpenAI believes AI won’t replace humans but may reshape human labour roles

A more cohesive AI experience, not a leap forward

While GPT-4 dazzled with its capabilities, GPT-5 appears to be a quieter force, according to OpenAI’s VP of Research, Jerry Tworek. Speaking during a recent Reddit Q&A with the Codex team, Tworek described the new model as a unifier—not a disruptor.

“We just want to make everything our models can currently do better and with less model switching,” Tworek said. That means streamlining the experience so users aren’t constantly toggling between tools like Codex, Operator, Deep Research and memory functions.

For OpenAI, the future lies in integration over invention. Instead of introducing radically new features, GPT-5 focuses on making the existing stack work together more fluidly. This approach marks a clear departure from the hype-heavy rollouts often associated with new model versions.

Operator: from browser control to desktop companion

One of the most interesting pieces in this puzzle is Operator, OpenAI’s still-experimental screen agent. Currently capable of basic browser navigation, it’s more novelty than necessity. But that may soon change.

An update to Operator is expected “soon,” with Tworek hinting it could evolve into a “very useful tool.” The goal? A kind of AI assistant that handles your screen like a power user, automating online tasks without constantly needing user prompts.

The update is part of a broader push to make AI tools feel like one system, rather than a toolkit you have to learn to assemble. That shift could make screen agents like Operator truly indispensable—especially in Asia, where mobile-first behaviour and app fragmentation often define the user journey.

Integration efforts hit reality checks

Originally, OpenAI promised that GPT-5 would merge the GPT and “o” model series into a single omnipotent system. But as with many grand plans in AI, the reality was less elegant.

In April, CEO Sam Altman admitted the challenge: full integration proved more complex than expected. Instead, the company released o3 and o4-mini as standalone models, tailored for reasoning.

Tworek confirmed that the vision of reduced model switching is still alive—but not at the cost of model performance. Users will still see multiple models under the hood; they just might not have to choose between them manually.

Tokens and the long road ahead

If you think the token boom is a temporary blip, think again. Tworek addressed a user scenario where AI assistants might one day process 100 tokens per second continuously, reading sensors, analysing messages, and more.

That, he says, is entirely plausible. “Even if models stopped improving,” Tworek noted, “they could still deliver a lot of value just by scaling up.”

This perspective reflects a strategic bet on infrastructure. OpenAI isn’t just building smarter models; it’s betting on broader usage. Token usage becomes a proxy for economic value—and infrastructure expansion the necessary backbone.

Goodbye benchmarks, hello real work

When asked to compare GPT with rivals like Claude or Gemini, Tworek took a deliberately contrarian stance. Benchmarks, he suggested, are increasingly irrelevant.

“They don’t reflect how people actually use these systems,” he explained, noting that many scores are skewed by targeted fine-tuning.

Instead, OpenAI is doubling down on real-world tasks as the truest test of model performance. The company’s ambition? To eliminate model choice altogether. “Our goal is to resolve this decision paralysis by making the best one.”

The human at the helm

Despite AI’s growing power, Tworek offered a thoughtful reminder: some jobs will always need humans. While roles will evolve, the need for oversight won’t go away.

“In my view, there will always be work only for humans to do,” he said. The “last job,” he suggested, might be supervising the machines themselves—a vision less dystopian, more quietly optimistic.

For Asia’s fast-modernising economies, that might be a signal to double down on education, critical thinking, and human-centred design. The jobs of tomorrow may be less about doing, and more about directing.


Business

Apple’s China AI pivot puts Washington on edge

Apple’s partnership with Alibaba to deliver AI services in China has sparked concern among U.S. lawmakers and security experts, highlighting growing tensions in global technology markets.

Published 2 weeks ago, on May 21, 2025, by AIinAsia

As Apple courts Alibaba for its iPhone AI partnership in China, U.S. lawmakers see more than just a tech deal taking shape.

TL;DR — What You Need To Know

- Apple has reportedly selected Alibaba’s Qwen AI model to power its iPhone features in China

- U.S. lawmakers and security officials are alarmed over data access and strategic implications

- The deal has not been officially confirmed by Apple, but Alibaba’s chairman has acknowledged it

- China remains a critical market for Apple amid declining iPhone sales

- The partnership highlights the growing difficulty of operating across rival tech spheres

Apple Intelligence meets the Great Firewall

Apple’s strategic pivot to partner with Chinese tech giant Alibaba for delivering AI services in China has triggered intense scrutiny in Washington. The collaboration, necessitated by China’s blocking of OpenAI services, raises profound questions about data security, technological sovereignty, and the intensifying tech rivalry between the United States and China. As Apple navigates declining iPhone sales in the crucial Chinese market, this partnership underscores the increasing difficulty for multinational tech companies to operate seamlessly across divergent technological and regulatory environments.

Apple Intelligence Meets Chinese Regulations

When Apple unveiled its ambitious “Apple Intelligence” system in June 2024, it marked the company’s most significant push into AI-enhanced services. For Western markets, Apple integrated OpenAI’s ChatGPT as a cornerstone partner for English-language capabilities. However, this strategy hit an immediate roadblock in China, where OpenAI’s services remain effectively banned under the country’s stringent digital regulations.

Faced with this market-specific challenge, Apple initiated discussions with several Chinese AI leaders to identify a compliant local partner capable of delivering comparable functionality to Chinese consumers. The shortlist reportedly included major players in China’s burgeoning AI sector:

- Baidu, known for its Ernie Bot AI system

- DeepSeek, an emerging player in foundation models

- Tencent, the social media and gaming powerhouse

- Alibaba, whose open-source Qwen model has gained significant attention

While Apple has maintained its characteristic silence regarding partnership details, recent developments strongly suggest that Alibaba’s Qwen model has emerged as the chosen solution. The arrangement was seemingly confirmed when Alibaba’s chairman made an unplanned reference to the collaboration during a public appearance.

“Apple’s decision to implement a separate AI system for the Chinese market reflects the growing reality of technological bifurcation between East and West. What we’re witnessing is the practical manifestation of competing digital sovereignty models.”

Washington’s Mounting Concerns

The revelation of Apple’s China-specific AI strategy has elicited swift and pronounced reactions from U.S. policymakers. Members of the House Select Committee on China have raised alarms about the potential implications, with some reports indicating that White House officials have directly engaged with Apple executives on the matter.

Representative Raja Krishnamoorthi of the House Intelligence Committee didn’t mince words, describing the development as “extremely disturbing.” His reaction encapsulates broader concerns about American technological advantages potentially benefiting Chinese competitors through such partnerships.

Greg Allen, Director of the Wadhwani A.I. Centre at CSIS, framed the situation in competitive terms:

“The United States is in an AI race with China, and we just don’t want American companies helping Chinese companies run faster.”

The concerns expressed by Washington officials and security experts include:

- Data Sovereignty Issues: Questions about where and how user data from AI interactions would be stored, processed, and potentially accessed

- Model Training Advantages: Concerns that the vast user interactions from Apple devices could help improve Alibaba’s foundational AI models

- National Security Implications: Worries about whether sensitive information could inadvertently flow through Chinese servers

- Regulatory Compliance: Questions about how Apple will navigate China’s content restrictions and censorship requirements

In response to these growing concerns, U.S. agencies are reportedly discussing whether to place Alibaba and other Chinese AI companies on a restricted entity list. Such a designation would formally limit collaboration between American and Chinese AI firms, potentially derailing arrangements like Apple’s reported partnership.

Commercial Necessities vs. Strategic Considerations

Apple’s motivation for pursuing a China-specific AI solution is straightforward from a business perspective. China remains one of the company’s largest and most important markets, despite recent challenges. Earlier this spring, iPhone sales in China declined by 24% year over year, highlighting the company’s vulnerability in this critical market.

Without a viable AI strategy for Chinese users, Apple risks further erosion of its market position at precisely the moment when AI features are becoming central to consumer technology choices. Chinese competitors like Huawei have already launched their own AI-enhanced smartphones, increasing pressure on Apple to respond.

“Apple faces an almost impossible balancing act. They can’t afford to offer Chinese consumers a second-class experience by omitting AI features, but implementing them through a Chinese partner creates significant political exposure in the U.S.”

The situation is further complicated by China’s own regulatory environment, which requires foreign technology companies to comply with data localisation rules and content restrictions. These requirements effectively necessitate some form of local partnership for AI services.

A Blueprint for the Decoupled Future?

Whether Apple’s partnership with Alibaba proceeds as reported or undergoes modifications in response to political pressure, the episode provides a revealing glimpse into the fragmenting global technology landscape.

As digital ecosystems align ever more closely with geopolitical boundaries, multinational technology firms face increasingly complex strategic decisions:

- Regionalised Technology Stacks: Companies may need to develop and maintain separate technological implementations for different markets

- Partnership Dilemmas: Collaborations beneficial in one market may create political liabilities in others

- Regulatory Navigation: Operating across divergent regulatory environments requires sophisticated compliance strategies

- Resource Allocation: Developing market-specific solutions increases costs and complexity

What we’re seeing with Apple and Alibaba may become the norm rather than the exception. The era of frictionless global technology markets is giving way to one where regional boundaries increasingly define technological ecosystems.

Looking Forward

For now, Apple Intelligence has no confirmed launch date for the Chinese market. However, with new iPhone models traditionally released in autumn, Apple faces mounting time pressure to finalise its AI strategy.

The company’s eventual approach could signal broader trends in how global technology firms navigate an increasingly bifurcated digital landscape. Will companies maintain unified global platforms with minimal adaptations, or will we see the emergence of fundamentally different technological experiences across major markets?

As this situation evolves, it highlights a critical reality for the technology sector: in an era of intensifying great power competition, even seemingly routine business decisions can quickly acquire strategic significance.
