News

DeepSeek Dilemma: AI Ambitions Collide with South Korean Privacy Safeguards

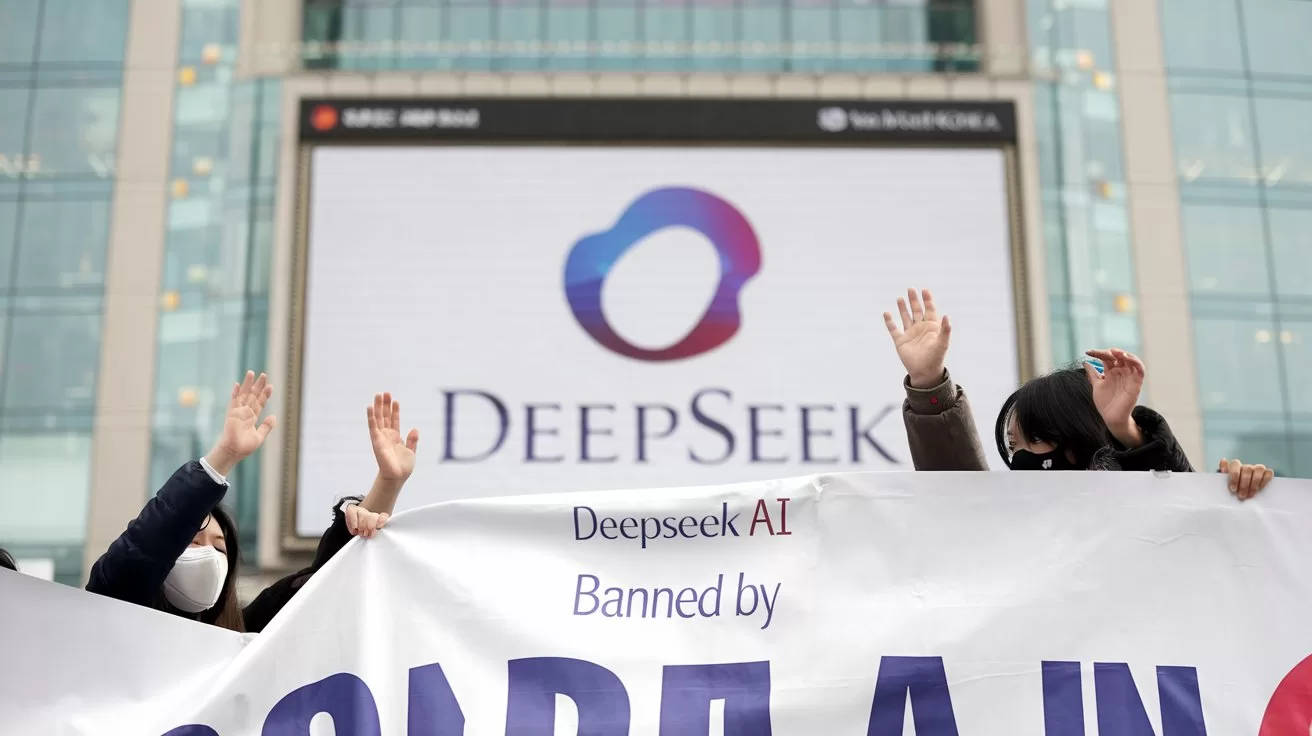

South Korea blocks new downloads of China’s DeepSeek AI app over data privacy concerns, highlighting Asia’s growing scrutiny of AI innovators.

Published 2 months ago

By AIinAsia

TL;DR – What You Need to Know in 30 Seconds

- DeepSeek Blocked: South Korea’s PIPC temporarily halted new downloads of DeepSeek’s AI app over data privacy concerns.

- Data to ByteDance: The Chinese lab reportedly transferred user data to ByteDance, triggering regulatory alarm bells.

- Existing Users: Current DeepSeek users in South Korea can still access the service, but are advised not to input personal info.

- Global Caution: Australia, Italy, and Taiwan have also taken steps to block or limit DeepSeek usage on security grounds.

- Founders & Ambitions: DeepSeek (founded by Liang Wenfeng in 2023) aims to rival ChatGPT with its open-source AI model.

- Future Uncertain: DeepSeek needs to comply with South Korean privacy laws to lift the ban, raising questions about trust and tech governance in Asia.

DeepSeek AI Privacy in South Korea—What Do We Already Know?

Regulators in Asia are flexing their muscles to ensure compliance with data protection laws. The most recent scuffle? South Korea’s Personal Information Protection Commission (PIPC) has temporarily blocked local downloads of Chinese AI lab DeepSeek’s flagship app, citing (surprise, surprise) privacy concerns. This entire saga underscores how swiftly governments are moving to keep a watchful eye on foreign AI services and the data that’s whizzing back and forth in the background.

So, pop the kettle on, and let’s dig into everything you need to know about DeepSeek, the backlash it’s received, the bigger picture for AI regulation in Asia, and why ByteDance keeps cropping up in headlines yet again. Buckle up for an in-depth look at how the lines between innovation, privacy, and geopolitics continue to blur.

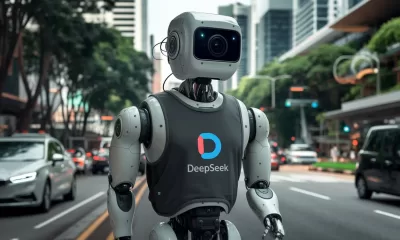

1. A Quick Glimpse: The DeepSeek Origin Story

DeepSeek is a Chinese AI lab based in the vibrant city of Hangzhou, renowned as a hotbed for tech innovation. Founded by Liang Wenfeng in 2023, this up-and-coming outfit entered the AI race by releasing DeepSeek R1, a free, open-source reasoning AI model that aspires to give OpenAI’s ChatGPT a run for its money. Yes, you read that correctly: they want to go toe-to-toe with the big boys, and they’re doing so by handing out a publicly accessible, open-source alternative. That’s certainly one way to make headlines.

But the real whirlwind started the moment DeepSeek decided to launch its chatbot service in various global markets, including South Korea. AI enthusiasts across the peninsula, always keen on exploring new and exciting digital experiences, jumped at the chance to test DeepSeek’s capabilities. After all, ChatGPT had set the bar high for AI-driven conversation, but more competition is typically a good thing—right?

2. The Dramatic Debut in South Korea

South Korea is famous for its ultra-connected society, blazing internet speeds, and fervent tech-savvy populace. New AI applications that enter the market usually either get a hero’s welcome or run into a brick wall of caution. DeepSeek managed both: its release in late January saw a flurry of downloads from curious users, but also raised eyebrows at regulatory agencies.

If you’re scratching your head wondering what exactly happened, here’s the gist: The Personal Information Protection Commission (PIPC), the country’s data protection watchdog, requested information from DeepSeek about how it collects and processes personal data. It didn’t take long for the PIPC to raise multiple red flags. As part of the evaluation, the PIPC discovered that DeepSeek had shared South Korean user data with none other than ByteDance, the parent company of TikTok. Now, ByteDance, by virtue of its global reach and Chinese roots, has often been in the crosshairs of governments worldwide. So, it’s safe to say that linking up with ByteDance in any form can ring alarm bells for data regulators.

3. PIPC’s Temporary Restriction: “Hold on, Not So Fast!”

Citing concerns about the app’s data collection and handling practices, the PIPC advised that DeepSeek should be temporarily blocked from local app stores. This doesn’t mean that if you’re an existing DeepSeek user, your app just disappears into thin air. The existing service, whether on mobile or web, still operates. But if you’re a brand-new user in South Korea hoping to download DeepSeek, you’ll be greeted by a big, fat “Not Available” message until further notice.

The PIPC also took the extra step of recommending that current DeepSeek users in South Korea refrain from typing any personal information into the chatbot until the final decision is made. “Better safe than sorry” seems to be the approach, or in simpler terms: They’re telling users to put that personal data on lockdown until DeepSeek can prove it’s abiding by Korean privacy laws.

All in all, this is a short-term measure meant to urge DeepSeek to comply with local regulations. According to the PIPC, downloads will be allowed again once the Chinese AI lab agrees to play by South Korea’s rulebook.

4. “I Didn’t Know!”: DeepSeek’s Response

In the aftermath of the announcement, DeepSeek appointed a local representative in South Korea—ostensibly to show sincerity, cooperation, and a readiness to comply. In a somewhat candid admission, DeepSeek said it had not been fully aware of the complexities of South Korea’s privacy laws. This statement has left many scratching their heads, especially given how data privacy is front-page news these days.

Still, DeepSeek has assured regulators and the public alike that it will collaborate closely to ensure compliance. No timelines were given, but observers say the best guess is “sooner rather than later,” considering the potential user base and the importance of the South Korean market for an ambitious AI project looking to go global.

5. The ByteDance Factor: Why the Alarm?

ByteDance is something of a boogeyman in certain jurisdictions, particularly because of its relationship with TikTok. Officials in several countries have expressed worries about personal data being funnelled to Chinese government agencies. Whether that’s a fair assessment is still up for debate, but it’s enough to create a PR nightmare for any AI or tech firm found to be sending data to ByteDance—especially if it’s doing so without crystal-clear transparency or compliance with local laws.

Now, we know from the PIPC’s investigation that DeepSeek had indeed transferred user data of South Korean users to ByteDance. We don’t know the precise nature of this data, nor do we know the volume. But for regulators, transferring data overseas—especially to a Chinese entity—raises the stakes concerning privacy, national security, and potential espionage risks. In other words, even the possibility that personal data could be misused is enough to make governments jump into action.

6. The Wider Trend: Governments Taking a Stand

South Korea is hardly the first to slam the door on DeepSeek. Other countries and government agencies have also expressed wariness about the AI newcomer:

- Australia: Has outright prohibited the use of DeepSeek on government devices, citing security concerns. This effectively follows the same logic that some governments have used to ban TikTok on official devices.

- Italy: The Garante (Italy’s data protection authority) went so far as to instruct DeepSeek to block its chatbot in the entire country. Talk about a strong stance!

- Taiwan: The government there has banned its departments from using DeepSeek’s AI solutions, presumably for similar security and privacy reasons.

But let’s not forget: For every country that shuts the door, there might be another that throws it wide open, because AI can be massively beneficial if harnessed correctly. Innovation rarely comes without a few bumps in the road, after all.

7. The Ministry of Trade, Energy, & More: Local Pushback from South Korea

Interestingly, not only did the PIPC step in, but South Korea’s Ministry of Trade, Industry and Energy, local police, and a state-run firm called Korea Hydro & Nuclear Power also blocked access to DeepSeek on official devices. You’ve got to admit, that’s a pretty heavyweight line-up of cautionary folks. If the overarching sentiment is “No way, not on our machines,” it suggests the apprehension is beyond your average “We’re worried about data theft.” These are critical agencies, dealing with trade secrets, nuclear power plants, and policing—so you can only imagine the caution that’s exercised when it comes to sensitive data possibly leaking out to a foreign AI platform.

The move mirrors the steps taken in other countries that have regulated or banned the use of certain foreign-based applications on official devices—especially anything that can transmit data externally. Safety first, and all that.

8. Privacy, Data Sovereignty, and the AI Frontier

Banning or restricting an AI app is never merely about code and servers. At the heart of all this is a debate around data sovereignty, national security, and ethical AI development. Privacy laws vary from one country to another, making it a veritable labyrinth for a new AI startup to navigate. China and the West have different ways of regulating data. As a result, an AI model that’s legally kosher in Hangzhou could be a breach waiting to happen in Seoul.

On top of that, data is the new oil, as they say, and user data is the critical feedstock for AI models. The more data you can gather, the more intelligent your system becomes. But this only works if your data pipeline is in line with local and international regulations (think GDPR in Europe, PIPA in South Korea, etc.). Step out of line, and you could be staring at multi-million-dollar fines, or worse—an outright ban.

9. The Competition with ChatGPT: A Deeper AI Context

DeepSeek’s R1 model markets itself as a competitor to OpenAI’s ChatGPT. ChatGPT, as we know, has garnered immense popularity worldwide, with millions of users employing it for everything from drafting emails to building software prototypes. If you want to get your AI chatbot on the global map these days, you’ve got to go head-to-head with ChatGPT (or at least position yourself as a worthy alternative).

But offering a direct rival to ChatGPT is no small task. You need top-tier language processing capabilities, a robust training dataset, a slick user interface, and a good measure of trust from your user base. The trust bit is where DeepSeek appears to have stumbled. Even if the technical wizardry behind R1 is top-notch, privacy missteps can overshadow any leaps in technology. The question is: Will DeepSeek be able to recover from this reputational bump and prove itself as a serious contender? Or will it end up as a cautionary tale for every AI startup thinking of going global?

10. AI Regulation in Asia: The New Normal?

For quite some time, Asia has been a buzzing hub of AI innovation. China, in particular, has a thriving AI ecosystem with a never-ending stream of startups. Singapore, Japan, and South Korea are also major players, each with its own unique approach to AI governance.

In South Korea specifically, personal data regulations have become tighter to keep pace with the lightning-fast digital transformation. The involvement of the PIPC in such a high-profile case sends a clear message: If you’re going to operate in our market, you’d better read our laws thoroughly. Ignorance is no longer a valid excuse.

We’re likely to see more of these regulatory tussles as AI services cross borders at the click of a mouse. With the AI arms race heating up, each country is attempting to carve out a space for domestic innovators while safeguarding the privacy of citizens. And as AI becomes more advanced—incorporating images, voice data, geolocation info, and more—expect these tensions to multiply. The cynics might say it’s all about protecting local industry, but the bigger question is: How do we strike the right balance between fostering innovation and ensuring data security?

11. The Geopolitical Undercurrents

Yes, this is partly about AI. But it’s also about politics, pure and simple. Relations between China and many Western or Western-aligned nations have been somewhat frosty. Every technology that emerges from China is now subject to intense scrutiny. This phenomenon isn’t limited to AI. We saw it with Huawei and 5G infrastructure. We’ve seen it with ByteDance and TikTok. We’re now witnessing it with DeepSeek.

From one perspective, you could argue it’s a rational protective measure for countries that don’t want critical data in the hands of an increasingly influential geopolitical rival. From another perspective, you might say it’s stifling free competition and punishing legitimate Chinese tech innovation. Whichever side you lean towards, the net effect is that Chinese firms often face an uphill battle getting their services accepted abroad.

Meanwhile, local governments in Asia are increasingly mindful of possible negative public sentiment. The last thing a regulatory authority wants is to be caught off guard while sensitive user data is siphoned off. Thus, you get sweeping measures like app bans and device restrictions. In essence, there’s a swirl of business, politics, and technology colliding in a perfect storm of 21st-century complexities.

12. The Road Ahead for DeepSeek

Even with this temporary ban, it’s not curtains for DeepSeek in South Korea. The PIPC has said explicitly that the block is only in place until the company addresses its concerns. Once DeepSeek demonstrates full compliance with privacy legislation, and presumably clarifies its data transfers to ByteDance, things might smooth out. Whether or not the company will face penalties is still an open question.

The bigger challenge is reputational. In the modern digital economy, trust is everything, especially for an AI application that relies on user input. The second a data scandal rears its head, user confidence can evaporate. DeepSeek will need to show genuine transparency: maybe a revised privacy policy, robust data security protocols, and a clear explanation of how user data is processed and stored.

At the same time, DeepSeek must also push forward on improving the AI technology itself. If they can’t deliver an experience that truly rivals ChatGPT or other established chatbots, then all the privacy compliance in the world won’t mean much.

DeepSeek AI Privacy—A Wrap-Up

At the end of the day, it’s a rocky start for DeepSeek in one of Asia’s most discerning markets. Yet, these regulatory clashes aren’t all doom and gloom. They illustrate that countries like South Korea are serious about adopting AI but want to make sure it’s done responsibly. Regulatory oversight might slow down the pace of innovation, but perhaps it’s a necessary speed bump to ensure that user data and national security remain safeguarded.

In the grand scheme, what’s happening with DeepSeek is indicative of a broader pattern. As AI proliferates, expect governments to impose stricter controls and more thorough compliance checks. Startups will need to invest in compliance from day one. Meanwhile, big players like ByteDance will continue to be magnets for controversy and suspicion.

For the curious, once the dust settles, we’ll see if DeepSeek emerges stronger, with a robust privacy framework, or limps away bruised from the entire affair. Let’s not forget they are still offering an open-source AI model, which is a bold and democratic approach to AI development. If they can balance that innovative spirit with data protection responsibilities, we could have a genuine ChatGPT challenger in our midst.

What Do YOU Think?

Is the DeepSeek saga a precursor to a world where national borders and strict data laws finally rein in the unchecked spread of AI, or will innovation outpace regulation once again—forcing governments to play perpetual catch-up?

There you have it, folks. The ongoing DeepSeek drama is a microcosm of the great AI wave that’s sweeping the world, shining a spotlight on issues of data protection, national security, and global competition. No matter which side of the fence you’re on, one thing is clear: the future of AI will be shaped as much by regulators and lawmakers as by visionary tech wizards. Subscribe to keep up to date on the latest happenings in Asia.

You may also like:

- DeepSeek in Singapore: AI Miracle or Security Minefield?

- DeepSeek’s Rise: The $6M AI Disrupting Silicon Valley’s Billion-Dollar Game

- Or try DeepSeek (where permitted!) by tapping here.

Author

Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.

You may like

-

Can PwC’s new Agent OS Really Make AI Workflows 10x Faster?

-

OpenAI’s New ChatGPT Image Policy: Is AI Moderation Becoming Too Lax?

-

Tencent Joins China’s AI Race with New T1 Reasoning Model Launch

-

Reality Check: The Surprising Relationship Between AI and Human Perception

-

DeepSeek in Singapore: AI Miracle or Security Minefield?

-

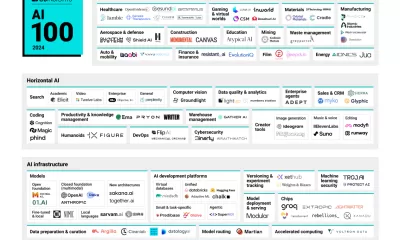

AI 100: The Hottest AI Startups of 2024 – Who’s In, Who’s Winning, and What’s Next for Asia?

News

OpenAI’s New ChatGPT Image Policy: Is AI Moderation Becoming Too Lax?

ChatGPT now generates previously banned images of public figures and symbols. Is this freedom overdue or dangerously permissive?

Published 3 weeks ago on March 30, 2025

By AIinAsia

TL;DR – What You Need to Know in 30 Seconds

- ChatGPT can now generate images of public figures, previously disallowed.

- Requests related to physical and racial traits are now accepted.

- Controversial symbols are permitted in strictly educational contexts.

- OpenAI argues for nuanced moderation rather than blanket censorship.

- Move aligns with industry trends towards relaxed content moderation policies.

Is AI Moderation Becoming Too Lax?

ChatGPT just got a visual upgrade—generating whimsical Studio Ghibli-style images that quickly became an internet sensation. But look beyond these charming animations, and you’ll see something far more controversial: OpenAI has significantly eased its moderation policies, allowing users to generate images previously considered taboo. So, is this a timely move towards creative freedom or a risky step into a moderation minefield?

ChatGPT’s new visual prowess

OpenAI’s latest model, GPT-4o, introduces impressive image-generation capabilities directly inside ChatGPT. With advanced photo editing, sharper text rendering, and improved spatial representation, ChatGPT now rivals specialised image AI tools.

But the buzz isn’t just about cartoonish visuals; it’s about OpenAI’s major shift on sensitive content moderation.

Moving beyond blanket bans

Previously, if you asked ChatGPT to generate an image featuring public figures—say Donald Trump or Elon Musk—it would simply refuse. Similarly, requests for hateful symbols or modifications highlighting racial characteristics (like “make this person’s eyes look more Asian”) were strictly off-limits.

No longer. Joanne Jang, OpenAI’s model behaviour lead, explained the shift clearly:

“We’re shifting from blanket refusals in sensitive areas to a more precise approach focused on preventing real-world harm. The goal is to embrace humility—recognising how much we don’t know, and positioning ourselves to adapt as we learn.”

In short, fewer instant rejections, more nuanced responses.

Exactly what’s allowed now?

With this update, ChatGPT can now depict public figures upon request, moving away from selectively policing celebrity imagery. OpenAI will allow individuals to opt out if they don’t want AI-generated images of themselves, shifting control back to users.

Controversially, ChatGPT also now accepts previously prohibited requests related to sensitive physical traits, like ethnicity or body shape adjustments, sparking fresh debate around ethical AI usage.

Handling the hottest topics

OpenAI is cautiously permitting requests involving controversial symbols—like swastikas—but only in neutral or educational contexts, never endorsing harmful ideologies. GPT-4o also continues to enforce stringent protections, especially around images involving children, setting even tighter standards than its predecessor, DALL-E 3.

Yet, loosening moderation around sensitive imagery has inevitably reignited fierce debates over censorship, freedom of speech, and AI’s ethical responsibilities.

A strategic shift or political move?

OpenAI maintains these changes are non-political, emphasising instead their longstanding commitment to user autonomy. But the timing is provocative, coinciding with increasing regulatory pressure and scrutiny from politicians like Republican Congressman Jim Jordan, who recently challenged tech companies about perceived biases in AI moderation.

This relaxation of restrictions echoes similar moves by other tech giants—Meta and X have also dialled back content moderation after facing similar criticisms. AI image moderation, however, poses unique risks due to its potential for widespread misinformation and cultural distortion, as Google’s recent controversy over historically inaccurate Gemini images has demonstrated.

What’s next for AI moderation?

ChatGPT’s new creative freedom has delighted users, but the wider implications remain uncertain. While memes featuring beloved animation styles flood social media, this same freedom could enable the rapid spread of less harmless imagery. OpenAI’s balancing act could quickly draw regulatory attention—particularly under the Trump administration’s more critical stance towards tech censorship.

The big question now: Where exactly do we draw the line between creative freedom and responsible moderation?

Let us know your thoughts in the comments below!

You may also like:

- China’s Bold Move: Shaping Global AI Regulation with Watermarks

- Or try ChatGPT now by tapping here.

News

Tencent Joins China’s AI Race with New T1 Reasoning Model Launch

Tencent launches its powerful new T1 reasoning model amid growing AI competition in China, while startup Manus gains major regulatory and media support.

Published 3 weeks ago on March 27, 2025

By AIinAsia

TL;DR – What You Need to Know in 30 Seconds

- Tencent has launched its upgraded T1 reasoning model

- Competition heats up in China’s AI market

- Beijing spotlights Manus

- Manus partners with Alibaba’s Qwen AI team

The Tencent T1 Reasoning Model Has Launched

Tencent has officially launched the upgraded version of its T1 reasoning model, intensifying competition within China’s already bustling artificial intelligence sector. Announced on Friday (21 March), the T1 reasoning model promises significant enhancements over its preview edition, including faster responses and improved processing of lengthy texts.

In a WeChat announcement, Tencent highlighted T1’s strengths, noting it “keeps the content logic clear and the text neat,” while maintaining an “extremely low hallucination rate,” meaning the model rarely invents false information.

The Turbo S Advantage

The T1 model is built on Tencent’s own Turbo S foundational language technology, introduced last month. According to Tencent, Turbo S notably outpaces competitor DeepSeek’s R1 model when processing queries, a claim backed up by benchmarks Tencent shared in its announcement. These tests showed T1 leading in several key knowledge and reasoning categories.

Tencent’s latest launch comes amid heightened rivalry sparked largely by DeepSeek, a Chinese startup whose powerful yet affordable AI models recently stunned global tech markets. DeepSeek’s success has spurred local companies like Tencent into accelerating their own AI investments.

Beijing Spotlights Rising AI Star Manus

The race isn’t limited to tech giants. Manus, a homegrown AI startup, also received a major boost from Chinese authorities this week. On Thursday, state broadcaster CCTV featured Manus for the first time, comparing its advanced AI agent technology favourably against more traditional chatbot models.

Manus became a sensation globally after unveiling what it claims to be the world’s first truly general-purpose AI agent, capable of independently making decisions and executing tasks with minimal prompting. This autonomy differentiates it sharply from existing chatbots such as ChatGPT and DeepSeek.

Crucially, Manus has now cleared significant regulatory hurdles. Beijing’s municipal authorities confirmed that a China-specific version of Manus’ AI assistant, Monica, is fully registered and compliant with the country’s strict generative AI guidelines, a necessary step before public release.

Further strengthening its domestic foothold, Manus recently announced a strategic partnership with Alibaba’s Qwen AI team, a collaboration likely to accelerate the rollout of Manus’ agent technology across China. Currently, Manus’ agent is accessible only via invite codes, with an eager waiting list already surpassing two million.

The Race Has Only Just Begun

With Tencent’s T1 now officially in play and Manus gaining momentum, China’s AI competition is clearly heating up, promising exciting innovations ahead. As tech giants and ambitious startups alike push boundaries, China’s AI landscape is becoming increasingly dynamic—leaving tech enthusiasts and investors eagerly watching to see who’ll take the lead next.

What do YOU think?

Could China’s AI startups like Manus soon disrupt Silicon Valley’s dominance, or will giants like Tencent keep the competition at bay?

You may also like:

- Tencent Takes on DeepSeek: Meet the Lightning-Fast Hunyuan Turbo S

- DeepSeek in Singapore: AI Miracle or Security Minefield?

- Alibaba’s AI Ambitions: Fueling Cloud Growth and Expanding in Asia

- Learn more by tapping here to visit the Tencent website.

News

Google’s Gemini AI is Coming to Your Chrome Browser — Here’s the Inside Scoop

Google is integrating Gemini AI into Chrome browser through a new experimental feature called Gemini Live in Chrome (GLIC). Here’s everything you need to know.

Published 4 weeks ago on March 25, 2025

By AIinAsia

TL;DR – What You Need to Know in 30 Seconds

- Google is integrating Gemini AI into its Chrome browser via an experimental feature called Gemini Live in Chrome (GLIC).

- GLIC adds a clickable Gemini icon next to Chrome’s window controls, opening a floating AI assistant modal.

- Currently being tested in Chrome Canary, the feature aims to streamline AI interactions without leaving the browser.

Welcoming Google’s Gemini AI to Your Chrome Browser

If there’s one thing tech giants love more than AI right now, it’s finding new ways to shove that AI into everything we use. And Google—never one to be left behind—is apparently stepping up their game by sliding their Gemini AI directly into your beloved Chrome browser. Yep, that’s the buzz on the digital street!

This latest AI adventure popped up thanks to eagle-eyed folks at Windows Latest, who spotted intriguing code snippets hidden in Google’s Chrome Canary version. Canary, if you haven’t played with it before, is Google’s playground version of Chrome. It’s the spot where they test all their wild and wonderful experimental features, and it looks like Gemini’s next up on stage.

Say Hello to GLIC: Gemini Live in Chrome

They’re calling this new integration “GLIC,” which stands for “Gemini Live in Chrome.” (Yes, tech companies never resist a snappy acronym, do they?) According to the early glimpses from Canary, GLIC isn’t quite ready for primetime yet—no shock there—but the outlines are pretty clear.

Once activated, GLIC introduces a nifty Gemini icon neatly tucked up beside your usual minimise, maximise, and close window buttons. Click it, and a floating Gemini assistant modal pops open, ready and waiting for your prompts, questions, or random curiosities.

Prefer a less conspicuous spot? Google’s thought of that too—GLIC can also nestle comfortably in your system tray, offering quick access to Gemini without cluttering your browser interface.

Why Gemini in Chrome Actually Makes Sense

Having Gemini hanging out front and centre in Chrome feels like a smart move—especially when you’re knee-deep in tabs and need quick answers or creative inspiration on the fly. No more toggling between browser tabs or separate apps; your AI assistant is literally at your fingertips.

But let’s keep expectations realistic here—this is still Canary we’re talking about. Features here often need plenty of polish and tweaking before making it to the stable Chrome we all rely on. But the potential? Definitely exciting.

What’s Next?

For now, we’ll keep a close eye on GLIC’s developments. Will Gemini revolutionise how we interact with Chrome, or will it end up another quirky experiment? Either way, Google’s bet on AI is clearly ramping up, and we’re here for it. Don’t forget to sign up to our occasional newsletter to stay informed about this and other happenings around AI in Asia and beyond.

Stay tuned—we’ll share updates as soon as Google lifts the curtains a bit further.

You may also like:

- Revolutionising Search: Google’s New AI Features in Chrome

- Google Gemini: How To Maximise Its Potential

- Google Gemini: The Future of AI

- Try Google Canary by tapping here, but be warned: it can be unstable!
