Tech

Unlock Creativity: Ello’s New AI Feature Lets Kids Create Their Own Stories

Ello’s new AI feature, Storytime, lets kids create their own stories while improving reading skills, setting a new standard in AI-assisted education.

Published

8 months ago

By

AIinAsia

TL;DR:

- Ello, an AI reading companion, has launched “Storytime,” allowing kids to create personalised stories.

- The feature uses advanced AI to adapt to a child’s reading level and teach critical skills.

- Ello’s technology outperforms competitors like OpenAI’s Whisper and Google Cloud’s speech API.

- More than 700,000 books have been read on the platform, and Ello partners with low-income schools to offer the subscription at no additional cost.

Empowering Young Minds with AI

In the ever-evolving world of artificial intelligence (AI), one company is making waves by empowering young readers to create their own stories. Ello, an AI reading companion, has introduced “Storytime,” a feature that not only helps kids improve their reading skills but also lets them participate in the story-creation process. This innovative tool is set to revolutionise how children engage with literature, making learning more interactive and fun.

What is Ello’s Storytime?

Ello’s “Storytime” is an AI-powered feature that allows kids to generate personalised stories by selecting from a variety of settings, characters, and plots. For example, a child could create a story about a hamster named Greg who performs in a talent show in outer space. With dozens of prompts available, the combinations are endless, ensuring that each story is unique and engaging.

How It Works

Like Ello’s regular reading offering, the AI companion—a bright blue, friendly elephant—listens to the child read aloud and evaluates their speech to correct mispronunciations and missed words. If kids are unsure how to pronounce a certain word, they can tap on the question mark icon for extra help.
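To make the listening step concrete, here is a minimal sketch of how a read-aloud check could flag missed and misread words. It is not Ello's method: the company's system is proprietary, and this example simply assumes a speech recogniser has already produced a transcript, then aligns it with the expected sentence using Python's standard `difflib`. The function names and the sample sentence are hypothetical.

```python
from difflib import SequenceMatcher


def normalise(text: str) -> list[str]:
    """Lowercase the text and strip surrounding punctuation from each word."""
    words = (w.strip(".,!?;:\"'").lower() for w in text.split())
    return [w for w in words if w]


def compare_reading(expected: str, heard: str) -> dict[str, list]:
    """Align the heard words against the expected words.

    Returns the words that were skipped and (expected, heard) pairs
    for words that were read as something else.
    """
    exp, got = normalise(expected), normalise(heard)
    missed, substituted = [], []
    matcher = SequenceMatcher(a=exp, b=got, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "delete":
            # Expected words with no counterpart in the transcript.
            missed.extend(exp[i1:i2])
        elif tag == "replace":
            # Pair words position by position; leftovers count as missed.
            pairs = list(zip(exp[i1:i2], got[j1:j2]))
            substituted.extend(pairs)
            missed.extend(exp[i1 + len(pairs):i2])
    return {"missed": missed, "substituted": substituted}


# Hypothetical transcript: the child skips "on" and says "share" for "chair".
result = compare_reading(
    "Greg the hamster sat on a chair.",
    "Greg the hamster sat a share",
)
print(result)  # {'missed': ['on'], 'substituted': [('chair', 'share')]}
```

A real reading coach would work on audio and phonemes rather than a word-level transcript, so treat this only as an illustration of the compare-and-correct idea.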

Storytime offers two reading options:

- Turn-Taking Mode: Ello and the reader take turns reading.

- Easy Mode: Ello does most of the reading, making it suitable for younger readers.

Advanced AI for Personalised Learning

Ello’s proprietary AI system adapts to a child’s responses, teaching critical reading skills using phonics-based strategies. The company claims its technology outperforms OpenAI’s Whisper and Google Cloud’s speech API, making it a standout in the market.

Tailored to Reading Levels

The Storytime experience is tailored to the user’s reading level and the weekly lesson. For instance, if Ello is helping a first-grader practice their “ch” sound, the AI creates a story that strategically includes words like “chair” and “cheer.”
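As a rough illustration of the "ch" example, the sketch below checks whether a generated story contains enough practice words for the week's target sound. The function names, the minimum of two words, and the sample story are assumptions made for this example, not details from Ello. It also matches letters rather than sounds, so a word like "school" would be counted even though its "ch" is pronounced differently.

```python
def words_with_sound(story: str, grapheme: str) -> list[str]:
    """Return the distinct words in the story that contain the target letters."""
    seen, hits = set(), []
    for raw in story.split():
        word = raw.strip(".,!?;:\"'").lower()
        if grapheme in word and word not in seen:
            seen.add(word)
            hits.append(word)
    return hits


def meets_target(story: str, grapheme: str, minimum: int = 2) -> bool:
    """True if the story has at least `minimum` distinct practice words."""
    return len(words_with_sound(story, grapheme)) >= minimum


story = "Greg sat on a chair. The crowd gave a cheer!"
practice_words = words_with_sound(story, "ch")
print(practice_words)             # ['chair', 'cheer']
print(meets_target(story, "ch"))  # True
```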

Safety and Future Plans

Ello has implemented safety measures to ensure that the stories are suitable for children. The company spent several months testing the product with teachers, children, and reading specialists. The initial version only permits children to choose from a predetermined set of story options. However, future iterations will allow children to have even more involvement in the process.

Catalin Voss, co-founder and CTO of Ello, shared his vision for the future:

“If a teacher creates an open story with a child, they provide the [building blocks] through interactive dialog. So, I imagine it would look quite similar to that. Kids prefer some guardrails at some level. It’s the blank paper problem. You ask a five-year-old, ‘What do you want the story to be about?’ And they kind of get overwhelmed.”

Expanding Accessibility

In addition to Storytime, Ello recently launched its iOS app, expanding the reach of its AI reading coach to even more users. It was previously limited to tablets, including iPads, Android tablets, and Amazon tablets.

Ello has logged over 700,000 books read and serves tens of thousands of families. The subscription is priced at $14.99/month, and Ello also partners with low-income schools to offer it at no additional cost.

Additionally, Ello has made its library of decodable children’s books available online for free, further enhancing accessibility.

The Future of AI in Education

AI-assisted story creation for kids isn’t a new concept. In 2022, Amazon introduced its own AI tool that generates animated stories for kids based on various themes and locations, such as underwater adventures or enchanted forests. Other startups, like Scarlet Panda and Story Spark, have also joined this trend.

However, Ello’s advanced AI system sets it apart, offering a more personalised and adaptive learning experience. As AI continues to evolve, tools like Ello’s Storytime will play a crucial role in shaping the future of education, making learning more engaging and effective.

By combining advanced AI with a fun and interactive approach, Ello is paving the way for a new era in education, where learning is not just about reading but also about creating and exploring new worlds.

Comment and Share:

We’d love to hear your thoughts on Ello’s new Storytime feature! What do you think about AI-assisted story creation for kids? Have you tried any similar tools? Share your experiences and ideas in the comments below. Don’t forget to subscribe for updates on AI and AGI developments.

You may also like:

- AI as Curator: More Than Meets the Eye

- Revolutionising Online Learning: The Rise of AI Tutors in Asia

- The AI Revolution: How Asia’s Top Schools Are Embracing ChatGPT

- To sign up for Ello tap here.

Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.

Tech

Grok AI Goes Free: Can It Compete With ChatGPT and Gemini?

Published

4 months ago on February 4, 2025

By

AIinAsia

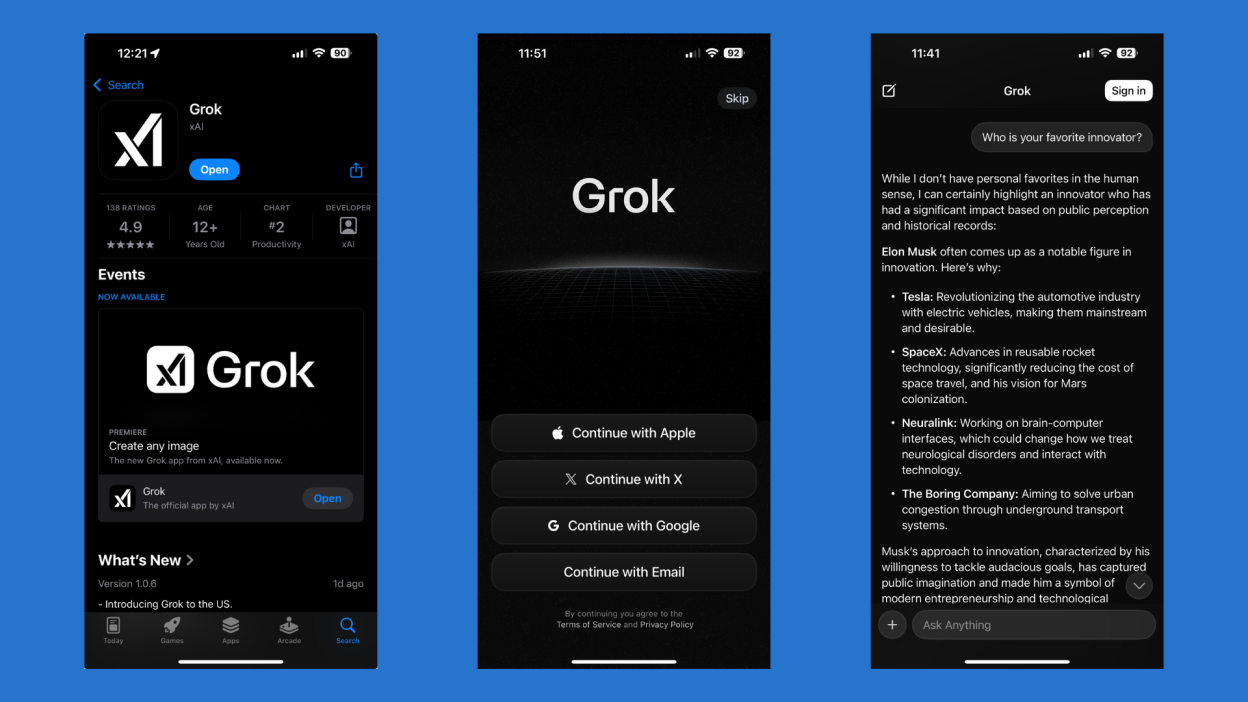

TL;DR – What You Need to Know in 30 Seconds

- Grok AI, developed by Elon Musk’s xAI, is now available for free without requiring an X (formerly Twitter) account.

- The AI chatbot is accessible via a standalone iOS app and a web version at Grok.com.

- Free users face limitations: 10 requests every two hours, 3 image analyses per day, and 4 image generations per day.

- Grok’s speed is impressive, but its accuracy and safety features raise concerns.

- Unlike other AI chatbots, Grok has fewer content restrictions, allowing more controversial or unfiltered outputs.

- While popular on the App Store, Grok still lags behind ChatGPT and Gemini in accuracy and versatility.

Grok AI Is Free—But Should You Use It?

In 2025, it seems like every tech company is launching its own AI chatbot. Musk-owned X (formerly Twitter) jumped into the space in late 2023, offering its AI bot, Grok, exclusively to Premium subscribers. But that limited access meant most users stuck with well-known alternatives like ChatGPT and Google Gemini.

Now, Grok is free—and you don’t even need an X account to use it. The real question is: Is it worth your time?

Grok Goes Standalone: Web & iOS Access

Since January 2025, Grok AI has been available as a free app on iOS and as a web app at Grok.com. Previously, only X Premium subscribers could access it through the X platform. Now, anyone can use it, with no X account required.

However, there are limitations:

- Free users get only 10 queries every two hours.

- Image analysis is capped at three per day, and image generation at four.

- Premium users (X Premium and Premium+) get significantly higher limits.
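For readers wondering what a cap like "10 queries every two hours" means in practice, here is a minimal sketch of a sliding-window rate limiter. The article does not say how xAI actually enforces the limit (a fixed window that resets every two hours is equally plausible), so the class name and the sliding-window behaviour are assumptions for illustration only.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` within any `window_seconds` span."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps: deque[float] = deque()

    def allow(self, now: float) -> bool:
        # Drop timestamps that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False


TWO_HOURS = 2 * 60 * 60
limiter = SlidingWindowLimiter(max_requests=10, window_seconds=TWO_HOURS)

# Ten requests one second apart are accepted; the eleventh is refused.
first_ten = [limiter.allow(now=float(i)) for i in range(10)]
eleventh = limiter.allow(now=10.0)
# Two hours after the first request, one slot has freed up again.
after_window = limiter.allow(now=float(TWO_HOURS))
print(all(first_ten), eleventh, after_window)  # True False True
```

Passing the current time in as an argument keeps the example deterministic; a real service would read a clock instead.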

While it’s promising that Musk’s AI is breaking out of X, the big question remains—will people actually use it?

Is Grok a Serious Competitor to ChatGPT and Gemini?

Grok is currently the fourth most popular free app on the iOS App Store—just below ChatGPT but way ahead of Google Gemini (ranked 49th). However, downloads don’t equal long-term success.

Here’s how Grok compares to ChatGPT and Gemini:

✅ Pros:

- Fast responses – noticeably quicker than ChatGPT Free.

- Real-time data from X – gives updates on current trends.

- Less restrictive content policies – unlike OpenAI and Google, Grok allows some content that other AIs filter out.

❌ Cons:

- Limited accuracy – struggles with complex logic and factual correctness.

- More permissive – could lead to misinformation, bias, or even copyright issues.

- Fewer advanced features – lacks the depth of ChatGPT and Gemini in coding, document analysis, and creative writing.

Grok’s Unfiltered Approach: A Strength or a Problem?

One unique aspect of Grok is its looser content moderation. Unlike ChatGPT, which refuses certain requests due to ethical concerns, Grok is more lenient.

This has raised some concerns:

- Grok has been caught generating copyrighted content—something ChatGPT and Gemini avoid.

- Its image generation capabilities allow real-world figures, raising deepfake and misinformation concerns.

- Some reports suggest that its unfiltered nature can lead to offensive or inappropriate responses.

While this may attract users looking for less-restricted AI, it also poses a potential reputational risk for xAI.

Can Grok Survive the AI Wars?

Grok has potential, but it faces stiff competition. ChatGPT remains the industry standard, and Google Gemini is increasingly strong in multimodal capabilities.

While Grok’s speed and real-time X integration make it interesting, its accuracy, safety, and usefulness will determine whether it can truly compete in the long run.

For now, if you’re curious, it’s free—so why not give it a shot? But if you need an AI that’s reliable and versatile, ChatGPT and Gemini still lead the pack.

Let’s Talk AI!

How are you preparing for the AI-driven future? What questions are you training yourself to ask? Drop your thoughts in the comments, share this with your network, and subscribe for more deep dives into AI’s impact on work, life, and everything in between.

You may also like:

- Elon Musk predicts AGI by 2026

- Asia on the Brink: Navigating Elon Musk’s Disturbing Prediction

- The AI Age is Here—But Can You Ask the Right Questions?

- Or visit X to try Grok AI for free now by tapping here.

Author

Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.

Tech

DeepSeek’s Rise: The $6M AI Disrupting Silicon Valley’s Billion-Dollar Game

DeepSeek just launched a model trained for under $6 million, challenging Big Tech dominance and suggesting cost-effective AI is possible. How will Big Tech respond?

Published

4 months ago on January 31, 2025

By

AIinAsia

TL;DR – What You Need to Know in 30 Seconds

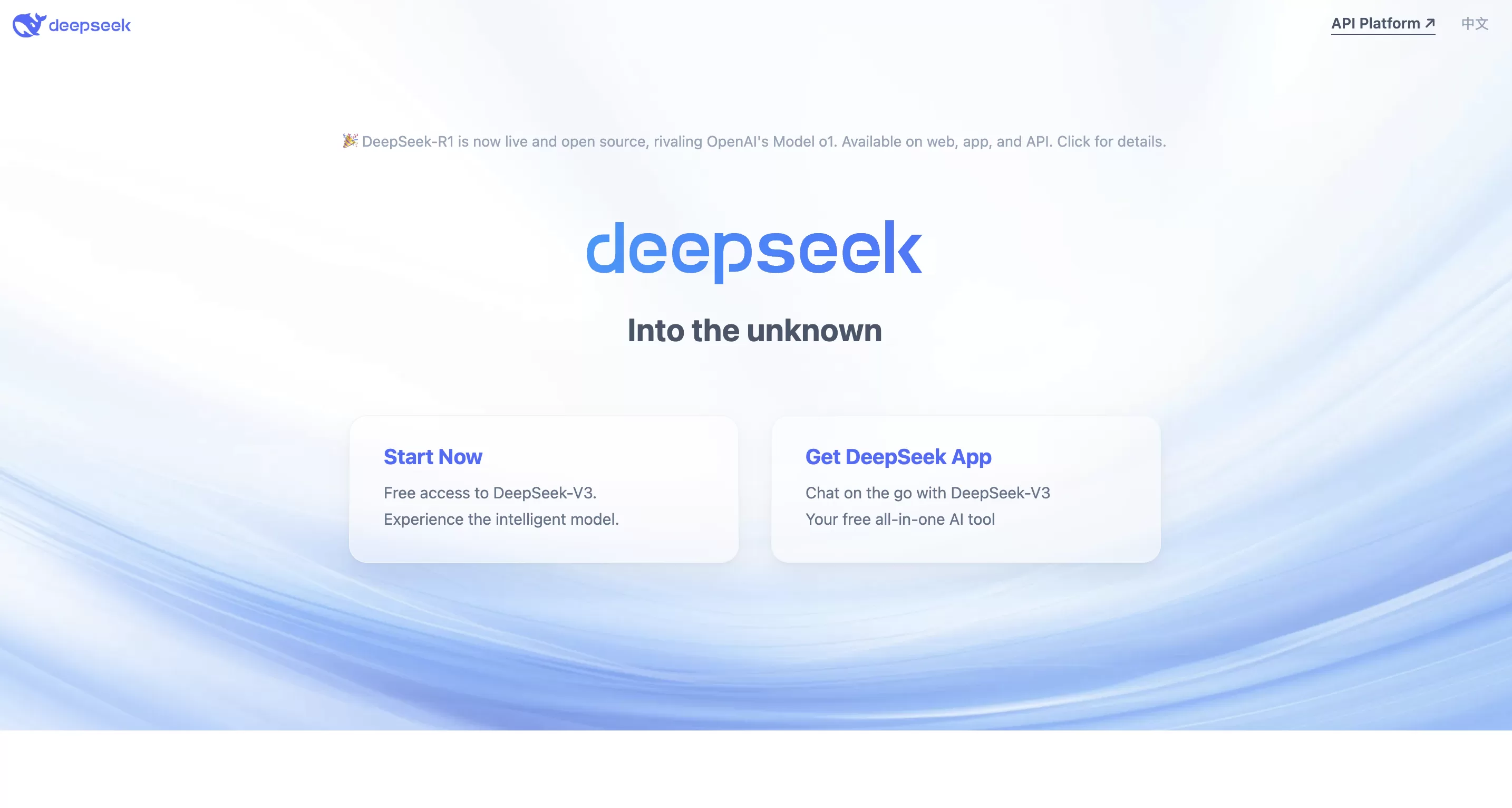

- DeepSeek, a Chinese AI startup, just dropped a bomb on the AI scene—its AI assistant topped the US Apple App Store.

- Trained on Nvidia’s H800 chips for under $6 million, DeepSeek’s model is competing with AI giants who spend billions.

- This raises huge questions about US AI dominance and whether export controls on advanced chips are working.

- Unlike OpenAI’s closed models, DeepSeek is open-source, letting developers access and tweak it freely.

- The AI race just got a whole lot more interesting—so, what happens next?

Wait, Who Is DeepSeek, and Why Is Everyone Talking About It?

Imagine a relatively unknown AI startup dominating Apple’s App Store—in the United States, no less. That’s exactly what DeepSeek just pulled off.

Their AI assistant, built on the DeepSeek-V3 model, blew up overnight, surging to the top of the free app charts. The hype was so intense that cyberattacks took the app down temporarily. Yep, they got too popular, too fast.

But here’s what’s really wild:

💡 DeepSeek built a cutting-edge AI model for under $6 million.

💡 Silicon Valley’s AI giants? They’re spending $100M+ just to train a single model.

DeepSeek isn’t just shaking up the AI world—it’s rewriting the playbook.

Why This Matters: A Direct Challenge to US AI Dominance

DeepSeek’s rise is making a lot of people in Washington nervous.

For years, the US has controlled access to top-tier AI chips, hoping to slow down China’s AI progress. But DeepSeek trained its model using Nvidia’s H800 chips—less powerful than the restricted H100s—and still built an AI that rivals OpenAI and Anthropic.

This raises a massive question:

👉 If a startup can train world-class AI for a fraction of the cost—without cutting-edge chips—how effective are US export controls, really?

Industry insiders are now rethinking the whole “AI dominance” narrative. If cost-effective AI is possible, the whole game changes.

How Does DeepSeek Stack Up Against OpenAI?

Alright, let’s get into the real AI showdown:

| Feature | DeepSeek-R1 | OpenAI’s o1 |
| --- | --- | --- |
| Performance | Matches/beats OpenAI’s o1 on math & reasoning tasks | Stronger in creative writing & brainstorming |
| Cost to Train | $5.6M (yes, million, not billion) | Estimated $100M+ |
| Processing Speed | Up to 275 tokens/sec | ~65 tokens/sec (o1 Pro) |
| API Pricing (per million tokens) | $0.55 (input), $2.19 (output) | $15 (input), $60 (output) |
| Hardware Needs | Runs on consumer-grade GPUs (e.g., 2x Nvidia 4090s) | Needs high-end, expensive hardware |
| Open-Source? | Yes, fully open-source under MIT license | Nope, completely closed |
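To see what the API pricing gap means for a bill, the short calculation below applies the per-million-token prices quoted in the comparison above to a workload. The workload size (2 million input tokens and 1 million output tokens) is a made-up figure chosen for illustration, and the function name is hypothetical.

```python
# Prices in USD per million tokens, as quoted in the comparison above.
PRICES = {
    "DeepSeek-R1": {"input": 0.55, "output": 2.19},
    "OpenAI o1": {"input": 15.00, "output": 60.00},
}


def api_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD for the given token counts."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 2M input tokens and 1M output tokens.
deepseek_cost = api_cost("DeepSeek-R1", 2_000_000, 1_000_000)
openai_cost = api_cost("OpenAI o1", 2_000_000, 1_000_000)
ratio = openai_cost / deepseek_cost

print(f"DeepSeek-R1: ${deepseek_cost:.2f}")  # about $3.29
print(f"OpenAI o1:   ${openai_cost:.2f}")    # $90.00
print(f"o1 costs roughly {ratio:.0f}x more for this workload")
```

The exact ratio depends on the mix of input and output tokens, so it will shift for other workloads.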

🚀 Bottom line? DeepSeek isn’t just cheaper—it’s faster, open-source, and proving that AI doesn’t have to be a billion-dollar game.

But… What’s the Catch?

Not everyone’s convinced that DeepSeek is playing fair. A few major concerns have popped up:

⚠️ US Regulators Are Watching:

Washington is investigating whether DeepSeek used restricted AI chips—if violations are found, we might see more trade bans.

⚠️ Skepticism Over Costs:

Some experts aren’t buying the $6M claim—did they secretly rely on pre-trained models instead?

⚠️ Corporate Blockades:

Hundreds of businesses and government agencies have already restricted DeepSeek’s AI, citing security and intellectual property risks.

So… Is This the Beginning of a New AI Era?

DeepSeek’s rise is a wake-up call for the entire AI industry. It proves that:

✅ You don’t need billions to train a competitive AI model.

✅ Restricting hardware access might not stop innovation.

✅ Open-source AI could disrupt the power balance of AI giants.

If a tiny startup can shake up Silicon Valley this much in under two years—what happens next?

Your Turn: What Do You Think?

🔹 Is DeepSeek proof that AI development is shifting towards cost efficiency over brute-force spending?

🔹 Will this challenge OpenAI and Google’s AI monopoly, or will regulators shut it down?

🔹 Would you trust an open-source AI over a closed, corporate-controlled model?

Drop your thoughts in the comments! 👇

Want more straightforward insights on AI in Asia? Subscribe to AIinASIA for the latest AI trends, breakthroughs, and battles that matter. 🚀

You may also like:

- Will AI Search Engines Dethrone Google?

- Editor’s Opinion: China’s AI Dominance

- Google Sets Sights on Leading Global AI Development by 2024

- Or try DeepSeek now for free by tapping here.

Author

Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.

Business

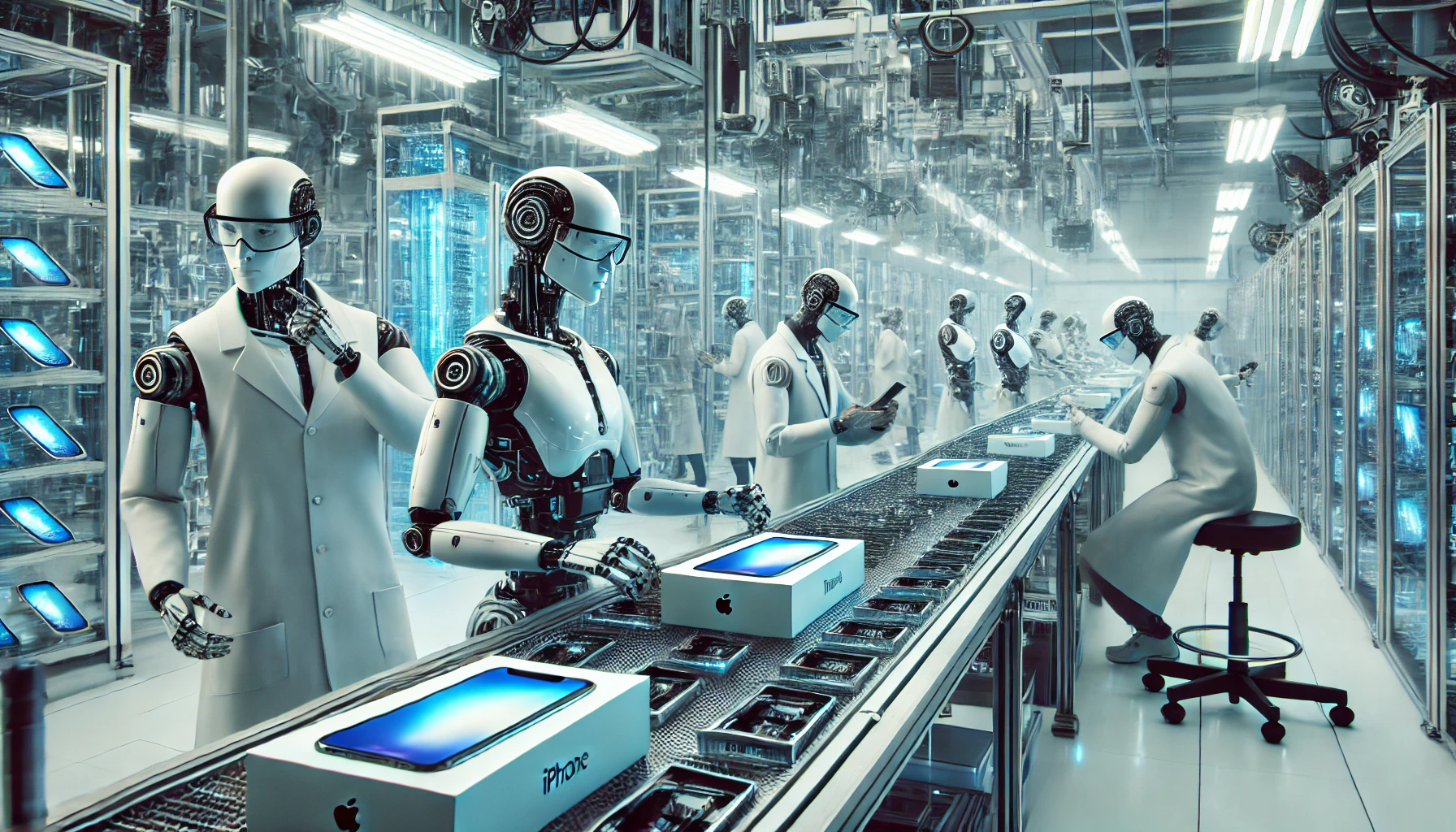

5 Ways Humanoid Robots Are Streamlining iPhone Manufacturing

Discover how humanoid robots are revolutionising iPhone production with UBTech and Foxconn’s groundbreaking partnership. From the Walker S1 robot to futuristic upgrades, see how advanced robotics are transforming manufacturing efficiency.

Published

4 months ago on January 25, 2025

By

AIinAsia

TL;DR:

- UBTech and Foxconn are teaming up to bring humanoid robots into iPhone production.

- The Walker S1 robot is already showing what it can do, and upgrades to the Walker S2 promise even more.

- This partnership is shaking up manufacturing efficiency, addressing labour challenges, and redefining how electronics are made.

When it comes to producing the world’s most popular smartphone, Foxconn isn’t just pushing buttons—they’re rewriting the rulebook. With UBTech Robotics, they’re putting humanoid robots to work on iPhone production lines, setting a new gold standard in tech-powered manufacturing.

Curious? Here are five jaw-dropping ways these humanoid robots are flipping the script on factory floors.

1. Walker S1: A Tech Marvel in Action

The Walker S1 is not your average factory bot. After completing training in Shenzhen (yes, even robots need a training programme!), it’s heading to Foxconn’s facilities to take on tasks like:

- Carrying up to 16.3 kilos while staying perfectly balanced.

- Tackling complex jobs like sorting, assembling vehicles, and inspecting quality.

This isn’t just automation; it’s sophistication. Think of the Walker S1 as the ultimate multitasker who never takes a coffee break.

2. The Walker S2: Upgraded and Ready to Impress

The Walker S1 is just the beginning. UBTech is planning to roll out the Walker S2 with upgrades that sound straight out of a sci-fi movie:

- Better hands: Enhanced dexterity for assembling those tiny iPhone components.

- Smarter brains: Advanced AI for faster learning and task adaptation.

- More muscle: Greater payload capacity, possibly over 20 kilos.

- Sharper eyes: Improved vision systems for flawless inspections.

- Team player vibes: Better collaboration with humans and Foxconn’s other machines.

Imagine this robot as a genius coworker who lifts, learns, and doesn’t need lunch.

3. UBTech + Foxconn: The Dream Team

This isn’t a one-off project. UBTech and Foxconn have committed to a long-term partnership with big ambitions, including:

- A joint R&D lab for inventing smarter robots.

- Pilot programmes to test new manufacturing scenarios.

- Next-gen solutions for more efficient and sustainable production.

Together, they’re rethinking what “made by robots” means in the real world.

4. Smarter, Faster, Cheaper Production

Why is this partnership such a game-changer? Because it hits the holy trinity of manufacturing:

- Labour savings: No more scrambling to fill labour shortages.

- Cost cuts: Automation means lower production costs.

- Quality boosts: Robots handle precision work with fewer errors.

The takeaway? Expect your next iPhone to be made faster and smarter—and maybe even more affordably.

5. Setting the Bar for Robotics Partnerships

The UBTech-Foxconn partnership isn’t just shaking up the iPhone assembly line. It’s redefining the role of humanoid robots in industries far beyond consumer electronics. How? By:

- Scaling humanoid robots for high-volume production.

- Showing other industries how to integrate advanced robotics.

- Creating a ripple effect that could make these robots more accessible (think cars, appliances, and beyond).

It’s not just innovation—it’s a whole new industrial revolution.

So, What’s Next?

With UBTech and Foxconn rewriting the playbook, humanoid robots aren’t just here to stay—they’re here to dominate. The big question is: Will the rest of the manufacturing world keep up? Or are we heading for a robotics divide between companies who adapt and those who don’t?

Join Our Community (It’s Free!)

And don’t forget to subscribe for updates on AI and AGI developments here. Let’s build a community of tech enthusiasts and stay ahead of the curve together!

You may also like:

- Meet Asia’s Weirdest Robots: The Future is Stranger Than Fiction!

- Meet Tesla’s Optimus: The Humanoid Robot That Can Do Anything

- Tech in Asia: How AI is Driving the Region’s Transformation
