Tools

ChatGPT’s New Voice: A Revolution in AI, but at What Cost?

Explore the implications of ChatGPT’s advanced voice mode, which mimics human emotion and conversation flow, raising both excitement and concern.

Published

8 months ago

By

AIinAsia

TL;DR:

- ChatGPT’s advanced voice mode mimics human emotion and conversation flow.

- Users may form intimate relationships with the chatbot, raising concerns.

- The evolution of language and social behaviour contributes to this phenomenon.

- Benefits include reduced loneliness, but risks involve social isolation and altered expectations in human relationships.

Imagine chatting with an AI so human-like that it takes breaths, responds to interruptions, and even picks up on your emotional cues. That’s the latest update from ChatGPT, and it’s raising both excitement and concern. Let’s dive into the fascinating yet worrying world of AI’s increasingly human-like interactions.

The Dawn of Advanced Voice Mode

OpenAI, the company behind ChatGPT, is testing a new feature called “advanced voice mode.” This update promises more natural, real-time conversations, complete with emotional and non-verbal cues. If you’re a paid subscriber, expect to see this feature in the coming months.

But what makes advanced voice mode so remarkable? Unlike traditional voice assistants, it mimics the flow of human conversation: it simulates breathing, handles interruptions smoothly and conveys emotions that suit the exchange. It’s also designed to infer your emotional state from cues in your voice, which makes interactions feel strikingly lifelike.

The Evolution of Intimacy

Humans have an innate capacity for friendship and intimacy, rooted in our evolutionary past. Our ancestors used verbal “grooming” to build alliances, leading to the development of complex language and social behaviour. Conversation, especially when it involves personal disclosures, fosters intimacy.

It’s no surprise, then, that people are forming intimate relationships with chatbots. Text-based interactions can create a sense of closeness, and voice-based assistants like Siri and Alexa receive countless marriage proposals despite their non-human voices.

The Power of Voice

The introduction of voice in AI amplifies this effect. Voice is the primary sensory experience of conversation, and when an AI sounds human, the emotional connection deepens. OpenAI’s advanced voice mode takes this to a new level, making it easier for users to form social relationships with ChatGPT.

But how can we prevent this? The solution is straightforward: don’t give AI a voice, and don’t make it capable of conversational back-and-forth. However, this would mean not creating the product in the first place. The power of ChatGPT lies in its ability to mimic human traits, making it an excellent social companion.

The Writing on the Lab Chalkboard

The potential for users to form relationships with chatbots has been clear since the first chatbots emerged nearly 60 years ago. Computers have long been recognised as social actors, and the advanced voice mode of ChatGPT is just the latest increment in this evolution.

Last year, users of the virtual friend platform Replika AI found themselves unexpectedly cut off from their chatbots’ most advanced functions. Despite Replika being less advanced than the new version of ChatGPT, users formed deep attachments, highlighting the risks and benefits of such technology.

Benefits and Risks

Benefits

- Reduced Loneliness: Many people find comfort in chatbots that listen non-judgmentally, reducing feelings of loneliness and isolation.

- Insights into Culture: Studying how machines change the way we behave can offer deep insights into how culture itself works.

Risks

- Social Isolation: Time spent with chatbots is time not spent with friends and family, potentially leading to social isolation.

- Altered Expectations: Interacting with polite, submissive chatbots may alter expectations in human relationships.

- Contamination of Existing Relationships: As OpenAI notes, chatting with bots can contaminate existing relationships, with users expecting human partners to behave like chatbots.

The Future of AI Interactions

The future of AI interactions is both exciting and concerning. As AI becomes more human-like, it offers unprecedented opportunities for companionship and emotional support. However, the risks of social isolation and altered expectations in human relationships are real. As we navigate the AI landscape, it’s crucial to stay informed about the latest developments and their implications.

Comment and Share:

Have you ever formed an emotional connection with an AI? How do you think advanced voice mode will change the way we interact with technology? Share your thoughts and experiences below, and don’t forget to subscribe for updates on AI and AGI developments.

You may also like:

- ChatGPT Voice Mode: The Future of AI Interaction is Here!

- In the Shadow of AI: The Uniquely Human Qualities That Endure

- Human-AI Differences: Artificial Intelligence and the Quest for AGI in Asia

- To learn more about ChatGPT voice, tap here.

Author

Discover more from AIinASIA

Subscribe to get the latest posts sent to your email.

You may like

Life

The Dirty Secret Behind Your Favourite AI Tools

This piece explores the hidden environmental costs of AI, focusing on electricity and water consumption by popular models like ChatGPT. It unpacks why companies don’t disclose energy usage, shares sobering statistics, and spotlights efforts pushing for transparency and sustainability in AI development.

Published

June 5, 2025

By

AIinAsia

The environmental cost of artificial intelligence is rising fast — yet the industry remains largely silent. Here’s why that needs to change.

TL;DR — What You Need To Know

- AI systems like ChatGPT and Google Gemini require immense electricity and water for training and daily use

- There’s no universal standard or regulation requiring AI companies to report their energy use

- Estimates suggest AI-related electricity use could reach as much as 326 terawatt-hours per year by 2028

- Lack of transparency hides the true cost of AI and hinders efforts to build sustainable infrastructure

- Organisations like the Green Software Foundation are working to make AI’s carbon footprint more measurable

AI Is Booming, and So Is Its Environmental Impact

AI might be the hottest acronym of the decade, but one of its most inconvenient truths remains largely hidden from view: the vast, unspoken energy toll of its everyday use.

With more than 400 million weekly users, OpenAI’s ChatGPT ranks among the five most visited websites globally. And it’s just the tip of the digital iceberg. Generative AI is now baked into apps, search engines, work tools, and even dating platforms. It’s ubiquitous — and ravenous.

Yet for all the attention lavished on deepfakes, hallucinations and the jobs AI might replace, its environmental footprint receives barely a whisper.

Why AI’s Energy Use is Such a Mystery

Training a large language model is a famously resource-intensive endeavour. But what’s less known is that every single prompt you feed into a chatbot also eats up energy — often equivalent to seconds or minutes of household appliance use.

The problem is we still don’t really know how much energy AI systems consume. There are no legal requirements for companies to disclose model-specific carbon emissions and no global framework for doing so. It’s the wild west, digitally speaking.

Why? Three reasons:

- Commercial secrecy: Disclosing energy metrics could expose architectural efficiencies and other competitive insights

- Technical complexity: Models operate across dispersed infrastructure, making attribution a challenge

- Narrative management: Big Tech prefers to market AI as a net-positive force, not a planetary liability

The result is a conspicuous silence — one that researchers, journalists and environmentalists are now struggling to fill.

The stats we do have are eye-watering

MIT Technology Review recently offered a sobering benchmark: a 5-second AI-generated video might burn the same energy as an hour-long microwave session.

Even a text-based chatbot query could cost up to 6,700 joules. Scale that by billions of queries per day and you’re looking at a formidable energy footprint. Add visuals or interactivity and the costs balloon.
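To see how quickly that adds up, here is a back-of-the-envelope sketch in Python. It takes the 6,700-joule upper-bound figure above and an assumed volume of one billion queries a day; that volume is a hypothetical round number chosen for illustration, not a reported statistic.

```python
# Back-of-the-envelope scaling of per-query energy to daily and yearly totals.
# Assumptions: 6,700 J is the upper-bound per-query figure quoted in the text;
# QUERIES_PER_DAY is a hypothetical round number, not a reported statistic.

JOULES_PER_KWH = 3.6e6           # 1 kWh = 3.6 million joules
JOULES_PER_QUERY = 6_700         # upper-bound estimate for one text query
QUERIES_PER_DAY = 1_000_000_000  # hypothetical: one billion queries per day


def daily_energy_kwh(joules_per_query: float, queries_per_day: float) -> float:
    """Total energy per day, in kilowatt-hours."""
    return joules_per_query * queries_per_day / JOULES_PER_KWH


def yearly_energy_twh(joules_per_query: float, queries_per_day: float) -> float:
    """Total energy per year, in terawatt-hours (1 TWh = 1e9 kWh)."""
    return daily_energy_kwh(joules_per_query, queries_per_day) * 365 / 1e9


if __name__ == "__main__":
    per_day = daily_energy_kwh(JOULES_PER_QUERY, QUERIES_PER_DAY)
    per_year = yearly_energy_twh(JOULES_PER_QUERY, QUERIES_PER_DAY)
    print(f"{per_day:,.0f} kWh per day")   # about 1.86 million kWh
    print(f"{per_year:.2f} TWh per year")  # about 0.68 TWh
```

Swap in other per-query figures or volumes to see how sensitive the total is. Those inputs are precisely the numbers AI companies do not currently disclose.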

The broader data centre landscape is equally stark. In 2024, U.S. data centres were estimated to use around 200 terawatt-hours of electricity, roughly Thailand’s annual consumption. By 2028, AI alone could use as much as 326 terawatt-hours a year.

That’s equivalent to:

- Powering 22% of American households for a year

- Emitting as much carbon as driving over 300 billion miles, or more than 1,600 round trips between Earth and the sun
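The mileage and round-trip comparisons describe the same carbon total, and the arithmetic linking them is easy to check. This sketch assumes a mean Earth-sun distance of roughly 93 million miles.

```python
# Sanity-check that "over 300 billion miles" matches "over 1,600 round trips to the sun".
# Assumption: a mean Earth-sun distance of roughly 93 million miles.

EARTH_SUN_MILES = 93_000_000
DRIVING_MILES = 300_000_000_000  # "over 300 billion miles"


def round_trips_to_sun(miles: float, one_way_miles: float = EARTH_SUN_MILES) -> float:
    """How many Earth-sun round trips a given distance covers."""
    return miles / (2 * one_way_miles)


if __name__ == "__main__":
    print(f"{round_trips_to_sun(DRIVING_MILES):,.0f} round trips")  # roughly 1,613
```

The result, about 1,613, lines up with the "over 1,600" figure, so the two comparisons are one carbon estimate stated two ways.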

Water usage, often overlooked, is another major concern. AI infrastructure guzzles water for cooling, posing risks during heatwaves and water shortages. As AI adoption grows, so too does this hidden drain on natural resources.

What’s being done — and who’s trying to fix it

A handful of organisations are beginning to push for accountability.

The Green Software Foundation — backed by Microsoft, Google, Siemens, and others — is creating sustainability standards tailored for AI. Through its Green AI Committee, it champions:

- Lifecycle carbon accounting

- Open-source tools for energy tracking

- Real-time carbon intensity metrics
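Lifecycle carbon accounting is usually boiled down to a simple formula. The Green Software Foundation’s Software Carbon Intensity (SCI) specification, for instance, scores software as operational emissions (energy used multiplied by the carbon intensity of the grid) plus a share of embodied hardware emissions, divided by a functional unit such as one query: SCI = ((E × I) + M) per R. The sketch below follows that shape; every input value is a hypothetical placeholder, not a measurement of any real model.

```python
from dataclasses import dataclass


@dataclass
class CarbonInputs:
    energy_kwh: float       # E: energy consumed over the measurement window
    grid_g_per_kwh: float   # I: carbon intensity of the electricity (gCO2e per kWh)
    embodied_g: float       # M: share of hardware manufacturing emissions (gCO2e)
    functional_units: int   # R: e.g. number of queries served in the window


def carbon_per_unit(inputs: CarbonInputs) -> float:
    """SCI-style score ((E * I) + M) / R, in grams CO2e per functional unit."""
    if inputs.functional_units <= 0:
        raise ValueError("functional_units must be positive")
    operational = inputs.energy_kwh * inputs.grid_g_per_kwh
    return (operational + inputs.embodied_g) / inputs.functional_units


if __name__ == "__main__":
    # Hypothetical window: 1,000 kWh on a 400 g/kWh grid, 50 kg embodied, 1 million queries.
    window = CarbonInputs(energy_kwh=1_000, grid_g_per_kwh=400,
                          embodied_g=50_000, functional_units=1_000_000)
    print(f"{carbon_per_unit(window):.2f} gCO2e per query")  # 0.45
```

The same energy drawn from a cleaner grid (a lower `grid_g_per_kwh`) gives a lower score, which is why real-time carbon intensity matters alongside raw energy figures.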

Meanwhile, governments are cautiously stepping in. The EU AI Act encourages sustainability via risk assessments. In the UK, the AI Opportunities Action Plan and the British Standards Institution are working on guidance for measuring AI’s carbon toll.

Still, these are fledgling efforts in an industry sprinting ahead. Without enforceable mandates, they risk becoming toothless.

Why transparency matters more than ever for AI carbon emissions

We can’t manage what we don’t measure. And in AI, the stakes are immense.

Without accurate data, regulators can’t design smart policies. Infrastructure planners can’t future-proof grids. Consumers and businesses can’t make ethical choices.

Most of all, AI firms can’t credibly claim to build a better world while masking the true environmental cost of their platforms. Sustainability isn’t a PR sidecar — it must be built into the business model.

So yes, generative AI may be dazzling. But if it’s to earn its place in a sustainable digital future, the first step is brutally simple: tell us how much it costs to run.

You May Also Like:

- AI Powering Data Centres and Draining Energy

- AI Increases Google’s Carbon Footprint by Nearly 50%

- The Thirst of AI: A Looming Water Crisis in Asia

- You can read more from the IEA by tapping here.


Life

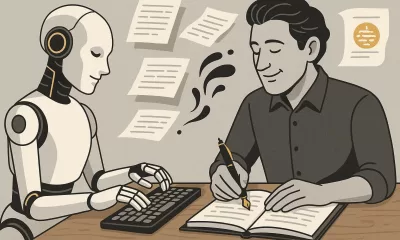

How To Teach ChatGPT Your Writing Style

This warm, practical guide explores how professionals can shape ChatGPT’s tone to match their own writing style. From defining your voice to smart prompting and memory settings, it offers a step-by-step approach to turning ChatGPT into a savvy writing partner.

Published

June 4, 2025

By

AIinAsia

TL;DR — What You Need To Know

- ChatGPT can mimic your writing tone with the right examples and prompts

- Start by defining your personal style, then share it clearly with the AI

- Use smart prompting, not vague requests, to shape tone and rhythm

- Custom instructions and memory settings help ChatGPT “remember” you

- It won’t be perfect, but it can become a valuable creative sidekick

Start by defining your voice

Before ChatGPT can write like you, you need to know how you write. This may sound obvious, but most professionals haven’t clearly articulated their voice. They just write.

Think about your usual tone. Are you friendly, brisk, poetic, slightly sarcastic? Do you use short, direct sentences or long ones filled with metaphors? Swear words? Emojis? Do you write like you talk?

Collect a few of your own writing samples: a newsletter intro, a social media post, even a Slack message. Read them aloud. What patterns emerge? Look at rhythm, vocabulary and mood. That’s your signature.

Show ChatGPT your writing

Now that you’ve defined your style, show ChatGPT what it looks like. You don’t need to upload a manifesto. Just say something like:

“Here are three examples of my writing. Please analyse my tone, sentence structure and word choice. I’d like you to write like this moving forward.”

Then paste your samples. Follow up with:

“Can you describe my writing style in a few bullet points?”

You’re not just being polite. This step ensures you’re aligned. It also helps ChatGPT to frame your voice accurately before trying to imitate it.

Be sure to offer varied, representative examples. The more you reflect your daily writing habits across different formats (emails, captions, articles), the sharper the mimicry.
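If you repeat this step often, it can help to keep your samples in one place and assemble the opening message programmatically, then paste the result into a chat as usual. A minimal sketch, assuming your samples are plain strings; `build_style_prompt` and `FOLLOW_UP` are hypothetical names for illustration, not a ChatGPT feature.

```python
# The follow-up from the article, sent as a separate message once ChatGPT has replied.
FOLLOW_UP = "Can you describe my writing style in a few bullet points?"


def build_style_prompt(samples: list[str]) -> str:
    """Assemble the opening 'analyse my writing' message from your own samples."""
    if not samples:
        raise ValueError("provide at least one writing sample")
    header = (
        f"Here are {len(samples)} examples of my writing. "
        "Please analyse my tone, sentence structure and word choice. "
        "I'd like you to write like this moving forward."
    )
    # Number each sample and mark where it starts, so mixed formats
    # (an email, a caption, a Slack message) don't blur together.
    blocks = [f"Example {i}:\n{text.strip()}" for i, text in enumerate(samples, start=1)]
    return "\n\n".join([header, *blocks])


if __name__ == "__main__":
    my_samples = [
        "Quick one from me this week: the launch slipped, and that's fine.",
        "Three things I learned shipping our first app. Thread.",
        "Can we push standup to 10? Coffee machine's broken. Again.",
    ]
    print(build_style_prompt(my_samples))
    print(FOLLOW_UP)
```

Keeping the follow-up separate mirrors the two-step exchange described above: ChatGPT reads the samples first, then summarises what it noticed.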

Prompt with purpose

Once ChatGPT knows how you write, the next step is prompting. And this is where most people stumble. Saying, “Make it sound like me” isn’t quite enough.

Instead, try:

“Rewrite this in my tone: warm, conversational, and a little cheeky.”

“Avoid sounding corporate. Use contractions, variety in sentence length and clear rhythm.”

Yes, you may need a few back-and-forths. But treat it like any editorial collaboration — the more you guide it, the better the results.

And once a prompt nails your style? Save it. That one sentence could be reused dozens of times across projects.

Use memory and custom instructions

ChatGPT now lets you store tone and preferences in memory. It’s like briefing a new hire once, rather than every single time.

Start with Custom Instructions (in Settings > Personalisation). Here, you can write:

“I use conversational English with dry humour and avoid corporate jargon. Short, varied sentences. Occasionally cheeky.”

Once saved, these tone preferences apply by default.
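Custom Instructions live in the ChatGPT app. If you also reach a model through an API, a rough analogue is to send the same style brief as a system message at the start of every request. A minimal sketch, assuming a chat format of role/content dictionaries; it only builds the payload and makes no network call, so the client library and model choice are left to you, and `with_style` is a hypothetical helper name.

```python
# The style brief from the article, written once and reused.
STYLE_BRIEF = (
    "I use conversational English with dry humour and avoid corporate jargon. "
    "Short, varied sentences. Occasionally cheeky."
)


def with_style(user_text: str, brief: str = STYLE_BRIEF) -> list[dict[str, str]]:
    """Build a chat payload that carries the style brief as a system message by default."""
    return [
        {"role": "system", "content": brief},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for message in with_style("Draft a two-line update announcing our new office."):
        print(f"{message['role']}: {message['content']}")
```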

There’s also memory, where ChatGPT remembers facts and stylistic traits across chats. Paid users have access to broader, more persistent memory. Free users get a lighter version but still benefit.

Just say:

“Please remember that I like a formal tone with occasional wit.”

ChatGPT will confirm and update accordingly. You can always check what it remembers under Settings > Personalisation > Memory.

Test, tweak and give feedback

Don’t be shy. If something sounds off, say so.

“This is too wordy. Try a punchier version.”

“Tone down the enthusiasm. Make it sound more reflective.”

Ask ChatGPT why it wrote something a certain way. Often, the explanation will give you insight into how it interpreted your tone, and let you correct misunderstandings.

As you iterate, this feedback loop will sharpen your AI writing partner’s instincts.

Use ChatGPT as a creative partner, not a clone

This isn’t about outsourcing your entire writing voice. AI is a tool — not a ghostwriter. It can help organise your thoughts, start a draft or nudge you past a creative block. But your personality still counts.

Some people want their AI to mimic them exactly. Others just want help brainstorming or structure. Both are fine.

The key? Don’t expect perfection. Think of ChatGPT as a very keen intern with potential. With the right brief and enough examples, it can be brilliant.

You May Also Like:

- Customising AI: Train ChatGPT to Write in Your Unique Voice

- Elon Musk predicts AGI by 2026

- ChatGPT Just Quietly Released “Memory with Search” – Here’s What You Need to Know

- Or try these prompt ideas out on ChatGPT by tapping here


Tools

Upgrade Your ChatGPT Game With These 5 Prompt Tips

Most people ask ChatGPT the wrong way. These 5 prompt upgrades will train the AI to think sharper and deliver smarter answers every time.

Published

May 29, 2025

By

AIinAsia

What if the problem isn’t ChatGPT — but how you’re talking to it? As businesses across Asia scramble to integrate AI into daily workflows, far too many professionals are getting half-baked answers and wasting time refining prompts. Precision equals clarity. And clarity starts with knowing what to ask. Read on for 5 elite ChatGPT prompt tips.

TL;DR — What You Need To Know

- ChatGPT often gives vague or flawed answers because the prompt lacks structure

- Adding 5 targeted follow-up requests can dramatically improve output quality

- Smart prompting forces the AI to self-check, ask better questions, and aim higher

- These techniques turn ChatGPT into a sharper thinking partner for professionals

1. Make ChatGPT Score Its Own Work

Like a student handing in homework and marking their own essay, ChatGPT performs better when it’s made to judge itself. Give it a red pen:

“I want you to assess your response against this checklist. Rate your answer on a scale of 1-10 for each of these criteria: accuracy, completeness, relevance, clarity, and practical usefulness…”

By forcing the AI to reflect on its own output, you raise the baseline for what counts as “done.” You get more precise responses — and fewer excuses to settle for less.
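If you use this checklist regularly, a small helper can generate the request and then pull the scores out of the reply, so you know which criteria to push back on. A minimal sketch: it assumes you ask for one "criterion: score" line per criterion (a format the model will usually, but not always, follow), and the threshold of 7 is an arbitrary choice.

```python
import re

# The five criteria quoted in the prompt above.
CRITERIA = ("accuracy", "completeness", "relevance", "clarity", "practical usefulness")


def self_check_prompt(criteria: tuple[str, ...] = CRITERIA) -> str:
    """Build the self-assessment request, asking for one 'criterion: score' line each."""
    listed = ", ".join(criteria)
    return (
        "I want you to assess your response against this checklist. "
        f"Rate your answer on a scale of 1-10 for each of these criteria: {listed}. "
        "Reply with one line per criterion in the form 'criterion: score'."
    )


def weak_criteria(reply: str, criteria: tuple[str, ...] = CRITERIA, threshold: int = 7) -> list[str]:
    """Return the criteria scored below `threshold`, in checklist order.

    Criteria missing from the reply are skipped rather than guessed.
    """
    weak = []
    for name in criteria:
        match = re.search(rf"{re.escape(name)}\s*:\s*(\d+)", reply, flags=re.IGNORECASE)
        if match and int(match.group(1)) < threshold:
            weak.append(name)
    return weak


if __name__ == "__main__":
    print(self_check_prompt())
    sample_reply = "Accuracy: 9\nCompleteness: 6\nRelevance: 8\nClarity: 5\nPractical usefulness: 7"
    print(weak_criteria(sample_reply))  # ['completeness', 'clarity']
```

Feeding the flagged criteria back in a follow-up message turns the self-check into a short revision loop.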

2. Demand Reasoning, Not Just Answers

Don’t let ChatGPT blag its way through your questions like a first-year intern. Make it show its working — maths teacher-style.

“For each main point in your response, explain your reasoning process…”

This single move helps you spot weak assumptions and gives you greater confidence in the result. Especially important when decisions — or reputations — are on the line.

3. Make It Ask the Right Questions First

If your prompt sounds like a half-baked WhatsApp message at 2am, don’t expect brilliance. Before ChatGPT replies, let it play detective.

“Before giving me any answer, point out exactly what I’ve left out of my request…”

It’s the kind of intelligent friction that turns vague ideas into actionable prompts. Think of it as ChatGPT playing devil’s advocate before it turns into your co-pilot.

4. Find Your Blind Spots Early

Even the best minds occasionally forget the obvious. Enter ChatGPT, your AI-powered Socrates, asking the awkward questions others won’t.

“Review my request and tell me what angles I’m completely missing…”

This elevates the conversation. ChatGPT stops being a yes-man and starts acting like the curious challenger every business leader needs.

5. Push It to Think Like an Expert

You wouldn’t ask a junior exec to run your quarterly strategy — so don’t let ChatGPT deliver B-grade insights. Demand elite thinking.

“Respond to my question as if you were in the top 1% of experts in my field…”

This unlocks deeper insights, smarter trade-offs, and far more useful recommendations — especially when your questions relate to strategy, branding, or customer psychology.
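The five upgrades can also be kept as reusable snippets and attached to any question, in whatever order suits the task. A minimal sketch: the dictionary keys are hypothetical labels, and each snippet uses only the part of the prompt quoted above, so extend them to suit your own work.

```python
# Hypothetical labels for the five upgrades; each snippet reuses only the
# part of the prompt quoted in the article.
UPGRADES = {
    "self_score": (
        "I want you to assess your response against this checklist. Rate your answer "
        "on a scale of 1-10 for each of these criteria: accuracy, completeness, "
        "relevance, clarity, and practical usefulness."
    ),
    "reasoning": "For each main point in your response, explain your reasoning process.",
    "gaps_first": "Before giving me any answer, point out exactly what I've left out of my request.",
    "blind_spots": "Review my request and tell me what angles I'm completely missing.",
    "expert": "Respond to my question as if you were in the top 1% of experts in my field.",
}


def upgrade(question: str, *names: str) -> str:
    """Append the chosen upgrades, in the order given, after the question."""
    unknown = [n for n in names if n not in UPGRADES]
    if unknown:
        raise KeyError(f"unknown upgrade(s): {', '.join(unknown)}")
    return "\n\n".join([question.strip(), *(UPGRADES[n] for n in names)])


if __name__ == "__main__":
    print(upgrade("How should we price our new premium tier?", "gaps_first", "expert"))
```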

Get Better Answers From ChatGPT Every Time

The best ChatGPT users aren’t the ones with the fanciest tools. They’re the ones who ask better questions. Use these five prompt upgrades as your new default. Over time, the difference isn’t just better responses — it’s better thinking.

Share YOUR ChatGPT prompt tips!

Do your ChatGPT answers leave you with digital drivel? Share your own tips and tricks in the comments below!

You may also like:

- 7 Effective AI Prompt Strategies to Elevate Your Results Instantly

- Game-Changing Google Gemini Tips for Tech-Savvy Asians

- Or tap here to try these ChatGPT prompt tips now

